It's very dependent on the model. The number of parameters gives a rough guide to speed, even if some layers are more performant than others.

So far I only have a CRNN done, as I've stuck with the streaming models.

It's a ‘hey marvin’ model: https://drive.google.com/file/d/1bGf_b8imzPZJNYDUWR94mWuV0deSMVzM/view?usp=share_link

Index 0 of the output is the ‘heymarvin’ KW.

I'm not really sure about timing, but on my PC it's about 0.014763929 seconds per 1 s KW. That doesn't mean all that much, though, as it's more a measurement of my PC.
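A figure like this is essentially a real-time factor: compute time divided by the duration of audio processed. A minimal sketch of measuring it, assuming you wrap one streaming inference step (set_tensor/invoke/get_tensor) in a callable; `real_time_factor` is a hypothetical helper and the workload in the example is only a stand-in, so like any such number it mostly measures the machine it runs on.

```python
import time


def real_time_factor(process_chunk, chunks, chunk_seconds):
    """Return seconds of compute spent per second of audio.

    process_chunk: hypothetical callable run once per chunk, e.g. a wrapper
                   around the interpreter's set_tensor()/invoke()/get_tensor().
    chunks:        list of audio chunks, fed in order.
    chunk_seconds: duration of one chunk (0.020 for 320 samples at 16 kHz).
    """
    start = time.perf_counter()
    for chunk in chunks:
        process_chunk(chunk)
    elapsed = time.perf_counter() - start
    return elapsed / (len(chunks) * chunk_seconds)


if __name__ == "__main__":
    # 50 x 20 ms chunks = 1 s of silent audio, with a stand-in workload
    chunks = [[0.0] * 320 for _ in range(50)]
    rtf = real_time_factor(lambda c: sum(x * x for x in c), chunks, 0.020)
    print(f"{rtf:.6f} s per 1 s of audio, {1 / rtf:.1f}x faster than real time")
```

At 0.014763929 s per 1 s of audio, that works out to roughly 68x faster than real time.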

There is a benchmark tool (see Performance measurement | TensorFlow Lite); I've just never used it.

Usually I am aiming for a Pi Zero 2 W, as I think they make great satellites when you can actually buy them.

It's running at 25% on a single core, and with Python and everything, htop says 165 MB.

The main thing is that it runs and is low latency, but really I'm going off what Google have already benchmarked:

crnn_state (parameters: 467K)

| Model | Accuracy (%) | Model size | Latency |
| --- | --- | --- | --- |
| float | 97.1 | 1800 KB | 7.1 ms |
| quant | 96.9 | 593 KB | 2.6 ms |
| stream float | 96.3 | 1700 KB | 0.2 ms |
| stream quant | 95.8 | 472 KB | 0.1 ms |
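Since size tracks parameter count, a back-of-envelope check on these sizes is parameters × bytes per weight: 4 bytes for float32, 1 byte for int8 quantization. A minimal sketch; it ignores graph metadata, tensors left at higher precision and other file overhead, so it is only an estimate.

```python
def estimated_size_kb(num_params, bytes_per_weight):
    """Rough model size in KB (1 KB = 1000 bytes), ignoring file overhead."""
    return num_params * bytes_per_weight / 1000


params = 467_000  # crnn_state parameter count
print(f"float32: ~{estimated_size_kb(params, 4):.0f} KB")  # listed: 1800 KB / 1700 KB
print(f"int8:    ~{estimated_size_kb(params, 1):.0f} KB")  # listed: 593 KB / 472 KB
```

The estimates (about 1868 KB and 467 KB) land close to the float and stream quant sizes, which is why the parameter count is a reasonable first guide.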

The only test I can think of is running 100 hours of LibriSpeech through it. As soon as you start adding anything random, such as noise, or supply your own KWs, it all starts getting very subjective.

I have a bit of a hack here that runs the LibriSpeech train-clean-100 set (https://www.openslr.org/resources/12/train-clean-100.tar.gz) through the model:

```python
import glob
import os

import numpy as np
import soundfile as sf
import tensorflow as tf


def softmax_stable(x):
    # Subtract the max before exponentiating to avoid overflow
    e = np.exp(x - np.max(x))
    return e / e.sum()


def kw_detect(rec, sample_rate, duration, reset_state):
    # Shape one 20 ms chunk as a (1, 320) batch for the streaming model
    rec = np.reshape(rec, (1, int(sample_rate * duration)))
    # rec = np.multiply(rec, 8)

    if reset_state:
        # Zero all external states (e.g. after a detection)
        for s in range(len(input_details1)):
            inputs1[s] = np.zeros(input_details1[s]['shape'], dtype=np.float32)

    # Make prediction from model
    interpreter1.set_tensor(input_details1[0]['index'], rec)

    # Set input states (index 1...)
    for s in range(1, len(input_details1)):
        interpreter1.set_tensor(input_details1[s]['index'], inputs1[s])

    interpreter1.invoke()
    output_data = interpreter1.get_tensor(output_details1[0]['index'])

    # Get output states and set them back to input states,
    # which will be fed in the next inference cycle
    for s in range(1, len(input_details1)):
        # The function `get_tensor()` returns a copy of the tensor data.
        # Use `tensor()` in order to get a pointer to the tensor.
        inputs1[s] = interpreter1.get_tensor(output_details1[s]['index'])

    out_softmax = softmax_stable(output_data[0])
    return out_softmax[0]  # index 0 is the 'heymarvin' KW


# Parameters
duration = 0.020  # 20 ms chunks
sample_rate = 16000
chunk = int(sample_rate * duration)  # 320 samples

# Load the TFLite model and allocate tensors.
interpreter1 = tf.lite.Interpreter(
    model_path="../GoogleKWS/models2/crnn_state/quantize_opt_for_size_tflite_stream_state_external/stream_state_external.tflite",
    num_threads=2)
interpreter1.allocate_tensors()

# Get input and output tensors.
input_details1 = interpreter1.get_input_details()
output_details1 = interpreter1.get_output_details()

inputs1 = [np.zeros(d['shape'], dtype=np.float32) for d in input_details1]

kw_hit_qty = 0
total_duration = 0.0
hit_txt = []
reset_state = True

for txtfile in glob.glob('/media/stuart/New Volume/Users/Stuart/Downloads/Noise/LibriSpeech/**/*.txt', recursive=True):
    dirtxt = os.path.dirname(txtfile)
    with open(txtfile) as f:
        lines = f.readlines()

    for line in lines:
        frame = 0
        kw_hit = False
        # Each transcript line starts with the utterance ID
        content = line.split(" ", 1)
        flacfile = os.path.join(dirtxt, content[0] + '.flac')
        data, samplerate = sf.read(flacfile, dtype='float32')
        total_duration += len(data) / samplerate

        # Note: only the first 100 frames (2 s) of each file are scanned,
        # while total_duration counts the whole file
        while frame < 100:
            start = chunk * frame
            rec = data[start:start + chunk]
            if len(rec) < chunk:
                break
            kw_prob = kw_detect(rec, sample_rate, duration, reset_state)
            if kw_prob > 0.9999:
                # Any detection on LibriSpeech is a false trigger
                kw_hit = True
                reset_state = True
                print(flacfile, kw_prob, frame)
            else:
                reset_state = False
            frame += 1

        if kw_hit:
            kw_hit_qty += 1
            hit_txt.append(flacfile)

    # Progress: files with a false trigger, hours processed
    print(kw_hit_qty, total_duration / 3600)

print(kw_hit_qty, total_duration / 3600)
```

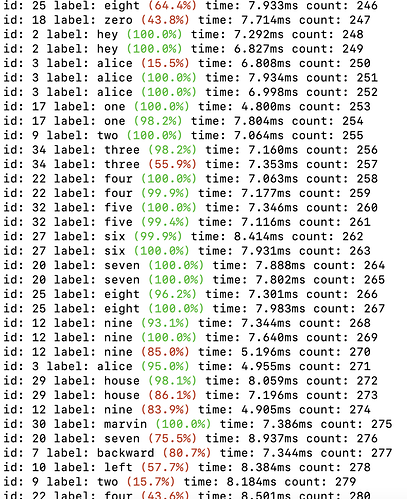
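Both test scripts slice each file into consecutive, non-overlapping 320-sample (20 ms) frames and drop any trailing partial frame. A minimal sketch of that chunking as a reusable generator; `iter_frames` and its `max_frames` parameter are hypothetical names, not part of the scripts.

```python
import numpy as np


def iter_frames(data, frame_len=320, max_frames=None):
    """Yield consecutive non-overlapping frames of frame_len samples.

    A trailing partial frame is dropped, like the `len(rec) < 320` check.
    max_frames=None scans the whole signal; max_frames=100 reproduces
    the scripts' `while frame < 100` limit.
    """
    n_frames = len(data) // frame_len
    if max_frames is not None:
        n_frames = min(n_frames, max_frames)
    for i in range(n_frames):
        yield data[i * frame_len:(i + 1) * frame_len]


if __name__ == "__main__":
    audio = np.zeros(16000 + 100, dtype=np.float32)  # 1 s plus a partial frame
    frames = list(iter_frames(audio))
    print(len(frames), frames[0].shape)  # 50 (320,)
```

In the scripts, the inner loop would then become `for frame, rec in enumerate(iter_frames(data, max_frames=100)):` followed by the `kw_detect()` call.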

The KW test is far more subjective, but these are the 1400 files I had in the test set:

```python
import glob
import os
import time

import numpy as np
import soundfile as sf
import tensorflow as tf


def softmax_stable(x):
    # Subtract the max before exponentiating to avoid overflow
    e = np.exp(x - np.max(x))
    return e / e.sum()


def kw_detect(rec, sample_rate, duration, reset_state):
    # Shape one 20 ms chunk as a (1, 320) batch for the streaming model
    rec = np.reshape(rec, (1, int(sample_rate * duration)))
    # rec = np.multiply(rec, 8)

    if reset_state:
        # Zero all external states (e.g. after a detection)
        for s in range(len(input_details1)):
            inputs1[s] = np.zeros(input_details1[s]['shape'], dtype=np.float32)

    # Make prediction from model
    interpreter1.set_tensor(input_details1[0]['index'], rec)

    # Set input states (index 1...)
    for s in range(1, len(input_details1)):
        interpreter1.set_tensor(input_details1[s]['index'], inputs1[s])

    interpreter1.invoke()
    output_data = interpreter1.get_tensor(output_details1[0]['index'])

    # Get output states and set them back to input states,
    # which will be fed in the next inference cycle
    for s in range(1, len(input_details1)):
        # The function `get_tensor()` returns a copy of the tensor data.
        # Use `tensor()` in order to get a pointer to the tensor.
        inputs1[s] = interpreter1.get_tensor(output_details1[s]['index'])

    out_softmax = softmax_stable(output_data[0])
    return out_softmax[0]  # index 0 is the 'heymarvin' KW


# Parameters
duration = 0.020  # 20 ms chunks
sample_rate = 16000
chunk = int(sample_rate * duration)  # 320 samples

# Load the TFLite model and allocate tensors.
interpreter1 = tf.lite.Interpreter(
    model_path="../GoogleKWS/models2/crnn_state/quantize_opt_for_size_tflite_stream_state_external/stream_state_external.tflite",
    num_threads=2)
interpreter1.allocate_tensors()

# Get input and output tensors.
input_details1 = interpreter1.get_input_details()
output_details1 = interpreter1.get_output_details()

inputs1 = [np.zeros(d['shape'], dtype=np.float32) for d in input_details1]

kw_miss_qty = 0
total_duration = 0.0
missed_files = []

start_time = time.time()

for kwfile in glob.glob(os.path.join('../GoogleKWS/data2/testing/heymarvin', '*.wav')):
    # Each KW file is scored from a fresh state
    reset_state = True
    frame = 0
    kw_hit = False
    data, samplerate = sf.read(kwfile, dtype='float32')
    total_duration += len(data) / samplerate

    while frame < 100:
        start = chunk * frame
        rec = data[start:start + chunk]
        if len(rec) < chunk:
            break
        kw_prob = kw_detect(rec, sample_rate, duration, reset_state)
        if kw_prob > 0.9999:
            kw_hit = True
            reset_state = True
        else:
            reset_state = False
        frame += 1

    # A KW file with no detection is a miss
    if not kw_hit:
        kw_miss_qty += 1
        missed_files.append(kwfile)

print(kw_miss_qty, total_duration / 3600)
print(time.time() - start_time)
```

With `kw_prob > 0.999` it gives 12 false positives (triggers on the 100 hours of LibriSpeech) for 1.21% false negatives (missed KWs out of the 1400 KW files).

With `kw_prob > 0.9999` it gives 1 false positive in the 100 hours for 3.78% false negatives on the 1400 KW files.
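Wake-word comparisons usually quote false triggers per hour, so it helps to normalise the LibriSpeech counts. A minimal sketch using the detection counts on the 100 hours above; `false_alarms_per_hour` is a hypothetical helper name.

```python
def false_alarms_per_hour(detections, hours):
    """Normalise detections on keyword-free audio to false alarms per hour."""
    return detections / hours


for threshold, detections in [(0.999, 12), (0.9999, 1)]:
    rate = false_alarms_per_hour(detections, 100.0)
    print(f"> {threshold}: {rate:.2f} per hour, one every {1 / rate:.1f} hours")
```

That gives 0.12 false alarms per hour (roughly one every 8.3 hours) at 0.999 and 0.01 per hour at 0.9999.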

The 1400 KW files are here: https://drive.google.com/file/d/1dreV5fBIwzdcJnXEueYwc4NeWCyufdS-/view?usp=share_link

Those are the benchmarks to beat. False positives and false negatives need to be quoted together, as it's swings and roundabouts: fewer false positives will give more false negatives. With respect to Picovoice, I seem to be able to roughly match them on false negatives (missed KWs) while being 10x better on false positives (triggers on LibriSpeech), or match them on false positives with 3x fewer false negatives.
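Because the two error types trade off against each other, one way to quote them together is to sweep the threshold over stored scores and report both figures at each setting. A minimal sketch, assuming you saved the peak KW probability per keyword-free file and per KW file; `sweep_thresholds` is hypothetical, the demo scores are made up, and it ignores the state reset after a detection, so it only approximates re-running the scripts at each threshold.

```python
import numpy as np


def sweep_thresholds(neg_peaks, neg_hours, kw_peaks, thresholds):
    """Return (threshold, false alarms per hour, miss rate %) rows.

    neg_peaks: peak KW probability per keyword-free file (e.g. LibriSpeech);
               a file counts as one false alarm if its peak exceeds the threshold.
    neg_hours: total duration of the keyword-free audio in hours.
    kw_peaks:  peak KW probability per keyword file; a file is a miss if
               its peak does not exceed the threshold.
    """
    neg_peaks = np.asarray(neg_peaks)
    kw_peaks = np.asarray(kw_peaks)
    rows = []
    for t in thresholds:
        false_alarms = int(np.sum(neg_peaks > t))
        misses = int(np.sum(kw_peaks <= t))
        rows.append((t, false_alarms / neg_hours, 100.0 * misses / len(kw_peaks)))
    return rows


if __name__ == "__main__":
    # Made-up scores purely to illustrate the trade-off
    rng = np.random.default_rng(0)
    neg = 1 - rng.exponential(0.001, size=5000)
    kw = 1 - rng.exponential(0.00002, size=1400)
    for t, fa, miss in sweep_thresholds(neg, 100.0, kw, [0.999, 0.9999, 0.999915]):
        print(f"> {t}: {fa:.2f} false alarms/hour, {miss:.2f}% missed")
```

Raising the threshold can only lower the false-alarm rate and raise the miss rate, which is the swings-and-roundabouts effect in numbers.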

I guess just test it and see what you think. This one streams from a microphone:

```python
import threading

import numpy as np
import sounddevice as sd
import tensorflow as tf


def softmax_stable(x):
    # Subtract the max before exponentiating to avoid overflow
    e = np.exp(x - np.max(x))
    return e / e.sum()


def sd_callback(indata, frames, time_info, status):
    global max_rec

    # Notify if errors
    if status:
        print('Error:', status)

    # (frames, channels) -> (1, rec_samples) batch for the streaming model
    rec = np.reshape(indata, (1, rec_samples))

    # Make prediction from model
    interpreter1.set_tensor(input_details1[0]['index'], rec)

    # Set input states (index 1...)
    for s in range(1, len(input_details1)):
        interpreter1.set_tensor(input_details1[s]['index'], inputs1[s])

    interpreter1.invoke()
    output_data = interpreter1.get_tensor(output_details1[0]['index'])

    # Get output states and set them back to input states,
    # which will be fed in the next inference cycle
    for s in range(1, len(input_details1)):
        # The function `get_tensor()` returns a copy of the tensor data.
        # Use `tensor()` in order to get a pointer to the tensor.
        inputs1[s] = interpreter1.get_tensor(output_details1[s]['index'])

    # Track the peak input level since the last detection
    lvl = np.max(np.abs(rec))
    if lvl > max_rec:
        max_rec = lvl

    out_softmax = softmax_stable(output_data[0])
    if out_softmax[0] > 0.999:
        print("Marvin:", out_softmax[0], max_rec)
        # Reset the streaming states after a detection
        for s in range(len(input_details1)):
            inputs1[s] = np.zeros(input_details1[s]['shape'], dtype=np.float32)
        max_rec = 0.0


# Parameters
kw_duration = 1.0
rec_duration = 0.020
sample_rate = 16000
num_channels = 1
max_rec = 0.0
rec_samples = int((sample_rate * kw_duration) * rec_duration)  # 320 samples = 20 ms

sd.default.latency = ('high', 'high')
sd.default.dtype = ('float32', 'float32')
sd.default.device = 'donglein'  # input device name; change to suit your setup

# Load the TFLite model and allocate tensors.
interpreter1 = tf.lite.Interpreter(model_path="stream_state_external.tflite")
interpreter1.allocate_tensors()

# Get input and output tensors, really should be static copies to use as KW resets
input_details1 = interpreter1.get_input_details()
output_details1 = interpreter1.get_output_details()

inputs1 = [np.zeros(d['shape'], dtype=np.float32) for d in input_details1]

# Start streaming from microphone
with sd.InputStream(channels=num_channels,
                    samplerate=sample_rate,
                    blocksize=rec_samples,
                    callback=sd_callback):
    threading.Event().wait()
```
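On the "really should be static copies to use as KW resets" comment: one way is to build the zero-state templates once from the input details and hand out fresh copies on reset, instead of re-deriving shapes inside the audio callback. A minimal sketch; `make_state_templates` and `reset_states` are hypothetical helpers that only rely on the `'shape'` key of each entry from `get_input_details()`, which is faked here with plain dicts so it runs without a model.

```python
import numpy as np


def make_state_templates(input_details):
    """Build zero arrays once, one per model input (index 0 is audio)."""
    return [np.zeros(d['shape'], dtype=np.float32) for d in input_details]


def reset_states(states, templates):
    """Replace each state with a fresh copy of its zero template.

    Copying keeps later in-place edits of a state from touching the template.
    """
    for i, template in enumerate(templates):
        states[i] = template.copy()


if __name__ == "__main__":
    # Stand-in for interpreter.get_input_details(): audio plus two states
    details = [{'shape': np.array([1, 320])},
               {'shape': np.array([1, 1, 40])},
               {'shape': np.array([1, 256])}]
    templates = make_state_templates(details)
    states = [t.copy() for t in templates]
    states[1] += 1.0  # pretend the model updated a state
    reset_states(states, templates)
    print(all(not s.any() for s in states))
```

In the callback above, the detection branch would then call `reset_states(inputs1, templates)` with `templates` built once after `get_input_details()`.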

All the scripts are super hacky and just for testing, but I will probably create a C/C++ runner eventually, as Python just sucks for any DSP. This is very light, though: it only uses sounddevice, as the MFCC is embedded in the model, so there is no external MFCC step.

The problem with non-streaming KWS is that audio is a stream. Video is also a stream, but of images, and you can use a single image on its own; a single audio sample means nothing.

So you have to run a non-streaming KWS repeatedly as you ‘inch’ along the incoming stream, and you can quickly pick up a lot of load, or not get the granularity to catch the KW in the right position and fail to detect it.

The streaming model I am using works in 20 ms chunks, so matching that would mean running a non-streaming KWS 50 times a second. You could likely run it less often, as your samples can shift within the window, but that can often reduce accuracy. Generally my samples are fairly centred, but that doesn't matter with a 20 ms step, whereas the fewer times a second you run it, the more chance you have of missing the optimal recognition position.
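To put rough numbers on that, using the crnn_state latencies from Google's benchmark table earlier (so the absolute figures won't match a Pi): re-running the non-streaming quant model every 20 ms costs 50 × 2.6 ms = 130 ms of compute per second of audio, against 50 × 0.1 ms = 5 ms for the streaming quant model. A minimal sketch of that arithmetic; the hop sizes are just example values.

```python
def compute_ms_per_second(latency_ms, hop_ms):
    """Inference time per second of audio when running once every hop_ms."""
    runs_per_second = 1000.0 / hop_ms
    return runs_per_second * latency_ms


# crnn_state latencies from Google's benchmark table (ms per inference)
NON_STREAM_QUANT_MS = 2.6
STREAM_QUANT_MS = 0.1

print(f"streaming, 20 ms chunks: {compute_ms_per_second(STREAM_QUANT_MS, 20):.0f} ms/s")
for hop in (20, 40, 100):
    cost = compute_ms_per_second(NON_STREAM_QUANT_MS, hop)
    print(f"non-streaming, {hop} ms hop: {cost:.0f} ms/s")
```

Widening the hop cuts the non-streaming cost (26 ms/s at a 100 ms hop) but is exactly where the risk of missing the optimal recognition position comes in.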

PS: if I had upped the threshold to just `kw_prob > 0.999915`, it would give zero false positives (no triggers) in the 100 hours for 4.21% false negatives (missed KWs). That's what I am working on: if I use on-device training on KWs captured in use, then I can likely retain close to that level of false positives whilst greatly reducing false negatives.


That's what is in the pipeline, anyway.