Its sort of strange but the 30k bc_resnet_2 model has gone strangely round circle as Esspressif are using https://arxiv.org/pdf/1811.07684.pdf aka Alice Coucke, Mohammed Chlieh, Thibault Gisselbrecht, David Leroy,Mathieu Poumeyrol, Thibaut, Lavril, Snips, Paris, France according to the Esspressif documentation.

There is a working framework in the kws_streaming repo from Google Research.

Esspressif seem to have a channel(s) input vector which is near what I was planning myself as was expecting 2x instances, but it does seem to look like they use a single model with a channel dimention, which I didn’t think about.

If you don’t make the assumption you need a huge dataset of all voice-types for the KW of choice so that any utterance of that KW will trigger, or that you need a KWS at all downstream.

Via enrollment you can create what I have previously called a bad VAD on a small dataset of captured voice and I called it ‘Bad’ as its overfitted to enrollment voice, so only accepts the enrollment voice, but in this case isn’t ‘Bad’ as provides one way for Personalised VAD that combined with an Upstream KWS overall provides more accuracy.

VAD with shorter frame / window has less parameters and is considerabilly lighter, but the same principle goes with a ‘Bad KWS’ that is enrollment based that has a double factor check with upstream models.

There are many ways to attack this and lighter weight schemes in conjunction with upstream methods can provide some of the cutting edge Targetted voice extraction that the likes of Google do accomplished by lateral thought more than Google science

Its not low-cost hardware or opensource its non Rtos / non DSP applications SoC’s such as the Pi where schedulers make it near impossible to guarantee exact timings. Low-cost micro-controllers because of being an RTOS don’t suffer the same way as the state cycle should always be the same.

When Esspressif added vector instructions with the ESP32-S3 it has elevated a low cost microcontroller very much into this realm and why Essp[ressif have frameworks, where the previous minus vector instructions struggled.

Google & Amazon run on lowcost micro-controllers likely some form of Arm-M style because they have created and researched thier own DSP libs as have Xmos and they are no different or better its just your paying for closed source software embedded in silicon at a premium.

Even then often implementations lack correaltion as KWS/VAD should be synced to direction, but most of the time its the Pi that is the problem not the Algs themselves.

Google likely doesn’t even employ beamforming at all and from testing the Nest products are slightly better in noise than the Gen4 Alexa’s complex 6mic, that I presume Google have concrete patents for and deliberately force Amazons more expensive hardware platform.

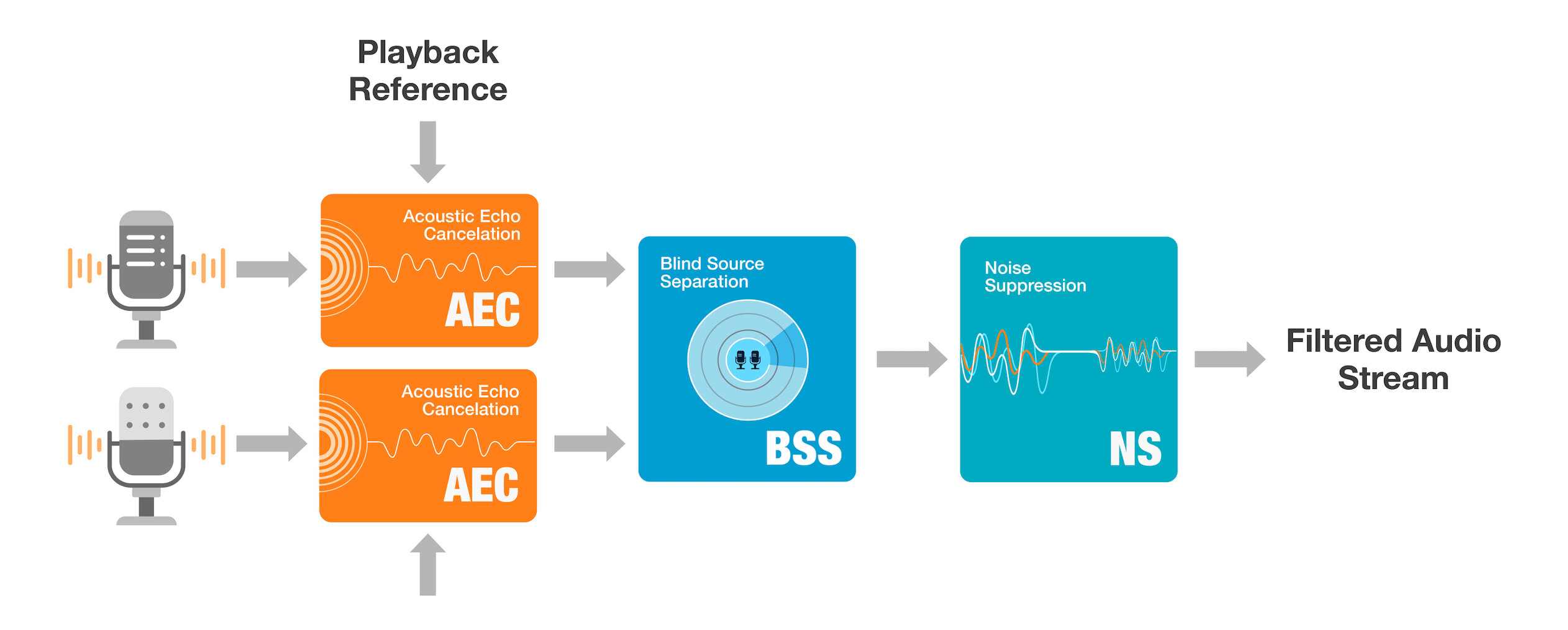

Speex AEC and this is what I find so strange, is that I seem to be the only one in the community to tackle low level DSP and have a working beamformer and know the technology well and why I don’t really think its the way forward, but it can definately be used.

But GitHub - voice-engine/ec: Echo Canceller, part of Voice Engine project did a great job on AEC and @synesthesiam has created a fork for me as I can push a few changes to add a few more cli parameters, to make config for environment a tad easier.

For beamforming have a look at GitHub - StuartIanNaylor/2ch_delay_sum: 2 channel delay sum beamformer as here my name is in reverse as gmail had already been taken.

It writes the current TDOA to /tmp/ds-out watch -n 0.1 cat /tmp/ds-out to monitor but likely should store a buffer of TDOA so on KW hit you can fix the beam by writing the avg TDOA to /tmp/ds-in and delete the file to clear.

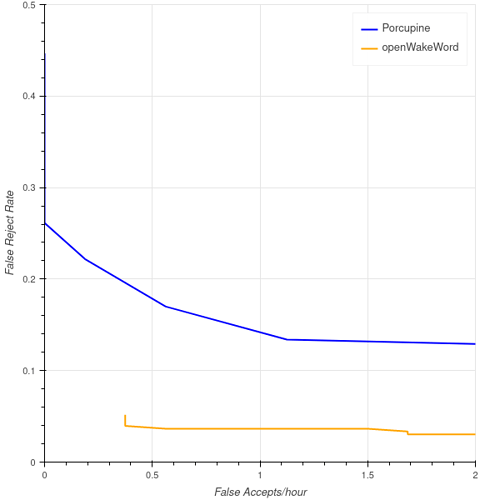

Generally when going over 33% SNR unfiltered recognition starts to degrade (The MFCC is less clear) and you can get higher levels of SNR but false positives/negatives start to steeply climb, but there are some great filters that if the datasets are mixed with noise and then filtered before training, the filter signature will be recognised (Build the filter into the model).

This is something that has always been missing as we do have that opensource with DTLN and the awesome RTXvoice like Deepfilter.net but we have to train in the filter into the dataset, but if you do especially with Deepfilter.net you can achieve crazy levels of SNR its just a shame its LadSpa plugin is single thread only, but a base with an RK3588 or above as said is pretty amazing DTLN less so but does have much less load and far more Pi4 friendly.

We have always had many solutions, AEC & Beamforming but as a community its lacked ability, resources and will and to generally leave this open to any filter and bring your own DSP & Mic, but unfortunately for a voice assistant input DSP audio of a closed circuit of dictate, as a solution is actually far superior and could exist, but seems unable to co-ordinate. That closed loop of cheap hardware adds a big advantage to commercial hardware, that models are specifically trained for.

They exist, so do others, but complementary ASR/KWS models do not exist and in some cases without they can degrade recognition.