In each folder there is a ‘model_summary.txt’

Model: "model_1"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_audio (InputLayer) [(1, 320)] 0 []

speech_features (SpeechFeature (1, 1, 20) 0 ['input_audio[0][0]']

s)

tf_op_layer_ExpandDims (Tensor (1, 1, 20, 1) 0 ['speech_features[0][0]']

FlowOpLayer)

stream (Stream) (1, 1, 18, 16) 160 ['tf_op_layer_ExpandDims[0][0]']

stream_1 (Stream) (1, 1, 16, 16) 3856 ['stream[0][0]']

reshape (Reshape) (1, 1, 256) 0 ['stream_1[0][0]']

tf_op_layer_streaming/gru_1/Sq [(1, 256)] 0 ['reshape[0][0]']

ueeze (TensorFlowOpLayer)

gru_1input_state (InputLayer) [(1, 256)] 0 []

tf_op_layer_streaming/gru_1/ce [(1, 768)] 0 ['tf_op_layer_streaming/gru_1/Squ

ll/MatMul (TensorFlowOpLayer) eeze[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 768)] 0 ['gru_1input_state[0][0]']

ll/MatMul_1 (TensorFlowOpLayer

)

tf_op_layer_streaming/gru_1/ce [(1, 768)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/BiasAdd (TensorFlowOpLayer) l/MatMul[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 768)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/BiasAdd_1 (TensorFlowOpLaye l/MatMul_1[0][0]']

r)

tf_op_layer_streaming/gru_1/ce [(1, 256), 0 ['tf_op_layer_streaming/gru_1/cel

ll/split (TensorFlowOpLayer) (1, 256), l/BiasAdd[0][0]']

(1, 256)]

tf_op_layer_streaming/gru_1/ce [(1, 256), 0 ['tf_op_layer_streaming/gru_1/cel

ll/split_1 (TensorFlowOpLayer) (1, 256), l/BiasAdd_1[0][0]']

(1, 256)]

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/add_1 (TensorFlowOpLayer) l/split[0][1]',

'tf_op_layer_streaming/gru_1/cel

l/split_1[0][1]']

gru_1 (GRU) (1, 1, 256) 394752 ['reshape[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/Sigmoid_1 (TensorFlowOpLaye l/add_1[0][0]']

r)

stream_2 (Stream) (1, 256) 0 ['gru_1[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/add (TensorFlowOpLayer) l/split[0][0]',

'tf_op_layer_streaming/gru_1/cel

l/split_1[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/mul (TensorFlowOpLayer) l/Sigmoid_1[0][0]',

'tf_op_layer_streaming/gru_1/cel

l/split_1[0][2]']

dropout (Dropout) (1, 256) 0 ['stream_2[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/Sigmoid (TensorFlowOpLayer) l/add[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/add_2 (TensorFlowOpLayer) l/split[0][2]',

'tf_op_layer_streaming/gru_1/cel

l/mul[0][0]']

dense (Dense) (1, 128) 32896 ['dropout[0][0]']

data_frame_1input_state (Input [(1, 640)] 0 []

Layer)

lambda_8 (Lambda) (1, 320) 0 ['input_audio[0][0]']

stream/ExternalState (InputLay [(1, 3, 20, 1)] 0 []

er)

stream_1/ExternalState (InputL [(1, 5, 18, 16)] 0 []

ayer)

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/sub (TensorFlowOpLayer) l/Sigmoid[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/Tanh (TensorFlowOpLayer) l/add_2[0][0]']

stream_2/ExternalState (InputL [(1, 1, 256)] 0 []

ayer)

dense_1 (Dense) (1, 256) 33024 ['dense[0][0]']

tf_op_layer_streaming/speech_f [(1, 320)] 0 ['data_frame_1input_state[0][0]']

eatures/data_frame_1/strided_s

lice (TensorFlowOpLayer)

lambda_7 (Lambda) (1, 320) 0 ['lambda_8[0][0]']

tf_op_layer_streaming/stream/s [(1, 2, 20, 1)] 0 ['stream/ExternalState[0][0]']

trided_slice (TensorFlowOpLaye

r)

tf_op_layer_streaming/stream_1 [(1, 4, 18, 16)] 0 ['stream_1/ExternalState[0][0]']

/strided_slice (TensorFlowOpLa

yer)

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/mul_1 (TensorFlowOpLayer) l/Sigmoid[0][0]',

'gru_1input_state[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/mul_2 (TensorFlowOpLayer) l/sub[0][0]',

'tf_op_layer_streaming/gru_1/cel

l/Tanh[0][0]']

tf_op_layer_streaming/stream_2 [(1, 0, 256)] 0 ['stream_2/ExternalState[0][0]']

/strided_slice (TensorFlowOpLa

yer)

dense_2 (Dense) (1, 7) 1799 ['dense_1[0][0]']

tf_op_layer_streaming/speech_f [(1, 640)] 0 ['tf_op_layer_streaming/speech_fe

eatures/data_frame_1/concat (T atures/data_frame_1/strided_slice

ensorFlowOpLayer) [0][0]',

'lambda_7[0][0]']

tf_op_layer_streaming/stream/c [(1, 3, 20, 1)] 0 ['tf_op_layer_streaming/stream/st

oncat (TensorFlowOpLayer) rided_slice[0][0]',

'tf_op_layer_ExpandDims[0][0]']

tf_op_layer_streaming/stream_1 [(1, 5, 18, 16)] 0 ['tf_op_layer_streaming/stream_1/

/concat (TensorFlowOpLayer) strided_slice[0][0]',

'stream[0][0]']

tf_op_layer_streaming/gru_1/ce [(1, 256)] 0 ['tf_op_layer_streaming/gru_1/cel

ll/add_3 (TensorFlowOpLayer) l/mul_1[0][0]',

'tf_op_layer_streaming/gru_1/cel

l/mul_2[0][0]']

tf_op_layer_streaming/stream_2 [(1, 1, 256)] 0 ['tf_op_layer_streaming/stream_2/

/concat (TensorFlowOpLayer) strided_slice[0][0]',

'gru_1[0][0]']

==================================================================================================

Total params: 466,487

Trainable params: 466,487

Non-trainable params: 0

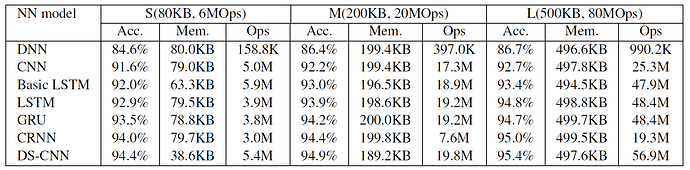

A rough guide to load is number of params where 466,487 is much more than say the 30k of a BC_resnet2 but like the relatively old Arm ML KWS report still much lighter than a GRU

I use an already built framework google-research/kws_streaming at master · google-research/google-research · GitHub Apache2.0 opensource

From

Why create your own when the biggest names in tech are doing it for us and releasing opensource?

The above is an ultra sexy accurate KWS but its a transformer so huge to train…

I ran GoogleKWS with these flags but much is my custom dataset as by far the biggest effect on accuracy is dataset whilst modern classification models all grasp for fractions of percent which is currently around the 97% level.

Namespace(data_url='', data_dir='/home/stuart/GoogleKWS/data2/', lr_schedule='exp', optimizer='adam', background_volume=0.1, l2_weight_decay=0.0, background_frequency=0.0, split_data=0, silence_percentage=10.0, unknown_percentage=10.0, time_shift_ms=100.0, sp_time_shift_ms=0.0, testing_percentage=10, validation_percentage=10, how_many_training_steps='20000,20000,20000,20000', eval_step_interval=400, learning_rate='0.001,0.0005,0.0001,0.00002', batch_size=100, wanted_words='heymarvin,noise,unk,h1m1,h2m1,h1m2,h2m2', train_dir='/home/stuart/GoogleKWS/models2/crnn/', save_step_interval=100, start_checkpoint='', verbosity=0, optimizer_epsilon=1e-08, resample=0.0, sp_resample=0.0, volume_resample=0.0, train=1, sample_rate=16000, clip_duration_ms=1000, window_size_ms=40.0, window_stride_ms=20.0, preprocess='raw', feature_type='mfcc_op', preemph=0.0, window_type='hann', mel_lower_edge_hertz=20.0, mel_upper_edge_hertz=7600.0, micro_enable_pcan=1, micro_features_scale=0.0390625, micro_min_signal_remaining=0.05, micro_out_scale=1, log_epsilon=1e-12, dct_num_features=20, use_tf_fft=0, mel_non_zero_only=1, fft_magnitude_squared=True, mel_num_bins=40, use_spec_augment=1, time_masks_number=2, time_mask_max_size=10, frequency_masks_number=2, frequency_mask_max_size=5, use_spec_cutout=0, spec_cutout_masks_number=3, spec_cutout_time_mask_size=10, spec_cutout_frequency_mask_size=5, return_softmax=0, novograd_beta_1=0.95, novograd_beta_2=0.5, novograd_weight_decay=0.001, novograd_grad_averaging=0, pick_deterministically=0, causal_data_frame_padding=0, wav=1, quantize=0, use_quantize_nbit=0, nbit_activation_bits=8, nbit_weight_bits=8, data_stride=1, restore_checkpoint=0, model_name='crnn', cnn_filters='16,16', cnn_kernel_size='(3,3),(5,3)', cnn_act="'relu','relu'", cnn_dilation_rate='(1,1),(1,1)', cnn_strides='(1,1),(1,1)', gru_units='256', return_sequences='0', stateful=0, dropout1=0.1, units1='128,256', act1="'linear','relu'", label_count=7, desired_samples=16000, window_size_samples=640, window_stride_samples=320, spectrogram_length=49, data_frame_padding=None, summaries_dir='/home/stuart/GoogleKWS/models2/crnn/logs/', training=True)