Thanks to @Daenara and @jrb5665, I was able to get this working. Since I already use Node-RED for my Home Assistant automations, I set it up like this:

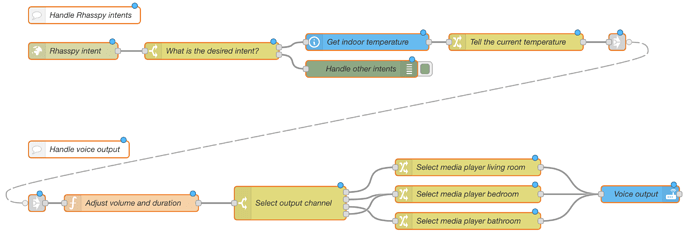

In Node-RED, I listen to all intents and dispatch them with a switch node. For the voice output, I prepare the text message and settings such as the speaker volume. Then I check the siteId to identify the Sonos speaker the output should go to, and call a Home Assistant service to trigger the actual output.
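For anyone who wants to follow the logic without importing the flow, here is a condensed sketch of the same steps as one plain JavaScript function (the language Node-RED function nodes use). It is an approximation, not the flow itself. It assumes the event has the shape Rhasspy sends on the intent websocket (`intent.name`, `siteId`), and `buildVoiceOutput`, `SPEAKERS` and `FALLBACK_SPEAKER` are hypothetical names. The speaker mapping, defaults and field names follow the flow posted in this thread.

```javascript
// Sketch of the Node-RED logic as one plain function (runnable under Node.js).
// Assumptions: `event` is the parsed JSON Rhasspy sends on
// ws://rhasspy:12101/api/events/intent (with `intent.name` and `siteId`), and
// `temperature` is the state read from sensor.mysensor_temperature.

// Satellite siteId -> Sonos media player; unknown sites fall back to the bedroom.
const SPEAKERS = new Map([
  ["sat-1", "media_player.livingroom"],
  ["sat-2", "media_player.bedroom"],
  ["sat-3", "media_player.bathroom"],
]);
const FALLBACK_SPEAKER = "media_player.bedroom";

// Returns the service data for script.sonos_say, or null for unhandled intents.
function buildVoiceOutput(event, temperature) {
  let text;
  let duration = "00:00:06"; // default, as in "Adjust volume and duration"
  switch (event.intent.name) {
    case "GetIndoorTemperature":
      // One decimal place, like $round(temperature, 1). JSONata rounds exact
      // halves to even, so ties may come out differently here.
      text = `The temperature is ${Math.round(temperature * 10) / 10} degrees`;
      duration = "00:00:07";
      break;
    default:
      return null; // the flow sends other intents to a debug node
  }
  return {
    sonos_entity: SPEAKERS.get(event.siteId) || FALLBACK_SPEAKER,
    volume: 0.2, // default volume used in the flow
    message: text,
    delay: duration,
    language: "en",
  };
}

const data = buildVoiceOutput(
  { intent: { name: "GetIndoorTemperature" }, siteId: "sat-3" },
  21.46
);
console.log(data.sonos_entity, "|", data.message);
// media_player.bathroom | The temperature is 21.5 degrees
```

A lookup table like `SPEAKERS` could also replace the switch node and the three change nodes with a single function node; keeping them as separate nodes, as the flow does, has the advantage that the routing stays visible in the editor.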

Note that in this setup the text-to-speech (TTS) is handled by Home Assistant, not by Rhasspy.
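Because the speech is produced on the Home Assistant side, the `script.sonos_say` script can be tested on its own through Home Assistant's REST API (`POST /api/services/<domain>/<service>`). The sketch below assumes Node.js 18+ (for the global `fetch`), a long-lived access token, and a Home Assistant instance at the hypothetical address `http://homeassistant:8123`. `buildSonosSayRequest` and `sonosSay` are hypothetical helper names.

```javascript
// Sketch: call script.sonos_say through Home Assistant's REST API
// (POST /api/services/<domain>/<service>), bypassing Node-RED and Rhasspy.
// Assumptions: Node.js 18+ for the global fetch(), Home Assistant at the
// hypothetical URL below, and a long-lived access token.
const HA_URL = "http://homeassistant:8123"; // hypothetical host name

// Build the request separately so it can be inspected without a network call.
function buildSonosSayRequest(token, data) {
  return {
    url: `${HA_URL}/api/services/script/sonos_say`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(data),
    },
  };
}

// Send the request; resolves to the list of states that changed during the call.
async function sonosSay(token, data) {
  const { url, init } = buildSonosSayRequest(token, data);
  const res = await fetch(url, init);
  if (!res.ok) {
    throw new Error(`Home Assistant returned HTTP ${res.status}`);
  }
  return res.json();
}
```

For example, `sonosSay(process.env.HA_TOKEN, { sonos_entity: "media_player.livingroom", volume: 0.2, message: "Hello", delay: "00:00:06", language: "en" })` sends the same fields the flow uses. If the speaker talks, the TTS side works and any remaining problem is in the Rhasspy/Node-RED part.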

For reference, here is an example export of my Node-RED flow:

```json
[{"id":"55acb4ad.c1540c","type":"websocket in","z":"7e208340.c2710c","name":"Rhasspy intent","server":"","client":"be111083.116b5","x":240,"y":288,"wires":[["3d3a362b.e5074a"]]},{"id":"3d3a362b.e5074a","type":"switch","z":"7e208340.c2710c","name":"What is the desired intent?","property":"intent.name","propertyType":"msg","rules":[{"t":"eq","v":"GetIndoorTemperature","vt":"str"},{"t":"eq","v":"OtherIntent","vt":"str"}],"checkall":"false","repair":false,"outputs":2,"x":488,"y":288,"wires":[["7c8de028.ba1ff"],["8aeb9326.c8f68"]]},{"id":"7c8de028.ba1ff","type":"api-current-state","z":"7e208340.c2710c","name":"Get indoor temperature","server":"84472145.6b711","version":2,"outputs":1,"halt_if":"","halt_if_type":"str","halt_if_compare":"is","entity_id":"sensor.mysensor_temperature","state_type":"num","blockInputOverrides":true,"outputProperties":[{"property":"temperature","propertyType":"msg","value":"","valueType":"entityState"},{"property":"sensordata","propertyType":"msg","value":"","valueType":"entity"}],"override_topic":false,"state_location":"payload","override_payload":"msg","entity_location":"data","override_data":"msg","x":766,"y":272,"wires":[["cc190d1b.91dea"]]},{"id":"cc190d1b.91dea","type":"change","z":"7e208340.c2710c","name":"Tell the current temperature","rules":[{"t":"set","p":"payload.siteId","pt":"msg","to":"siteId","tot":"msg"},{"t":"set","p":"payload.text","pt":"msg","to":"'The temperature is ' & $round(temperature, 1) & ' degrees'","tot":"jsonata"},{"t":"set","p":"voice.duration","pt":"msg","to":"00:00:07","tot":"str"}],"action":"","property":"","from":"","to":"","reg":false,"x":1032,"y":272,"wires":[["a9e0763b.179238"]]},{"id":"4c68d312.94da8c","type":"switch","z":"7e208340.c2710c","name":"Select output channel","property":"payload.siteId","propertyType":"msg","rules":[{"t":"eq","v":"sat-1","vt":"str"},{"t":"eq","v":"sat-2","vt":"str"},{"t":"eq","v":"sat-3","vt":"str"},{"t":"else"}],"checkall":"true","repair":false,"outputs":4,"x":628,"y":560,"wires":[["dc35fba0.c9db18"],["38f1c480.e3431c"],["295efd9c.0c02d2"],["38f1c480.e3431c"]]},{"id":"34973194.85ac6e","type":"api-call-service","z":"7e208340.c2710c","name":"Voice output","server":"1d26e5fb.67f02a","version":3,"debugenabled":false,"service_domain":"script","service":"sonos_say","entityId":"","data":"{\"sonos_entity\":\"{{ payload.media_player }}\",\"volume\":\"{{ voice.volume }}\",\"message\":\"{{ payload.text }}\",\"delay\":\"{{ voice.duration }}\",\"language\":\"en\"}","dataType":"json","mergecontext":"","mustacheAltTags":false,"outputProperties":[{"property":"payload","propertyType":"msg","value":"","valueType":"data"}],"queue":"none","x":1254,"y":544,"wires":[[]]},{"id":"38f1c480.e3431c","type":"change","z":"7e208340.c2710c","name":"Select media player bedroom","rules":[{"t":"set","p":"payload.media_player","pt":"msg","to":"media_player.bedroom","tot":"str"}],"action":"","property":"","from":"","to":"","reg":false,"x":946,"y":544,"wires":[["34973194.85ac6e"]]},{"id":"dc35fba0.c9db18","type":"change","z":"7e208340.c2710c","name":"Select media player living room","rules":[{"t":"set","p":"payload.media_player","pt":"msg","to":"media_player.livingroom","tot":"str"}],"action":"","property":"","from":"","to":"","reg":false,"x":946,"y":496,"wires":[["34973194.85ac6e"]]},{"id":"295efd9c.0c02d2","type":"change","z":"7e208340.c2710c","name":"Select media player bathroom","rules":[{"t":"set","p":"payload.media_player","pt":"msg","to":"media_player.bathroom","tot":"str"}],"action":"","property":"","from":"","to":"","reg":false,"x":946,"y":592,"wires":[["34973194.85ac6e"]]},{"id":"f4882818.311f38","type":"function","z":"7e208340.c2710c","name":"Adjust volume and duration","func":"if (msg.voice == null) {\n msg.voice = {};\n}\n\nif (msg.voice.volume == null || msg.voice.volume == \"\") {\n msg.voice.volume = 0.2;\n}\n\nif (msg.voice.duration == null || msg.voice.duration == \"\") {\n msg.voice.duration = \"00:00:06\";\n}\n\nreturn msg;","outputs":1,"noerr":0,"initialize":"","finalize":"","libs":[],"x":344,"y":560,"wires":[["4c68d312.94da8c"]]},{"id":"8aeb9326.c8f68","type":"debug","z":"7e208340.c2710c","name":"Handle other intents","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"true","targetType":"full","statusVal":"","statusType":"auto","x":756,"y":320,"wires":[]},{"id":"fa26951b.0c3908","type":"comment","z":"7e208340.c2710c","name":"Handle voice output","info":"","x":250,"y":464,"wires":[]},{"id":"5a6b9bb6.e7e224","type":"comment","z":"7e208340.c2710c","name":"Handle Rhasspy intents","info":"","x":260,"y":224,"wires":[]},{"id":"abf736cb.f508e8","type":"link in","z":"7e208340.c2710c","name":"Handle voice output","links":["a9e0763b.179238"],"x":175,"y":560,"wires":[["f4882818.311f38"]]},{"id":"a9e0763b.179238","type":"link out","z":"7e208340.c2710c","name":"","links":["abf736cb.f508e8"],"x":1213,"y":272,"wires":[]},{"id":"be111083.116b5","type":"websocket-client","path":"ws://rhasspy:12101/api/events/intent","tls":"","wholemsg":"true"},{"id":"84472145.6b711","type":"server","name":"Haselwart","version":1,"legacy":false,"addon":false,"rejectUnauthorizedCerts":true,"ha_boolean":"y|yes|true|on|home|open","connectionDelay":true,"cacheJson":true},{"id":"1d26e5fb.67f02a","type":"server","name":"Home Assistant","version":1,"legacy":false,"addon":false,"rejectUnauthorizedCerts":true,"ha_boolean":"y|yes|true|on|home|open","connectionDelay":true,"cacheJson":true}]
```

This is what it looks like: