Currently Jaco only encrypts the messages themselves, so skills can’t read the content, but they can still subscribe to topics and see that something was sent. I’m not sure which metadata is included in the messages, but that would be readable too. Each skill has its own topic for sending text that the system should speak. Sessions are also handled differently, in that I skipped them completely. I didn’t like Snips’ approach here, because some of my skills need long-running sessions: they make a web or hardware request that can take half a minute. So currently I’m just blocking wake-word detection while the user or the system is speaking.
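To make the "block wake-word detection while someone is speaking" idea concrete, here is a minimal sketch. It is not Jaco’s actual code; the class and method names are made up for illustration, and the real system would toggle this from its own messages.

```python
class WakeWordGate:
    """Hypothetical helper: tracks who is speaking and whether detection may run."""

    def __init__(self):
        # Names of the parties currently speaking, e.g. "user" or "system".
        self._speakers = set()

    def start_speaking(self, who):
        self._speakers.add(who)

    def stop_speaking(self, who):
        # discard() does not raise if the speaker was never registered.
        self._speakers.discard(who)

    @property
    def detection_enabled(self):
        # Wake-word detection is only allowed while nobody is speaking.
        return not self._speakers
```

A long-running skill does not need a session here: detection simply stays off until both the user and the system have finished speaking.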

The encryption itself is done with Python’s cryptography library, and you only need to share the key string to decrypt the messages. So I think it’s quite easy to implement yourself if you don’t want to use Jaco’s tools, which do everything automatically. I also don’t think it causes a big performance drop.
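As a rough idea of what implementing this yourself could look like, here is a sketch using Fernet from the cryptography library. That Fernet is the right recipe is an assumption on my side for illustration (check Jaco’s tools for the exact scheme), and the helper names are hypothetical.

```python
from cryptography.fernet import Fernet, InvalidToken

# The shared secret: a url-safe base64 key that can be passed around as a string.
key_string = Fernet.generate_key().decode("ascii")


def encrypt_payload(key, text):
    """Encrypt a text payload before publishing it (hypothetical helper)."""
    return Fernet(key).encrypt(text.encode("utf-8"))


def decrypt_payload(key, token):
    """Decrypt a received payload; raises InvalidToken if the key is wrong."""
    return Fernet(key).decrypt(token).decode("utf-8")
```

Anyone holding `key_string` can read the payload, while a subscriber without it only sees that an opaque token was sent on the topic.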

But I know this is not perfect, and Mosquitto ACLs could be a good, maybe better, solution for this. I’m open to more elegant suggestions here.

My plan for some point in the future is to drop MQTT completely and use a messaging system that runs in peer-to-peer mode, removing the central MQTT server. If you have an external system like HomeAssistant, my idea is that you use a skill that serves as the communication interface. What do you think about this?

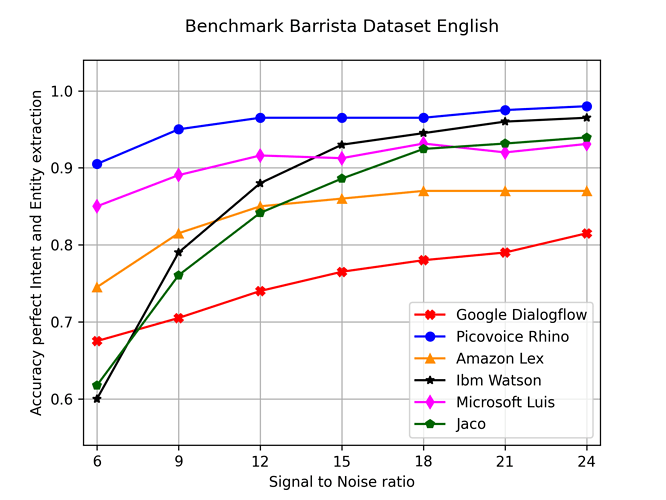

For me this was more of a problem for the future, because my current priority is to further improve recognition performance and to support more languages.

Did you test whether it also runs on a Pi3? And roughly how long did training the voice take?

Your larynx container has a nice web interface, really good for a quick test.

Regarding the intent definition syntax, what do you think of something like this:

## lookup:city

city.txt <- text file with one city per line

## intent:travel_duration

- How long does it take to drive from [Augsburg](skill_travel_city:city_name_1) to [Berlin](skill_travel_city:city_name_2) by (train|car)?

`Comments like this`

`Rasa, and I think Rhasspy too, use something with {} brackets for named slots, but I think this breaks the style somewhat, because you don't get the blue link coloring then`

## synonyms

syn.json <- Currently I'm using a list like the one for the intents above, but I think this would be more readable.

I would say we are quite free in creating a syntax and can write conversion scripts for the different tools. I like your (option1|option2) syntax, but it’s not supported by Rasa, so I would have to replace it with two sentences anyway.

We can focus on readability this way.
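Such a conversion step for the (option1|option2) syntax could look roughly like this. It is only a sketch under my own assumptions: alternatives are not nested, and the function name is made up.

```python
import itertools
import re

# Matches "(a|b|...)" groups that contain at least one "|".
# Entity links like "(skill_travel_city:city_name_1)" have no "|" and are left alone.
ALTERNATIVES = re.compile(r"\(([^()|]+(?:\|[^()|]+)+)\)")


def expand_alternatives(sentence):
    """Expand every (a|b) group into separate full sentences."""
    # split() with one capture group yields: text, group, text, group, ..., text
    parts = ALTERNATIVES.split(sentence)
    choices = [p.split("|") if i % 2 else [p] for i, p in enumerate(parts)]
    return ["".join(combo) for combo in itertools.product(*choices)]
```

For a sentence ending in `by (train|car)?` this gives one sentence ending in `by train?` and one ending in `by car?`, which is the form a tool without alternative support needs.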

Currently Jaco collects all dialog files from the skills and merges them in one place, with skill-name prefixes so you can use them globally.

Those intent files are used later on to create a text file with possible sentences users can say, which is used to train the language model. I think Rhasspy uses a similar approach here, which should make replacing the STT service with a different one quite easy.
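To illustrate the step from intent examples to plain sentences for the language model, here is a small sketch. It assumes the `[value](entity)` markup from the proposal above, and the function name is hypothetical, not what Jaco actually calls it.

```python
import re

# Matches "[Augsburg](skill_travel_city:city_name_1)" and captures "Augsburg".
ENTITY = re.compile(r"\[([^\]]+)\]\([^)]+\)")


def to_plain_sentence(example):
    """Turn one intent example line into the sentence a user would say."""
    # Drop the leading list marker "- " and surrounding whitespace.
    text = example.lstrip("- ").strip()
    # Replace each entity link with just its example value.
    return ENTITY.sub(r"\1", text)
```

Writing one such sentence per line gives the kind of text file the language model can be trained on, independent of which STT service consumes it.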

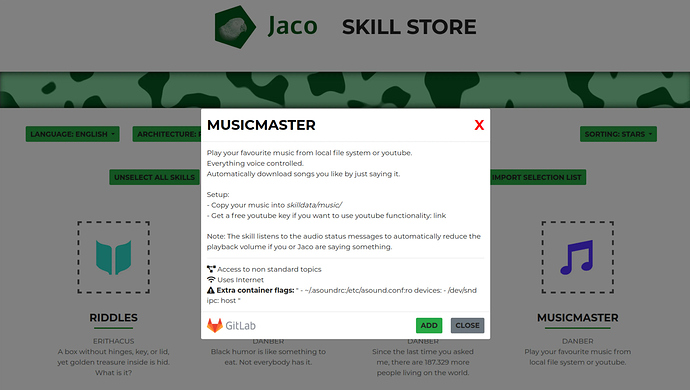

Your skill store also looks awesome; this is definitely something that could benefit the whole community.

) and add SSML support to Rhasspy. Possibly afterwards, but I would not count on it.
