Look, you can be a Pi fan and say it is affordable, and yes, the ESP32-S3-Box works. Because it has an audio pipeline containing AEC + BSS, in tests it works better than a Pi with Rhasspy, which lacks even simple audio processing.

It's not just Rhasspy, as all the Linux hobby projects have been missing the essential initial audio processing that is an absolute must for what is considered basic voice AI standards.

It is not affordable as a voice AI, as Mycroft clearly demonstrates with a $300 unit that offers little over a $50 unit and is completely inferior to $50 to $100 commercially available products. That is the reality, and yeah, some hobbyists will build for fun, but that is all they are doing.

There are loads of projects that the Pi does really well, but the lower end, from the original Zero to even the Pi3, running Python for voice AI doesn’t work well, for specific reasons I often mention because I am being objectively honest and not just a fanboy.

You would not run a voice assistant and a full-featured home automation system on the same box, because they are functionally distinct and each benefits from running on hardware that suits it.

Cars don’t have toilets because generally it's considered there are better places to take a dump, and bloating a singular system is generally bad practice that will often land you in the shit.

But none of the above is the question or has anything to do with what I was asking. I was presuming, because of Mycroft and as synesthesiam confirmed, that the focus is now a Pi4 2GB, which does have far more processing power than the initial Zero, and I was asking if there is anything new in the pipeline that is more capable than a system that had very modest roots.

With TTS we have seen this with Larynx, which really needs a minimum of that 2GB Pi4 running 64-bit to run well, and all I am doing is asking if there is anything planned.

The ESP32-S3-Box was purely a demonstration that ASR models are generally getting smaller, and I have no idea which models synesthesiam thinks will not fit on a 2GB Pi4.

I mentioned Flashlight to dodge giving my opinion that VOSK is now a better option. Other elements of Rhasspy have evolved while the core ASR has pretty much stayed the same, and elsewhere rapid changes are being made.

So how are you doing, Donburch, with your own Rhasspy Pi? Is it ready for real-world use? I ask because I don’t make any false claims about the ESP32-S3-Box, as some others do with certain hardware and infrastructure.

The thread is ‘The Future of Rhasspy’, and I was asking because in certain respects it has stayed static.

It will be interesting to hear what Upton says on the 28th (“The Pi Cast Celebrates 10 Years of Raspberry Pi: Episodes With LadyAda, Eben Upton, and More”, Tom's Hardware). I am hoping we might get something like a Pi4A, where the A is AI and, minus USB3, the spare PCIe lane brings on board a Raspberry NPU, as the Pi is starting to lose huge ground in this area.

But that is just discussing the future and what are likely becoming essential requirements.

I personally feel the big processes of voice AI, TTS & STT, can be shared centrally, be it on x86 with a GPU or on what I have preordered, a Rock5 with a 6 TOPS NPU, serving many ears of distributed room KWS to finally get real-world use at low cost.

If an application SoC is not a great platform for audio DSP processing, then partition the processing to what each platform is great for. That is how I see the Raspberry Pi and satellite ESP32-S3 KWS: use both for what they are good at and not for what they are not.
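To make the split concrete, here is a rough sketch of the central side only. It assumes each room satellite reports a wake word confidence score and the central box simply takes audio from whichever room heard it best. The `WakeEvent` type, the `pick_satellite` function and the 0.5 threshold are all made up for illustration and are not part of Rhasspy or the ESP32-S3-Box software.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class WakeEvent:
    """Hypothetical message a room satellite sends when its KWS fires."""
    room: str     # which satellite heard the keyword
    score: float  # KWS confidence reported by that satellite, 0.0 to 1.0


def pick_satellite(events: Iterable[WakeEvent], threshold: float = 0.5) -> Optional[str]:
    """Return the room whose satellite heard the wake word best, or None.

    The threshold is an arbitrary illustrative value, not a tuned one.
    """
    candidates = [e for e in events if e.score >= threshold]
    if not candidates:
        return None
    # The central STT/TTS box would then take the audio stream from this room only.
    return max(candidates, key=lambda e: e.score).room


events = [
    WakeEvent("kitchen", 0.62),
    WakeEvent("lounge", 0.91),
    WakeEvent("hall", 0.30),
]
print(pick_satellite(events))
```

The satellites do the audio DSP and KWS they are good at, and the central box only has to choose a stream and run the heavy STT and TTS.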

I am also waiting for the Radxa Zero2, which has a 5 TOPS NPU, but until we get the cost effectiveness of a Pi with an NPU, the Pi is currently not a great platform for AI unless the load is light, and that is just fact.

Dan Povey talk from 04:38:33 “Recent plans and near-term goals with Kaldi”

https://live.csdn.net/room/wl5875/JWqnEFNf

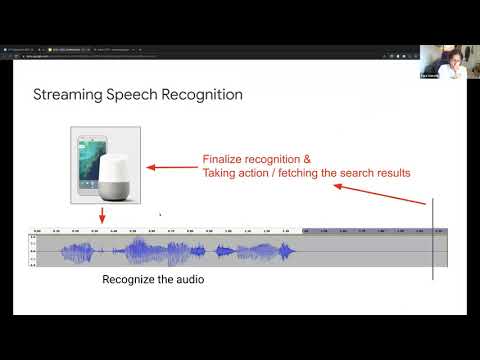

Tara Sainath: End-to-end (E2E) models have become a new paradigm shift in the ASR community

Do you have anything to share that could be the future of Rhasspy or any cost effective VoiceAI?

). I’ve already started working a new wakeword system (based on