Hi all,

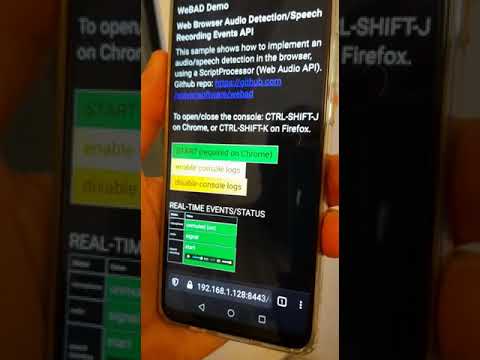

Just sharing my draft project with you:

I’m experimenting with how to use the browser as a voice interface for … anything.

Of course, in the Rhasspy realm, the browser could act as a perfect “satellite” device/frontend.

Below is a quick video demo of one of the voice interactions, which I call “continuous listening”:

I’d appreciate any kind of contribution or discussion. Reply here or, for general open points, feel free to open a discussion topic here: solyarisoftware/WeBAD · Discussions · GitHub
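
For readers wondering how “continuous listening” can decide when someone starts and stops talking, here is a minimal TypeScript sketch of a generic volume-threshold detector. It is not WeBAD’s actual code: the `VolumeDetector` class, the RMS threshold and the frame counts are hypothetical values you would tune for your microphone and room. Each frame’s RMS volume is compared against a threshold, and several consecutive loud or quiet frames are required before the state flips, so short clicks or brief pauses between words don’t toggle it.

```typescript
// Hypothetical tuning values: adjust for your microphone and room.
const SPEECH_RMS_THRESHOLD = 0.02; // frame RMS above this counts as "signal"
const MIN_SPEECH_FRAMES = 3;       // consecutive loud frames needed to start speech
const MAX_SILENCE_FRAMES = 10;     // consecutive quiet frames needed to end speech

type VadEvent = "speechstart" | "speechstop" | null;

// Root-mean-square volume of one frame of samples in the range [-1, 1].
function frameRms(samples: Float32Array): number {
  if (samples.length === 0) return 0;
  let sum = 0;
  for (let i = 0; i < samples.length; i++) sum += samples[i] * samples[i];
  return Math.sqrt(sum / samples.length);
}

class VolumeDetector {
  private speaking = false;
  private signalRun = 0;  // consecutive frames above the threshold
  private silenceRun = 0; // consecutive frames at or below the threshold

  // Feed one frame; returns an event only when the speaking state changes.
  process(samples: Float32Array): VadEvent {
    const loud = frameRms(samples) > SPEECH_RMS_THRESHOLD;
    if (loud) {
      this.signalRun++;
      this.silenceRun = 0;
    } else {
      this.silenceRun++;
      this.signalRun = 0;
    }
    if (!this.speaking && this.signalRun >= MIN_SPEECH_FRAMES) {
      this.speaking = true;
      return "speechstart";
    }
    if (this.speaking && this.silenceRun >= MAX_SILENCE_FRAMES) {
      this.speaking = false;
      return "speechstop";
    }
    return null;
  }
}
```

In a browser, each frame could come from an `AnalyserNode` via `getFloatTimeDomainData()`, polled with `requestAnimationFrame`; a `speechstart` event would start recording and `speechstop` would end it. Counting frames keeps the logic independent of timing, so how many milliseconds the counts correspond to depends on how often you poll.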

cheers

giorgio

Hi, when I run the demo page I get the error ‘Stream generation failed’. What am I doing wrong?

And how can I use this with Rhasspy?

Hi Dariel,

Which browser? Which OS? BTW, I suggest using Firefox!

You see this error in the console, right? At which line of code?

You do have a microphone and it’s enabled, right? See, for example: https://github.com/cwilso/volume-meter/issues/9
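
As a rough way to narrow down microphone errors like this one, here is a TypeScript sketch that requests the microphone with the standard `navigator.mediaDevices.getUserMedia()` API and maps the common `DOMException` names to a hint. The names `openMicrophone` and `micErrorHint` are hypothetical, and the messages are only a short summary of each error. Note that browsers expose `getUserMedia` only in secure contexts (HTTPS or `localhost`), so a page opened over plain HTTP from another machine can’t get a microphone stream at all.

```typescript
// Hypothetical helper: map common getUserMedia DOMException names to a hint.
function micErrorHint(name: string): string {
  switch (name) {
    case "NotAllowedError":
      return "Microphone permission was denied by the user or browser settings.";
    case "NotFoundError":
      return "No microphone was found.";
    case "NotReadableError":
      return "The microphone could not be read (for example, another application is using it).";
    case "SecurityError":
      return "Media access is disabled for this page by the browser.";
    default:
      return `Unexpected error: ${name}`;
  }
}

// Hypothetical wrapper: returns the microphone stream, or null after logging a hint.
async function openMicrophone(): Promise<MediaStream | null> {
  // getUserMedia is only exposed in secure contexts (HTTPS or localhost).
  if (!window.isSecureContext || !navigator.mediaDevices?.getUserMedia) {
    console.error("getUserMedia unavailable: serve the page over HTTPS or from localhost.");
    return null;
  }
  try {
    return await navigator.mediaDevices.getUserMedia({ audio: true });
  } catch (err) {
    const name = err instanceof DOMException ? err.name : String(err);
    console.error(micErrorHint(name));
    return null;
  }
}
```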

The idea is to use the web browser as a voice interface. Audio I/O messages could be sent to the Rhasspy base station, no? See: https://github.com/solyarisoftware/webad#architecture
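
One possible way to get audio from the browser to a Rhasspy base station is Rhasspy’s HTTP API, which accepts a WAV file POSTed to `/api/speech-to-intent` and returns the recognized intent as JSON. The TypeScript sketch below assumes that endpoint, Rhasspy’s default web port 12101, and a hypothetical host name `rhasspy.local`; `encodeWav` and `sendToRhasspy` are illustrative names, not part of WeBAD. It packs mono float samples (for example, the frames collected between speech start and stop) into a 16-bit PCM WAV file and posts it.

```typescript
// Encode mono Float32 PCM samples (range [-1, 1]) as a 16-bit PCM WAV file.
function encodeWav(samples: Float32Array, sampleRate: number): ArrayBuffer {
  const bytesPerSample = 2;
  const dataSize = samples.length * bytesPerSample;
  const buffer = new ArrayBuffer(44 + dataSize); // 44-byte header + sample data
  const view = new DataView(buffer);
  const writeAscii = (offset: number, text: string) => {
    for (let i = 0; i < text.length; i++) view.setUint8(offset + i, text.charCodeAt(i));
  };
  writeAscii(0, "RIFF");
  view.setUint32(4, 36 + dataSize, true);                // RIFF chunk size
  writeAscii(8, "WAVE");
  writeAscii(12, "fmt ");
  view.setUint32(16, 16, true);                          // fmt chunk size for PCM
  view.setUint16(20, 1, true);                           // audio format 1 = PCM
  view.setUint16(22, 1, true);                           // channels: mono
  view.setUint32(24, sampleRate, true);                  // samples per second
  view.setUint32(28, sampleRate * bytesPerSample, true); // byte rate
  view.setUint16(32, bytesPerSample, true);              // block align
  view.setUint16(34, 16, true);                          // bits per sample
  writeAscii(36, "data");
  view.setUint32(40, dataSize, true);
  for (let i = 0; i < samples.length; i++) {
    // Clamp, then scale to the signed 16-bit range (little-endian).
    const s = Math.max(-1, Math.min(1, samples[i]));
    view.setInt16(44 + i * 2, s < 0 ? s * 0x8000 : s * 0x7fff, true);
  }
  return buffer;
}

// Assumed endpoint and hypothetical host: POST the WAV and return Rhasspy's intent JSON.
async function sendToRhasspy(
  wav: ArrayBuffer,
  baseUrl = "http://rhasspy.local:12101",
): Promise<unknown> {
  const res = await fetch(`${baseUrl}/api/speech-to-intent`, {
    method: "POST",
    headers: { "Content-Type": "audio/wav" },
    body: wav,
  });
  if (!res.ok) throw new Error(`Rhasspy responded with HTTP ${res.status}`);
  return res.json();
}
```

Rhasspy’s speech models typically work with 16 kHz, 16-bit mono audio, while browsers usually record at 44.1 or 48 kHz, so resampling may be needed (check the Rhasspy docs). Two browser restrictions also apply: a cross-origin request needs Rhasspy (or a proxy in front of it) to allow CORS, and a page served over HTTPS can’t call a plain-HTTP Rhasspy URL because of mixed-content blocking.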

BTW, a star on GitHub is welcome

giorgio

Hi, I changed the server in npm from Express to the one you suggested and boom, it’s working.

> The idea is to use the web browser as a voice interface. Audio I/O messages could be sent to the Rhasspy base station, no?

Yes, that’s why I’m interested in this project: I want to use wall-mounted tablets to show the dashboard and use their built-in mics to send voice commands to Rhasspy. Sorry for my ignorance, but how can I integrate this with Rhasspy?

I was looking for a way to do that and the only info I found was this topic. Thanks for building this.
