Well, let’s agree to disagree there, but I actually agree with your other remark that for a $15 price range we should probably look at embedded solutions. If you have some input there, I would love to hear it in the other forum topic.

I don’t think the embedded devices cut the mustard anymore as they are of a similar cost to many dedicated Socs of much more flexibility and capability.

You can take an embedded direction with those socs and create a Buildroot or Yocto ‘embedded’ solution as there is no advantage in cost with say what might be used such as the ESP32-LyraT-Mini.

That was my point as the new function specific low cost Arm boards can be specific embedded solutions and the advantages of a general purpose OS is not so much of an advantage.

If you have a matrix voice its great that a repo is supplied but to purchase one now @$75 with what is available is an extremely dubious choice in terms of $.

MATRIX Voice ESP32 Version (WiFi/BT/MCU)

They are more of a legacy to all what was available a year or more ago when Snips was around that is no longer true now and definitely not of the future.

I’ve tested FFTW3 in place of KISS FFT and there was no noticeable change in the CPU usage on a Pi Zero (still 45% CPU min only for MFCCs).

45% min is pretty hefty but good to know you got it working as FFTW3 if compiled for A53 should use neon from memory.

Anyone got any clues to interfacing Julia libs to Python?  But got a feeling could even be worse.

But got a feeling could even be worse.

I was able to get the CPU % down significantly and have Raven run pretty well on a Pi Zero by:

- implementing @fastjack’s MFCC optimization (or some flavor of it)

- MFCC is calculated once on an incoming audio chunk and the matrix is reused when sliding windows

- Pre-computing the DTW distance matrix using scipy.spatial.distance.cdist

- This made a huge difference in speed, rather than computing the cosine distances as needed inside the DTW for loops

One area where the Zero still as problems is when there’s a lot of audio that activates the VAD but doesn’t match a template. This gets buffered and processed, but can clog it up for a few seconds as the DTW calculations run.

A way to help might be to say if so many frames in a row have very low DTW probabilities (< 0.2) that audio is dropped for a little while. Any thoughts on this? Maybe it will be moot once the DTW calculations are externalized.

MFCC doesn’t seem to be the bottleneck so far.

Thinking about it 45% isn’t all that bad on a Pi Zero as its just short of horsepower.

Acoustic EC on the Pi3 is really heavy but actually doesn’t matter at all because of the diversification of process.

It only runs heavy when audio is playing, doesn’t run on input and TTS is generally the end result of process.

So it doesn’t matter as it runs at alternative timeslots to other heavy process and diversifies load.

How much load does RhasspySilence produce on its own on a zero as isn’t VAD just the summation of a couple of successive frames of the same FFT frame routine that is duplicated in MFCC creation?

You can actually see the spectra in a MFCC so surely you can grab the spectra bins of the low and high pass filter that VAD uses from MFCC instead of WebRtcVad and log the sum there and do away with RhasspySilence at least on a Zero?

Then use diversification and turn off MFCC/VAD after silence until the audio capture is processed.

You don’t have to halt after silence but I am pretty sure the single frame MFCC calculation could make an excellent successive VAD detection with hardly any more load than MFCC itself.

What is the load of RhasspySilence or is it pretty minimal anyway?

Also again from the above it seems like MFCC is batched and searched after whilst like VAD should be frame by frame?

As if your DTW probabilities don’t fit for X series of frames then doesn’t DTW restart again on the current frame?

With webrtcvad I was seeing maybe a millisecond to process a 30 ms chunk of audio.

I’m betting there’s some savings that could be had by combining the VAD, MFCC, and DTW steps. MFCC/DTW are done on “template-sized” chunks of audio (the average length of your wake word), whereas webrtcvad mandates 10, 20, 30 ms chunks.

Some ways I could think to save CPU:

- Use VAD to decide whether a whole template-sized chunk is worth processing

- I wait to process audio until VAD says there’s speech, but if the majority of a template-sized chunk is silence I will still process it (MFCC/DTW)

- Re-use FFT from VAD in MFCC calculation

- Would work well if they’re processing the same chunk size

- Abort DTW calculation early if it can’t reach threshold

- Not sure if this is possible, but I would guess you could tell at some point in DTW that the final distance can’t ever get above threshold. In that case, abort the rest of the calculation.

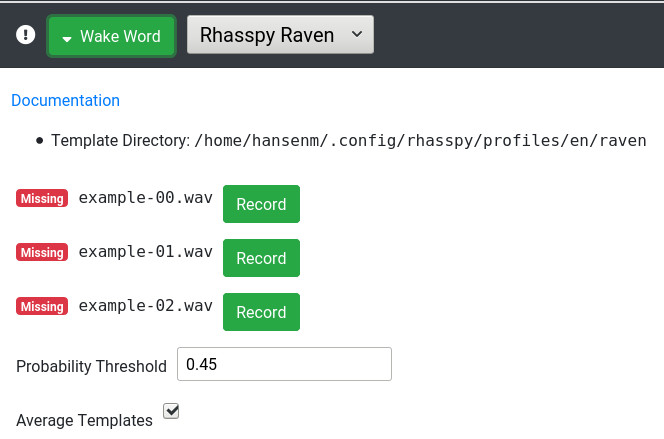

Just so the new Raven doc : https://rhasspy.readthedocs.io/en/latest/wake-word/#raven

Does it support multiple personal wakewords ? How to set them ?

Dunno as the FIR filters which are just FFT routines of webrtc are all contained in WebRTCAudioProcessing and as individual FFT routines you don’t have api access just the results.

@fastjack was testing actual FFT libs for MFCC so sounds like at that point you do have access to the FFT routines and they are not just submerged in some lib.

You mean use the FFT frame for both MFCC frame and VAD calculation and drop webrtcvad?

I added a place in the web UI to record the examples:

You click “Record” next to each example and speak it. Right now, the web UI doesn’t support multiple wake words but this is absolutely possible in Raven

Per @fastjack’s suggesting, I’m also working on the ability for Raven to save any positive detections to WAV files so you can train another system like Precise down the road.

Right. Seems like there are multiple FFTs being done on the same audio.

Looking at Raven WAV templates dir, it seems there is no way to have a keyword use multiple templates (for later averaging per keyword). Averaging templates from different speakers will result in very poor accuracy.

As this is “personal” wake words, it would be nice to have the ability to setup multiple keywords (one for each family member) with multiple templates each (reducing calculation by averaging templates per keyword).

Maybe something like:

templates_dir/

keyword1/

template1.wav

template2.wav

keyword2/

template3.wav

template4.wav

...

And a CLI args like:

--keywords "./templates_dir/"

or

--keyword "keyword1=./templates_dir/keyword1/*.wav,sensitivity=0.54"

--keyword "keyword2=./templates_dir/keyword2/*.wav"

PS: Not a CLI expert

Yeah its always been the same prob due to KWS always using an external VAD but on each frame the data the FFT frame provides can be used for the MFCC frame and VAD frame.

But yeah 2x FFT runs because VAD & MFCC are usually separate projects/libs not sure why KWS don’t have a VAD function that does.

@fastjack Could you do a sort of feature extraction with VAD that looks for a tonal quality and then switch to a family member?

This is almost exactly what I’m working on now

I think the way to do this in Raven would be to just have multiple keywords, one for each family member. Assuming MFCCs retain some information about tone, this should allow you to differentiate who spoke the command.

I keep mentioning it but https://github.com/JuliaDSP/MFCC.jl#pre-set-feature-extraction-applications does diarization.

The code is there even if not used.

This is why I think a specific C++ library should be made to do all this directly in an optimized fashion (Audiochunk->VAD->Preemphasis->windowing->MFCCs).

Another C++ library can eventually help with the templates DTW comparison.

The wakeword is the main CPU bottleneck for a vocal assistant project (satellite). The rest easily run on a Pi 4.

Man it would be Gorgeous to get as it would be so beneficial for so many VoiceAI projects.

I did give it a go and stalked C/C++ DSP programmers on github and sent pleading messages about a month ago.

I failed

Apols all I am no use but at least my heart is there even though as said useless

I know been same with wakeword and Linto now also hate me  for pestering

for pestering

PS just to name drop https://github.com/JuliaDSP/MFCC.jl#pre-set-feature-extraction-applications once more as I am not a Python programmer or any language any more, I do want to ask if some of the guys will take a look as supposed the interfacing between Python and Julia is supposedly quite straight forward.

Also julia for python experts is also supposedly more native whilst challenging C optimization speeds.

Also the Julia guys themselves wrote those libs are they are promoting what Julia could, can do and the author might be a very good contact to know.

@rolyan_trauts this looks like a perfect starting point for a native all-in-one system. I was able to piece together Raven thanks to the Snips article and @fastjack’s implementation, but I don’t know if I could create an all-in-one system in any reasonable amount of time from “scratch”.

I looked at the diarization part of feacalc a bit. So it extracts 13 MFCC features and does some normalization. Could this be calculated for the Raven templates, and then again once the wake word is detected, to do speaker recognition? DTW could be used again (probably without windowing), and the smallest cosine between all “diarization” templates would hint at who was speaking.