I don’t use a reSpeaker, I use an IQaudIO Codec Zero, but I get the point. Basically, I just have to wait for the thing to start up properly (and slowly) before expecting it to work, even though it gives no hint that it needs more time.

Yeah, the RasPi Zero does take a few minutes to get going, prompting me to think that it needed some feedback to indicate that it is ready - say by hermesLEDcontrol flashing a particular pattern.

But I have found that once through the setup and testing phase it wasn’t so much of an issue because once started it only needs rebooting very infrequently.

And yes, the RasPi Zero is noticeably slower than my RasPi 3A+ … but I already had one, and it works well enough for me.

@tetele I am pleased that you have IQaudio running. I thought the specs looked reasonable, but I was put off by the documentation focusing on audio output, and by knowing practically nothing about Linux audio in case I had to figure out a problem by myself.

It’s pretty simple to set up, to be honest, especially since it has built-in drivers in the HAT’s EEPROM.

The main disadvantage (apart from having a single built-in microphone) is that it doesn’t have an audio jack out of the box, so you have to either solder one to the Line Out header or wire a speaker directly to the dedicated 2-pin screw terminal, then apply the corresponding config from the GitHub repo.

Yeah, I have an IQaudio too, and it is a bit odd: 1 mic + 1 ADC, a 1.2 W speaker output and a solder-on Aux output.

I have to admit the ReSpeaker is probably the best for price and function, at $10 for a HAT.

I also have the Adafruit bonnet, which is very much the same as the ReSpeaker but costs $30. It is maybe a touch better quality, with a better LED layout, but IMO it’s not worth the $20 difference.

The Keyestudio clones are pure noisy junk, and I’d suggest anyone stay clear of them.

I’ve been trying to follow this to build an RPi 3A+ satellite, but I run into all kinds of errors. According to the Rhasspy documentation an RPi 3+ should be enough, but I get kernel mismatch errors and the following:

It is an arm64 system, so I changed armel to arm64 accordingly.

The following packages have unmet dependencies:

rhasspy : Depends: libgfortran4 but it is not installable

Recommends: espeak

Recommends: flite but it is not going to be installed

Recommends: mosquitto but it is not going to be installed

I could install the mosquitto dependency, but libgfortran4, espeak and flite won’t install. There is no documentation about this. Any thoughts?

I don’t know offhand, as I tend to use the Docker install, but from a quick Google:

Great tutorial.

I think you mistyped the intent name. Shouldn’t it be ChangeLightState instead of LightState?

Maybe I’m wrong, but I think you should handle the intent or event on the base machine. If you add another satellite in the future, you can’t retrieve the Home Assistant token, so you are forced to create a new one and replace the token on each existing satellite.

Well spotted! Yes, at the bottom of part 3, references to LightState should be ChangeLightState.

Darn, it looks like I can’t edit that post any longer to correct it.

You are probably correct. I had assumed that Rhasspy uses client-server architecture, and therefore the client is controlling the flow of processing. But I misunderstood.

I now understand that once the wake word is detected (or it may be when the client starts listening to the microphone?), each module generates one or more MQTT messages, which are used to trigger other modules. Thus the Rhasspy Intent Handling module on the base station is just as capable of picking up the MQTT message and calling Home Assistant.
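To make that flow concrete, here is a minimal Python sketch of what any intent handler (on the satellite or the base station) does with a message from a `hermes/intent/<IntentName>` topic, the same topic the NLU publishes to in the log further down this thread. It assumes the camelCase JSON field names of the Hermes protocol (`intent.intentName`, `siteId`, `slots[].slotName`, `slots[].value.value`); `parse_hermes_intent` is a hypothetical helper, not part of Rhasspy.

```python
import json
from typing import Any, Dict, Tuple


def parse_hermes_intent(payload: bytes) -> Tuple[str, str, Dict[str, Any]]:
    """Return (site_id, intent_name, slots) from a hermes/intent/<name> payload.

    Assumes the camelCase JSON field names used on the wire by Hermes.
    """
    message = json.loads(payload)
    intent_name = message["intent"]["intentName"]
    site_id = message.get("siteId", "default")
    # Flatten the slot list into {slot_name: value}
    slots = {slot["slotName"]: slot["value"]["value"] for slot in message.get("slots", [])}
    return site_id, intent_name, slots


# Example payload shaped like the ChangeLightState intent seen later in this thread
example = json.dumps({
    "input": "turn woonkamerlamp off",
    "intent": {"intentName": "ChangeLightState", "confidenceScore": 1.0},
    "siteId": "satallite",
    "slots": [
        {"slotName": "name", "value": {"kind": "Unknown", "value": "woonkamerlamp"}},
        {"slotName": "state", "value": {"kind": "Unknown", "value": "off"}},
    ],
}).encode("utf-8")

if __name__ == "__main__":
    print(parse_hermes_intent(example))
```

In practice you would feed it `msg.payload` from an MQTT client subscribed to `hermes/intent/#` on the shared broker; because every module talks through that broker, it makes no difference which machine runs the handler.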

Unfortunately I cannot quickly and easily check this out, because I have changed my system to use Node-RED instead of Home Assistant intents or events.

Actually, the token is stored in the Rhasspy satellite’s profile, and can easily be copied and pasted into the configuration for a new satellite:

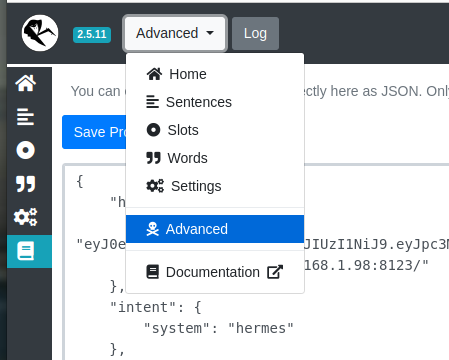

{

"home_assistant": {

"access_token": "eyJ0eXAiOiJ ... long long sequence ... XmCZf2SenOU",

"url": "http://192.168.1.98:8123/"

},

"intent": {

"system": "hermes"

},

Of course, that last statement would be much more meaningful if I said where to find the Rhasspy profile: it is the “Advanced” entry (with an unhelpful skull-and-crossbones icon) in the drop-down menu.
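If you have several satellites, the copy-and-paste can be scripted. A minimal Python sketch, assuming the profile is a plain JSON file (on a default install it is typically somewhere like `~/.config/rhasspy/profiles/en/profile.json`, but check your own setup); `copy_ha_settings` is a hypothetical helper that copies only the `home_assistant` block and leaves every other setting in the target profile alone.

```python
import json
from pathlib import Path


def copy_ha_settings(src: Path, dst: Path) -> None:
    """Copy the "home_assistant" block (url + access_token) from one profile into another."""
    src_profile = json.loads(src.read_text(encoding="utf-8"))
    # Start from the existing target profile so its other settings are preserved
    dst_profile = json.loads(dst.read_text(encoding="utf-8")) if dst.exists() else {}
    dst_profile["home_assistant"] = dict(src_profile.get("home_assistant", {}))
    dst.write_text(json.dumps(dst_profile, indent=4), encoding="utf-8")


if __name__ == "__main__":
    import sys

    # Usage: python3 copy_ha.py <source profile.json> <target profile.json>
    if len(sys.argv) == 3:
        copy_ha_settings(Path(sys.argv[1]), Path(sys.argv[2]))
```

You will likely need to restart Rhasspy on the target satellite afterwards so it rereads the profile.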

One of my satellites is also a RasPi 3A+ (a great alternative to the RasPi Zero 2, especially for the reSpeaker 4-mic HAT with its 12 LEDs, but I digress).

I recall seeing posts about libgfortran4, but it’s been quite a while and I don’t remember doing anything about it. There is nothing in the history on my RasPi 3A+ satellite, but that doesn’t qualify as “proof”.

I do wonder whether you installed the same version of Raspberry Pi OS Lite (32-bit). I used the Lite version because once it is running I don’t intend to need a graphical user interface, and probably the 32-bit version because I’m not going to run huge programs that need lots of memory. Mine starts off with:

Linux Rhasspy-1 5.10.103-v7+ #1529 SMP Tue Mar 8 12:21:37 GMT 2022 armv7l

Thinking about it, maybe the libgfortran4 issue is with the 64-bit OS version?
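One quick way to answer that on any Pi is to compare the kernel architecture with the bitness of the userland, since the two can differ (a 32-bit OS can run on a 64-bit kernel). A minimal Python sketch; `dpkg --print-architecture` remains the authoritative answer for which .deb (armhf, arm64, ...) matches your system.

```python
import platform
import struct


def describe_arch() -> dict:
    """Report the kernel architecture and the bitness of the running userland."""
    return {
        # Same value as `uname -m`: e.g. armv7l (32-bit kernel) or aarch64 (64-bit kernel)
        "machine": platform.machine(),
        # Pointer size of this Python interpreter: 32 on a 32-bit OS, 64 on a 64-bit OS
        "userland_bits": struct.calcsize("P") * 8,
    }


if __name__ == "__main__":
    print(describe_arch())
```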

Arm Neon is an extremely fast SIMD co-processor: using its 64-bit D registers or 128-bit Q registers, it can handle data types from 8-bit to 64-bit.

https://developer.arm.com/documentation/den0018/a/NEON-Intrinsics/Vector-data-types-for-NEON-intrinsics?lang=en
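The register arithmetic behind that is simple: the number of lanes is the register width divided by the element width. This tiny Python sketch just tabulates it; the results line up with the `int8x8_t` (D register) and `int8x16_t` (Q register) style type names in the linked documentation.

```python
def neon_lanes(register_bits: int, element_bits: int) -> int:
    """Number of element_bits-wide lanes in one NEON register of register_bits."""
    if register_bits % element_bits:
        raise ValueError("element width must divide the register width")
    return register_bits // element_bits


if __name__ == "__main__":
    for name, bits in (("D", 64), ("Q", 128)):
        print(name, {f"{e}-bit": neon_lanes(bits, e) for e in (8, 16, 32, 64)})
```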

The Cortex-A53 is a core optimised for Armv8-A, and with a 32-bit OS you will slow Neon operations by about 2x, because you need twice the instructions to load the Neon registers.

Neon optimisation is used heavily, from routines such as FFT to probably all of Arm’s ML-optimised libraries, so the memory advantages of 32-bit really pale into insignificance compared to the speed-up 64-bit gives for operations repeated many times on a voice assistant platform.

Unless you’re on a Pi 4 with 4 GB or more, memory doesn’t really matter, but for many operations, purely by choosing the 32-bit image over the 64-bit image, you will slow the running apps by a considerable amount. Benchmarking 32-bit Mimic against 64-bit Mimic will likely cement which version you pick.
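If you want to run that benchmark yourself, a minimal Python timing harness is enough: run the same command several times on each OS image and compare the medians. The command is a placeholder; substitute whatever TTS (or other) invocation you want to compare, since no particular Mimic command line is assumed here.

```python
import statistics
import subprocess
import sys
import time
from typing import List, Sequence


def time_command(cmd: Sequence[str], runs: int = 5) -> List[float]:
    """Run cmd `runs` times and return the wall-clock duration of each run in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        durations.append(time.perf_counter() - start)
    return durations


if __name__ == "__main__":
    # Placeholder command: pass your own, e.g. python3 bench.py <tts command and args>
    cmd = sys.argv[1:] or [sys.executable, "-c", "pass"]
    results = time_command(cmd)
    print(f"median {statistics.median(results):.3f}s over {len(results)} runs")
```

Using the median rather than the mean keeps one slow first run (cold caches, model loading) from skewing the comparison.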

libgfortran4 is in Buster but not in Bullseye, where it’s libgfortran5.

I usually use the Docker image, so I haven’t installed it this way, but from the forum:

sudo apt-get -f install apparently fixes the missing dependencies, but I haven’t tried it.
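To check which of the two packages your release should have, look at the Debian codename in `/etc/os-release`. A minimal Python sketch; the codename-to-package mapping only encodes the Buster/Bullseye statement above and is not a complete list.

```python
from pathlib import Path
from typing import Dict, Optional

# Only encodes the statement above: Buster has libgfortran4, Bullseye has libgfortran5
LIBGFORTRAN_BY_CODENAME = {"buster": "libgfortran4", "bullseye": "libgfortran5"}


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines (as in /etc/os-release) into a dict, dropping quotes."""
    info: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip('"')
    return info


def expected_libgfortran(os_release_text: str) -> Optional[str]:
    """Return the libgfortran package expected for this release, or None if unknown."""
    codename = parse_os_release(os_release_text).get("VERSION_CODENAME", "")
    return LIBGFORTRAN_BY_CODENAME.get(codename)


if __name__ == "__main__":
    os_release = Path("/etc/os-release")
    if os_release.exists():
        print(expected_libgfortran(os_release.read_text(encoding="utf-8")))
```

If it reports libgfortran5, a package that depends on libgfortran4 has nothing to satisfy it in the standard repositories, which matches the error above.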

Hello there,

First of all, great tutorial! I had been looking to do something similar with Home Assistant for a while, but didn’t find the right platform or integration for the Home Assistant setup I already have at home.

I tried to replicate the example with a newly bought RPi Zero W and a 2-mic Pi HAT to create a standalone satellite. I have a JBL speaker connected to the jack port for testing. I tested the mic and speaker with arecord and could play back the sound with aplay, after adjusting the mic and speaker levels in alsamixer. With a few bumps in the road I managed to get Rhasspy up and running on the satellite. I also managed to get MQTT and Rhasspy running on the Home Assistant base station (RPi 4B+ with 4 GB RAM).
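For repeatable speaker tests it helps to have a known test file rather than a recording. A minimal Python sketch using only the standard `wave` module to write a 1-second 440 Hz tone (16 kHz, 16-bit mono, chosen here as a common speech format rather than a stated Rhasspy requirement); play it back with `aplay test_tone.wav`.

```python
import math
import struct
import wave


def write_test_tone(path: str, freq_hz: float = 440.0, seconds: float = 1.0,
                    rate: int = 16000, amplitude: float = 0.3) -> int:
    """Write a mono 16-bit PCM sine wave to path and return the number of frames written."""
    n_frames = int(rate * seconds)
    peak = int(amplitude * 32767)  # stay well below full scale to avoid clipping
    samples = (int(peak * math.sin(2 * math.pi * freq_hz * i / rate)) for i in range(n_frames))
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(rate)
        wav.writeframes(b"".join(struct.pack("<h", s) for s in samples))
    return n_frames


if __name__ == "__main__":
    write_test_tone("test_tone.wav")
```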

Now I have some problems setting the correct Audio Recording/Audio Playing options in the Rhasspy environment. Most of the time when I refresh the mic/speaker list, it takes a long time and I get a timeout (“concurrent.futures._base.TimeoutError”). Other times I do receive a list of devices and can select “Hardware device with all software conversions (plughw:CARD=Headphones,Dev=0)”, but when I test the mic/speaker the selection resets to “Default Device”, nothing happens, and I get the timeout error shown below.

And sometimes, when I press “refresh” and “test” with a long interval between them, it seems to find one or more devices marked “working!”. But after testing, the devices reset to “Default Device”.
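One way to tell whether the slowness is in ALSA or in Rhasspy is to list the devices yourself. `arecord -L` and `aplay -L` print each PCM name unindented, followed by indented description lines; the minimal Python sketch below parses that into a dict. If this returns quickly and includes the `plughw:...` device while the Rhasspy refresh still times out, the problem is probably not ALSA itself (an assumption, not a diagnosis).

```python
import shutil
import subprocess
from typing import Dict, List, Optional


def parse_alsa_pcm_list(output: str) -> Dict[str, List[str]]:
    """Map each PCM name in `arecord -L` / `aplay -L` output to its description lines."""
    pcms: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for line in output.splitlines():
        if not line.strip():
            continue
        if line[0].isspace():
            # Indented lines describe the most recent PCM name
            if current is not None:
                pcms[current].append(line.strip())
        else:
            current = line.strip()
            pcms[current] = []
    return pcms


if __name__ == "__main__":
    if shutil.which("arecord"):
        out = subprocess.run(["arecord", "-L"], capture_output=True, text=True, timeout=30).stdout
        for name, desc in parse_alsa_pcm_list(out).items():
            print(name, "|", " / ".join(desc))
```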

I already tried restarting from scratch and reflashing the SD card. I also tried installing this on an RPi B+ V1.2, but I get the same errors. The RPi B+ V1.2 does not have a Wi-Fi module and is connected directly via the Ethernet port.

[DEBUG:2023-05-30 00:19:06,454] rhasspyspeakers_cli_hermes: Publishing 105 bytes(s) to hermes/audioServer/rhasspy-sat1/playFinished

[DEBUG:2023-05-30 00:19:36,581] rhasspyserver_hermes:

Traceback (most recent call last):

File "/usr/lib/rhasspy/usr/local/lib/python3.7/site-packages/quart/app.py", line 1821, in full_dispatch_request

result = await self.dispatch_request(request_context)

File "/usr/lib/rhasspy/usr/local/lib/python3.7/site-packages/quart/app.py", line 1869, in dispatch_request

return await handler(**request_.view_args)

File "/usr/lib/rhasspy/rhasspy-server-hermes/rhasspyserver_hermes/__main__.py", line 831, in api_speakers

speakers = await core.get_speakers()

File "/usr/lib/rhasspy/rhasspy-server-hermes/rhasspyserver_hermes/__main__.py", line 882, in get_speakers

result_awaitable, timeout=timeout_seconds

File "/usr/lib/rhasspy/usr/local/lib/python3.7/asyncio/tasks.py", line 449, in wait_for

raise futures.TimeoutError()

concurrent.futures._base.TimeoutError

A similar error to the one above can also occur multiple times when restarting Rhasspy.

Maybe the Pi Zero W and RPi B+ V1.2 are not fully compatible with Rhasspy, but this is all I have at the moment, and the newer models are currently unavailable to buy.

But maybe someone has suggestions for what I could try and how I can make this work?

Kind regards,

Silence

Wow, good timing! I was just about to post the exact same thing. I get the same timeout errors. Glad it’s not just me! I have the 4-mic HAT on an RPi Zero v1.3, so it’s not a hardware issue, since we are using different hardware.

One thing to note: I can record a .wav file just fine using arecord on the terminal. But it seems Rhasspy is having trouble querying the arecord devices and bringing them forward to the UI for use.

I’ve also noticed that the microphone does work for about 0.1 seconds every 2 minutes or so. I can see it capture audio on MQTT Explorer “audioFrame” topic.
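Those audioFrame messages can be inspected programmatically too. In the Hermes protocol each message on `hermes/audioServer/<siteId>/audioFrame` carries a small WAV chunk, so Python's `wave` module can decode it. A minimal sketch; `wav_chunk_info` is a hypothetical helper you would call with `msg.payload` from an MQTT subscriber, and a steady stream of chunks suggests the mic really is streaming.

```python
import io
import wave


def wav_chunk_info(payload: bytes) -> dict:
    """Decode one audioFrame payload (a small WAV file) and summarise it."""
    with wave.open(io.BytesIO(payload), "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_width_bytes": wav.getsampwidth(),
            "rate": rate,
            "frames": frames,
            "seconds": frames / rate,
        }
```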

Edit: Okay, this is down to the MQTT connection. I did a fresh install of Raspbian, installed the mic drivers, then installed Rhasspy. Once on the web UI, I attach and save the mic via arecord and everything works fine. As soon as I save my external MQTT broker, it all breaks and the mic stops working. If I change MQTT back to “Internal” and save, the mic comes back on and starts working again. Can anyone help!?
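Since the breakage coincides with switching to the external broker, a first sanity check is whether the satellite can reach that broker at all. A minimal Python sketch that only tests the TCP connection (1883 is the standard unencrypted MQTT port); it does not check the username/password entered in Rhasspy's MQTT settings, and a passing result does not rule out other causes.

```python
import socket


def broker_reachable(host: str, port: int = 1883, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the broker's port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, DNS failures and timeouts
        return False


if __name__ == "__main__":
    import sys

    if len(sys.argv) > 1:
        print(broker_reachable(sys.argv[1]))
```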

Edit: I just walked through the same process with an RPi 3B+ board I have. No issues with that board; everything seems to work fine. Is it something to do with the Zero boards only?

A couple of days ago I was lucky enough to order an RPi 4B+ with 4 GB RAM. The board came in the mail yesterday and I started testing. The first annoying thing was the Wi-Fi, which keeps disconnecting from time to time even though I used the set power_save off command.

Now, with an Ethernet cable, the connection seems to drop out less often…

Regarding the previous issue, I have made a little more progress with the RPi 4B+. I can refresh the mic and audio devices and also test them, although the seeed-voicecard devices do not always show as working. I have tested a lot of devices, but I’m not getting any sound played or anything recorded.

If anyone has any ideas of how to solve this problem please let me know.

Side comment: is there any way you can update your docs to add some notes on Hardware - Rhasspy saying that the reSpeaker needs a different repo (the correct one you have in your instructions!)? Seeed appears incapable of updating their wiki to mention that their repo doesn’t work with modern Raspbian and that they aren’t bothering to maintain it, so people should use the HinTak repo instead.

I hadn’t seen this tutorial; I was just using the official Rhasspy docs, which recommend the reSpeaker, then following Seeed’s wiki, and having issues. I couldn’t find any info in any recent posts on their forum, so I had to email their tech support for them to tell me about the HinTak repo.

Well, it has been another long day of struggling with the RPi and Rhasspy, but with some success! After several tries with different settings I managed to get the voice and (some of the) sound working! I also set up Rhasspy as a service, which starts nicely after the RPi has booted.

But of course I bumped into the next problem: the settings (or perhaps the profile.json file) are not saved when I save settings. When I reboot the RPi, all the settings are back to default… Luckily I made a backup before rebooting, so I could easily copy the files into the Advanced tab, but this is not the desired behaviour.

Has anyone else had problems with this?

I have this error on working systems, and have just learned to ignore it.

Wi-fi is a real pain in the backside because we can’t see what is going on. Too many possible causes of interference, most of a very transient nature. Not helped by people treating wi-fi as some type of magic that “just works”.

Are you getting good signal strength, or would moving your device closer to the WAP (or with less interference) help ?
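On a Pi you can read the signal figures directly from `/proc/net/wireless` instead of guessing. A minimal Python sketch; the link-quality scale is driver-dependent (often out of 70), and the level is usually reported in dBm, where values closer to zero are better.

```python
from pathlib import Path
from typing import Dict, Tuple


def parse_proc_net_wireless(text: str) -> Dict[str, Tuple[float, float]]:
    """Return {interface: (link_quality, signal_level)} parsed from /proc/net/wireless."""
    stats: Dict[str, Tuple[float, float]] = {}
    for line in text.splitlines()[2:]:  # the first two lines are column headers
        if ":" not in line:
            continue
        iface, _, rest = line.partition(":")
        fields = rest.split()  # status, link, level, noise, ...
        # Values are printed with a trailing '.', e.g. "70." and "-40."
        stats[iface.strip()] = (float(fields[1].rstrip(".")), float(fields[2].rstrip(".")))
    return stats


if __name__ == "__main__":
    proc = Path("/proc/net/wireless")
    if proc.exists():
        print(parse_proc_net_wireless(proc.read_text()))
```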

Yep, who in their right mind would go back to a 2018 version of Raspbian? That really put me off Seeed. When I was getting mine running, HinTak was maintaining his version of the repo (just enough to update it to the latest Raspberry Pi OS) and had even managed to get the official repo updated with his up-to-date version. I haven’t needed to revisit that issue, so I hope it’s still the case.


For these and several other issues, I no longer recommend the RasPi and reSpeaker combo for Rhasspy. But I don’t know of anything else that is easy to set up. I am hoping that Nabu Casa sponsor or release a low-cost ESPHome-based voice assistant gadget.


Welcome to Open Source, also known as “by engineers, for engineers, with only cryptic notes for ‘documentation’”. Congratulations on your success. Seriously, I don’t know how many times I have given up and come back weeks or months later.

I have not encountered this. Is it still happening, or was it a one-off glitch?

The ReSpeaker 2-Mic is really the only one that is cost-effective and works reasonably well.

Most of the trouble with the 2-Mic comes down to the dark arts of ALSA audio and working with Docker.

If you are using Docker for Rhasspy, read this thread.

Also make sure PulseAudio isn’t running and that nothing else is connecting without using the correct ALSA config, because unless you use dmix/dsnoop, ALSA devices are single-use and blocking.

Also, not mentioned in that thread: sometimes PCMs don’t show up and you have to add hint.description "Default Audio Device", as in Installation - Rhasspy.
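As a starting point, here is a minimal Python sketch that writes out an `/etc/asound.conf` along those lines: dmix for playback and dsnoop for capture so several programs can share the card, each wrapped in `plug` for format conversion, plus the `hint.description` so the default PCM is listed. The card name `seeed2micvoicec` is only an example (use the name `aplay -l` shows), the `ipc_key` values are arbitrary but must be unique, and this has not been tested against any specific card.

```python
def build_asound_conf(card: str, ipc_base: int = 1024) -> str:
    """Return an asound.conf sharing one card via dmix (playback) and dsnoop (capture)."""
    return f"""pcm.!default {{
    type asym
    playback.pcm {{
        type plug
        slave.pcm "dmixed"
    }}
    capture.pcm {{
        type plug
        slave.pcm "dsnooped"
    }}
    hint.description "Default Audio Device"
}}

pcm.dmixed {{
    type dmix
    ipc_key {ipc_base}
    slave.pcm "hw:{card}"
}}

pcm.dsnooped {{
    type dsnoop
    ipc_key {ipc_base + 1}
    slave.pcm "hw:{card}"
}}
"""


if __name__ == "__main__":
    # Example card name; review the output before copying it to /etc/asound.conf
    print(build_asound_conf("seeed2micvoicec"))
```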

You can use any USB sound card and a wired microphone; lapel or ‘car’ mics will work.

With a mono mic input you likely need a TRS (Tip Ring Sleeve) type mic, but these are expected to always be used near-field and will likely lack amplification.

You can add amplification with this great module, DC 3.6-12V MAX9814 Electret Microphone Amplifier Module AGC Function For Arduino | eBay, which will boost the signal for far-field use; the chip also includes an analogue AGC.

The board with the mic on board might be easier to use with Dupont jumpers: MAX9814 Electret Microphone Amplifier Board Module AGC Auto Gain For Arduino AM | eBay
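To put "boost" into numbers: the MAX9814 is documented with selectable gains of 40, 50 or 60 dB (check the datasheet and your board's GAIN pin wiring), and a gain in dB converts to an amplitude ratio as 10 ** (dB / 20). A minimal Python sketch of that conversion:

```python
import math


def db_to_amplitude_ratio(gain_db: float) -> float:
    """Convert a voltage/amplitude gain in dB to a linear ratio."""
    return 10 ** (gain_db / 20)


def amplitude_ratio_to_db(ratio: float) -> float:
    """Inverse of db_to_amplitude_ratio."""
    return 20 * math.log10(ratio)


if __name__ == "__main__":
    for setting in (40, 50, 60):
        print(f"{setting} dB -> about x{db_to_amplitude_ratio(setting):.0f}")
```

So each 10 dB step multiplies the signal amplitude by roughly 3.16, which is why the module makes such a difference for a mic that was designed to sit close to the speaker.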

With a standard (TRS) sound card, the tip is the signal and the sleeve is GND. You can forget about the ring, as that is a bias voltage for an electret; the mic will work, but the volume will likely be low, which is why you use a MAX9814, as it does the bias for you.

The only stereo sound card I know of is the Plugable USB Audio Adapter (Plugable Technologies), where the tip and ring carry left and right, with the sleeve as a common ground.
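If you do use a stereo card like that, the capture arrives as interleaved left/right samples (tip, then ring, per frame). A minimal Python sketch that splits raw 16-bit little-endian stereo PCM, the `S16_LE` format you can request from arecord with `-f S16_LE -c 2`, into separate channels:

```python
import struct
import sys
from array import array
from typing import Tuple


def split_stereo_s16le(raw: bytes) -> Tuple[array, array]:
    """Split interleaved S16_LE stereo PCM into (left, right) arrays of samples."""
    if len(raw) % 4:
        raise ValueError("stereo S16_LE frames are 4 bytes each")
    samples = array("h")
    samples.frombytes(raw)
    if sys.byteorder == "big":
        samples.byteswap()  # the data is little-endian; array uses native byte order
    # Even indices are the left channel (tip), odd indices the right channel (ring)
    return samples[0::2], samples[1::2]


if __name__ == "__main__":
    frames = struct.pack("<6h", 100, -100, 200, -200, 300, -300)
    left, right = split_stereo_s16le(frames)
    print(list(left), list(right))
```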

No one installs a beamforming or BSS (blind source separation) algorithm that can use multiple mics, so likely any el-cheapo USB sound card and amplified mic can be used.

If you exclude multi-mic algorithms, then the only difference with a broadcast mic is that it expects to be desk-mounted and close-field, so it lacks volume; to get that further field, the MAX9814 is just excellent.

Stay away from having PulseAudio installed with Docker, and make sure your /etc/asound.conf is correct and shared in your docker run command.
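For reference, a minimal Python sketch that assembles such a `docker run` command with the ALSA config shared read-only. The base options are modelled on the Docker instructions commonly given in the Rhasspy installation docs (check them against the current docs), and the profile path is an example; the `/etc/asound.conf` volume is the part this advice is about.

```python
import shlex
from pathlib import Path
from typing import List


def rhasspy_docker_args(profile_dir: Path, lang: str = "en") -> List[str]:
    """Build a docker run command for Rhasspy that shares the host's ALSA config."""
    return [
        "docker", "run", "-d",
        "-p", "12101:12101",
        "--name", "rhasspy",
        "--restart", "unless-stopped",
        "-v", f"{profile_dir}:/profiles",
        "-v", "/etc/localtime:/etc/localtime:ro",
        # Share the host's ALSA config read-only so the container sees the same PCMs
        "-v", "/etc/asound.conf:/etc/asound.conf:ro",
        "--device", "/dev/snd:/dev/snd",
        "rhasspy/rhasspy",
        "--user-profiles", "/profiles",
        "--profile", lang,
    ]


if __name__ == "__main__":
    args = rhasspy_docker_args(Path.home() / ".config" / "rhasspy" / "profiles")
    print(" ".join(shlex.quote(a) for a in args))
```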

PS: I don’t know why these are not more commonly available, as they are just so handy when working with Dupont cables and the limited VCC & GND pins:

https://www.aliexpress.com/item/1005003093298771.html

Also, with audio, be careful of creating ground loops, where wire resistance will cause interference.

Just connect a single GND, probably from the sound card (sleeve), as also connecting it to the Pi GND will likely create a ground loop with unequal resistance (the ground is common, so it only needs to be connected once).

Thanks for your response. I can indeed relate to your struggles. I have been experimenting with the RPi for a couple of years, including OpenCV projects, which can be a real pain.

Regarding the Wi-Fi issue, I’m currently ~2 m away from the router, so this “should” not be an issue. Maybe it is the Wi-Fi signal strength from the gateway. At work I also have an RPi 4B running OpenCV (also on RPi OS with desktop), but I don’t seem to have (noticeable) Wi-Fi issues there. For now I will just use an Ethernet cable.

Last time I did indeed rage-quit, because I thought I had some things working but all the Rhasspy settings were gone every time I shut the system down. Now, a few days later, I rebooted the system and apparently the settings were still there! So I don’t have an explanation for it yet.

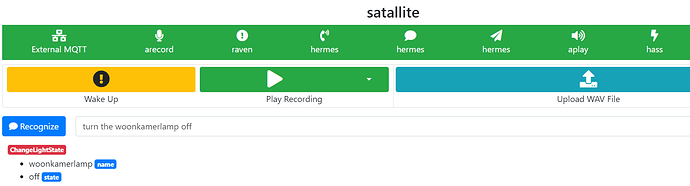

I do have a couple of issues I don’t yet understand. I have an RPi 4B running Rhasspy. I can give it voice commands and it wakes up on the wake word (I called it Jarvis for now :) ). I have made some sentences which are recognized by the Rhasspy satellite:

On the base station (Home Assistant) side I do seem to receive these commands, because the intents are recognized. However, the logs seem to be delayed: when I give Rhasspy a new command, the previously given command shows up as new in the logs:

Rhasspy Assistant

[DEBUG:2023-06-06 20:39:07,963] rhasspynlu_hermes: Publishing 1031 bytes(s) to hermes/intent/ChangeLightState

[DEBUG:2023-06-06 20:39:07,981] rhasspydialogue_hermes: <- NluIntent(input='turn woonkamerlamp off', intent=Intent(intent_name='ChangeLightState', confidence_score=1.0), site_id='satallite', id=None, slots=[Slot(entity='name', value={'kind': 'Unknown', 'value': 'woonkamerlamp'}, slot_name='name', raw_value='woonkamerlamp', confidence=1.0, range=SlotRange(start=5, end=18, raw_start=5, raw_end=18)), Slot(entity='state', value={'kind': 'Unknown', 'value': 'off'}, slot_name='state', raw_value='off', confidence=1.0, range=SlotRange(start=19, end=22, raw_start=19, raw_end=22))], session_id='satallite-default-1aa996ba-6415-4d96-b651-0f0631aed449', custom_data='default', asr_tokens=[[AsrToken(value='turn', confidence=1.0, range_start=0, range_end=4, time=None), AsrToken(value='woonkamerlamp', confidence=1.0, range_start=5, range_end=18, time=None), AsrToken(value='off', confidence=1.0, range_start=19, range_end=22, time=None)]], asr_confidence=0.99036355, raw_input='turn woonkamerlamp off', wakeword_id='default', lang=None)

[DEBUG:2023-06-06 20:39:07,983] rhasspydialogue_hermes: Recognized NluIntent(input='turn woonkamerlamp off', intent=Intent(intent_name='ChangeLightState', confidence_score=1.0), site_id='satallite', id=None, slots=[Slot(entity='name', value={'kind': 'Unknown', 'value': 'woonkamerlamp'}, slot_name='name', raw_value='woonkamerlamp', confidence=1.0, range=SlotRange(start=5, end=18, raw_start=5, raw_end=18)), Slot(entity='state', value={'kind': 'Unknown', 'value': 'off'}, slot_name='state', raw_value='off', confidence=1.0, range=SlotRange(start=19, end=22, raw_start=19, raw_end=22))], session_id='satallite-default-1aa996ba-6415-4d96-b651-0f0631aed449', custom_data='default', asr_tokens=[[AsrToken(value='turn', confidence=1.0, range_start=0, range_end=4, time=None), AsrToken(value='woonkamerlamp', confidence=1.0, range_start=5, range_end=18, time=None), AsrToken(value='off', confidence=1.0, range_start=19, range_end=22, time=None)]], asr_confidence=0.99036355, raw_input='turn woonkamerlamp off', wakeword_id='default', lang=None)

[DEBUG:2023-06-06 20:39:11,635] rhasspyasr_kaldi_hermes: Receiving audio

[DEBUG:2023-06-06 20:39:14,182] rhasspydialogue_hermes: <- AudioPlayFinished(id='c7390f2a-e96f-44b9-aad4-39897325088a', session_id='c7390f2a-e96f-44b9-aad4-39897325088a')

[DEBUG:2023-06-06 20:39:14,183] rhasspytts_larynx_hermes: <- AudioPlayFinished(id='c7390f2a-e96f-44b9-aad4-39897325088a', session_id='c7390f2a-e96f-44b9-aad4-39897325088a')

[ERROR:2023-06-06 20:39:33,936] rhasspydialogue_hermes: Session timed out for site satallite: satallite-default-1aa996ba-6415-4d96-b651-0f0631aed449

[DEBUG:2023-06-06 20:39:33,939] rhasspydialogue_hermes: -> AsrStopListening(site_id='satallite', session_id='satallite-default-1aa996ba-6415-4d96-b651-0f0631aed449')

I also adapted configuration.yaml to tell it that my intents are listed in a new intents file:

intent:

intent_script: !include intents.yaml

And of course I created intents.yaml with some examples:

#

# intents.yaml - the actions to be performed by Rhasspy voice commands

#

GetTime:

speech:

text: The current time is {{ now().strftime("%H %M") }}

ChangeLightState:

speech:

text: Turning {{ name }} {{state }}

action:

- service: light.turn_{{ state }}

target:

entity_id: light.{{ name }}

GetTemperature: # Intent type

speech:

text: hello

action:

service: notify.notify

data:

message: Hello from an intent!

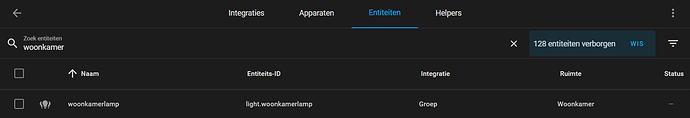

And the entity seems to correspond to the entity in Home Assistant:

So I think I have everything set up to turn a lamp in the living room (woonkamer) on or off, but still nothing happens. Maybe Home Assistant does not reach intents.yaml or configuration.yaml? Is there a way to check whether these files are reached when a satellite gives a command (with a print statement or something)? Or maybe I have made a mistake somewhere else. Perhaps you have some advice on what direction I should be looking in?
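One way to check the Home Assistant half on its own is to call its `/api/intent/handle` REST endpoint directly; that endpoint is available because you have `intent:` in configuration.yaml. If the lamp switches with this request, intent_script and intents.yaml are being read, and the problem is in how the intent gets from Rhasspy to Home Assistant. A minimal Python sketch using only the standard library; the URL and token are placeholders for your own Home Assistant address and long-lived access token.

```python
import json
import urllib.request


def build_intent_request(base_url: str, token: str, intent: str, data: dict) -> urllib.request.Request:
    """Build a POST to Home Assistant's /api/intent/handle endpoint."""
    url = base_url.rstrip("/") + "/api/intent/handle"
    body = json.dumps({"name": intent, "data": data}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )


if __name__ == "__main__":
    req = build_intent_request("http://192.168.1.98:8123/", "YOUR_LONG_LIVED_TOKEN",
                               "ChangeLightState", {"name": "woonkamerlamp", "state": "off"})
    # Uncomment to actually send it; the response should contain the intent_script speech
    # with urllib.request.urlopen(req, timeout=10) as resp:
    #     print(resp.status, resp.read().decode())
```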

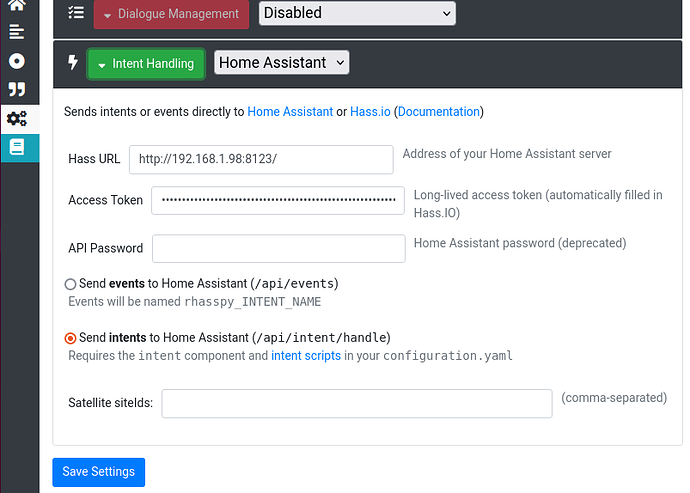

Hmmm, it looks as though your satellite is set up OK, and it looks like your intents in HA are OK …

I guess you mean that the log shows the Rhasspy satellite is communicating with the Rhasspy HA add-on running on the base station.

But are the intents getting from the satellite to Home Assistant itself ?

On your satellite, in the “Intent Handling” section of “Settings”, what do you have? I think it should look something like:

See the “Getting the Intents from the Satellite to Host” section in post #6 above. I used the “sending intents” method, but I know others send events successfully, and I personally now use Node-RED for all my automations via MQTT.

Can anyone help out? I’ve got almost everything working fine. My only issue is with Porcupine. I have the mic set and Porcupine running (the list populates with RPi options), and everything is fine. Then I switch on external MQTT. After this, all the model choices (.ppn files) are Linux versions and the wake word stops working. Has anyone else experienced this? How can I fix it?
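Porcupine keyword (.ppn) files are built per platform, and in Picovoice's own repository they are named with a platform suffix such as `_raspberry-pi.ppn` or `_linux.ppn`; a file built for x86 Linux will not load on a Pi. A plausible but unconfirmed reading of the symptom is that, once external MQTT is on, the keyword list is being populated for a different platform than the one running Porcupine. The minimal Python sketch below filters a list of keyword files for the platform the code runs on; the machine-to-tag mapping is a hypothetical simplification.

```python
import platform
from typing import Iterable, List


def porcupine_platform_tag(machine: str) -> str:
    """Hypothetical mapping from `uname -m` to a Porcupine keyword-file platform tag."""
    if machine.startswith("arm") or machine == "aarch64":
        return "raspberry-pi"
    return "linux"


def matching_keyword_files(filenames: Iterable[str], machine: str) -> List[str]:
    """Keep only keyword files whose suffix matches the platform tag."""
    tag = porcupine_platform_tag(machine)
    return sorted(name for name in filenames if name.endswith(f"_{tag}.ppn"))


if __name__ == "__main__":
    files = ["jarvis_linux.ppn", "jarvis_raspberry-pi.ppn", "porcupine_raspberry-pi.ppn"]
    print(matching_keyword_files(files, platform.machine()))
```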