Thanks for your response. I can indeed relate to your struggles. I have been experimenting with the RPi for a couple of years, including OpenCV projects, which can be a real pain.

Regarding the Wi-Fi issue: I'm currently about 2 m away from the router, so this "should" not be a problem. Maybe it's the Wi-Fi signal strength from the gateway. At work I also have an RPi 4B running OpenCV (also on Raspberry Pi OS with desktop), and I don't seem to have any noticeable Wi-Fi issues there. For now I'll just use an Ethernet cable.

Last time I indeed rage-quit, because I thought I had some things working, but all the Rhasspy settings were gone every time I shut the system down. A few days later I rebooted the system and apparently the settings were still there! So I don't have an explanation for it yet.

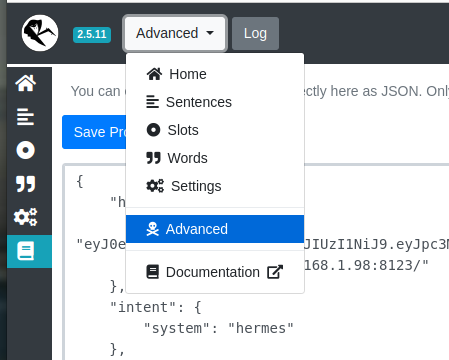

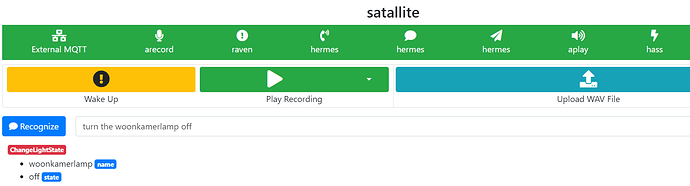

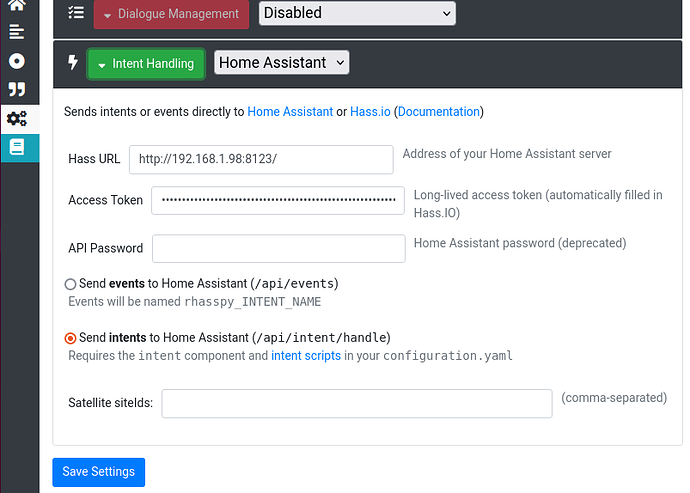

There are a couple of issues I don't understand yet. I have an RPi 4B running Rhasspy. I can give it voice commands and it wakes up on the wake word (I called it Jarvis for now :) ). I have made some sentences which are recognized by the Rhasspy satellite:

On the base station (Home Assistant) side I do seem to receive these commands, because the intents are recognized. However, they seem to show up delayed in the logs: when I give Rhasspy a new command, the previously given command shows up as the new entry in the logs:

Rhasspy Assistant log:

```
[DEBUG:2023-06-06 20:39:07,963] rhasspynlu_hermes: Publishing 1031 bytes(s) to hermes/intent/ChangeLightState
[DEBUG:2023-06-06 20:39:07,981] rhasspydialogue_hermes: <- NluIntent(input='turn woonkamerlamp off', intent=Intent(intent_name='ChangeLightState', confidence_score=1.0), site_id='satallite', id=None, slots=[Slot(entity='name', value={'kind': 'Unknown', 'value': 'woonkamerlamp'}, slot_name='name', raw_value='woonkamerlamp', confidence=1.0, range=SlotRange(start=5, end=18, raw_start=5, raw_end=18)), Slot(entity='state', value={'kind': 'Unknown', 'value': 'off'}, slot_name='state', raw_value='off', confidence=1.0, range=SlotRange(start=19, end=22, raw_start=19, raw_end=22))], session_id='satallite-default-1aa996ba-6415-4d96-b651-0f0631aed449', custom_data='default', asr_tokens=[[AsrToken(value='turn', confidence=1.0, range_start=0, range_end=4, time=None), AsrToken(value='woonkamerlamp', confidence=1.0, range_start=5, range_end=18, time=None), AsrToken(value='off', confidence=1.0, range_start=19, range_end=22, time=None)]], asr_confidence=0.99036355, raw_input='turn woonkamerlamp off', wakeword_id='default', lang=None)
[DEBUG:2023-06-06 20:39:07,983] rhasspydialogue_hermes: Recognized NluIntent(input='turn woonkamerlamp off', intent=Intent(intent_name='ChangeLightState', confidence_score=1.0), site_id='satallite', id=None, slots=[Slot(entity='name', value={'kind': 'Unknown', 'value': 'woonkamerlamp'}, slot_name='name', raw_value='woonkamerlamp', confidence=1.0, range=SlotRange(start=5, end=18, raw_start=5, raw_end=18)), Slot(entity='state', value={'kind': 'Unknown', 'value': 'off'}, slot_name='state', raw_value='off', confidence=1.0, range=SlotRange(start=19, end=22, raw_start=19, raw_end=22))], session_id='satallite-default-1aa996ba-6415-4d96-b651-0f0631aed449', custom_data='default', asr_tokens=[[AsrToken(value='turn', confidence=1.0, range_start=0, range_end=4, time=None), AsrToken(value='woonkamerlamp', confidence=1.0, range_start=5, range_end=18, time=None), AsrToken(value='off', confidence=1.0, range_start=19, range_end=22, time=None)]], asr_confidence=0.99036355, raw_input='turn woonkamerlamp off', wakeword_id='default', lang=None)
[DEBUG:2023-06-06 20:39:11,635] rhasspyasr_kaldi_hermes: Receiving audio
[DEBUG:2023-06-06 20:39:14,182] rhasspydialogue_hermes: <- AudioPlayFinished(id='c7390f2a-e96f-44b9-aad4-39897325088a', session_id='c7390f2a-e96f-44b9-aad4-39897325088a')
[DEBUG:2023-06-06 20:39:14,183] rhasspytts_larynx_hermes: <- AudioPlayFinished(id='c7390f2a-e96f-44b9-aad4-39897325088a', session_id='c7390f2a-e96f-44b9-aad4-39897325088a')
[ERROR:2023-06-06 20:39:33,936] rhasspydialogue_hermes: Session timed out for site satallite: satallite-default-1aa996ba-6415-4d96-b651-0f0631aed449
[DEBUG:2023-06-06 20:39:33,939] rhasspydialogue_hermes: -> AsrStopListening(site_id='satallite', session_id='satallite-default-1aa996ba-6415-4d96-b651-0f0631aed449')
```

I also adapted configuration.yaml so that it loads the intents from a new intent file:

```yaml
intent:

intent_script: !include intents.yaml
```
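A possible way to make Home Assistant more talkative about intent handling is the standard `logger` integration in configuration.yaml. This is only a sketch: the logger names below are the module paths of the `intent` and `intent_script` integrations, and whether they emit anything useful at debug level is an assumption, not something verified against this setup.

```yaml
# configuration.yaml (addition) - debugging sketch, not verified on this setup
logger:
  default: warning
  logs:
    # Assumed module paths of the intent integrations; raise them to debug
    homeassistant.components.intent: debug
    homeassistant.components.intent_script: debug
```

After a restart, any extra output would end up in the regular Home Assistant log, next to the existing entries.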

And of course I implemented intents.yaml with some examples:

```yaml
#
# intents.yaml - the actions to be performed by Rhasspy voice commands
#
GetTime:
  speech:
    text: The current time is {{ now().strftime("%H %M") }}

ChangeLightState:
  speech:
    text: Turning {{ name }} {{ state }}
  action:
    - service: light.turn_{{ state }}
      target:
        entity_id: light.{{ name }}

GetTemperature: # Intent type
  speech:
    text: hello
  action:
    service: notify.notify
    data:
      message: Hello from an intent!
```
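As a rough equivalent of a print statement, one option is to temporarily add a `persistent_notification.create` step at the start of the `ChangeLightState` action. If a notification with the slot values appears in the Home Assistant UI after a voice command, the intent script is being reached and the problem is more likely in the light service call; if nothing appears, the intent probably never arrives at `intent_script`. A minimal sketch, meant to replace the existing `ChangeLightState` entry while debugging (the title and message texts are just illustrative):

```yaml
# intents.yaml - temporary debugging variant of ChangeLightState (sketch)
ChangeLightState:
  speech:
    text: Turning {{ name }} {{ state }}
  action:
    # Debug step: shows the received slot values as a notification in the UI
    - service: persistent_notification.create
      data:
        title: ChangeLightState received
        message: "name={{ name }}, state={{ state }}"
    # Original step: calls light.turn_on or light.turn_off on light.<name>
    - service: light.turn_{{ state }}
      target:
        entity_id: light.{{ name }}
```

With the logged command "turn woonkamerlamp off", the notification would be expected to read `name=woonkamerlamp, state=off`, which also makes it easy to compare the rendered entity ID with the one in Home Assistant.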

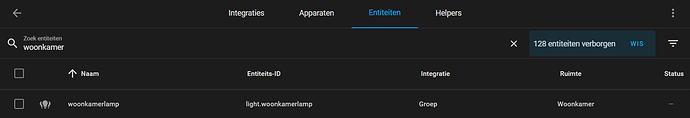

And the entity seems to correspond to the entity in Home Assistant:

So I think I have everything set up to turn a lamp on or off in the living room (woonkamer), but still nothing happens. Maybe Home Assistant does not reach intents.yaml or configuration.yaml? Is there a way to check whether these files are reached when a command is given by a satellite (with a print statement or something similar)? Or maybe I have made a mistake somewhere else. Perhaps you have some advice on which direction I should be looking in?