I will look but I am targeting supplementary on device training and its hard to convert CTC error rate to what is usually quoted for KW. To get user of use and hardware of use into the dataset is what I am aiming at and its quite easy with Tensorflow but with the above I am quite unsure how to implement that.

I am doing prep work for ‘Project Ears’ which is just a simple interoperable KWS system that will work with any ASR the server side either sits on ASR host or is standalone and on device training is a bit of a misnomer as the server is the device and trained models are shipped OTA to simple ‘Ears’ which are simple KWS.

The models are not big only the original master model is of a large dataset that is loaded and shifted by the weights of locally trained models.

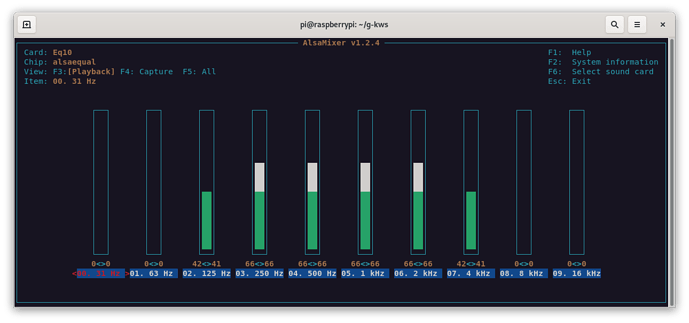

I only know how to do that with standard TFLite models but also I am to employ both Pi & Microcontroller as have a hunch I can squeeze this down to ESP32-S3 but recently been thinking the PiZero2 is such a good price the couple of $ is not worth the horsepower loss but keeping ESP open as likely those ESP32-S3 will eventually land around the $5 mark.

I am using a google framework the models are already done for me google-research/kws_streaming at master · google-research/google-research · GitHub

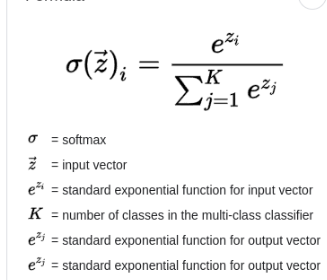

I just just think some basic assumptions of creating datasets are misguided due to the way the vector graph and softmax work.

As the math looks confusing but the the correlation between labels is quite simple especially with low label count KWS as when you create an ‘all-in’ ‘unknown’ its a seesaw as you move away from KW by adding !KW you automatically inflate anything not from that labels softmax score if it doen’t fit that label.

I think we have always had the tools and models to create good KW but the methods of use for me after much trial and thought are slightly suspect.

Google already provide tensorflow and even publish a python framework ready to go to create models I guess its understandable they stop there as you shouldn’t copy what is a benchmark dataset methodology for a working accurate KWS dataset.

What I am saying its any and all vector models are the same and are built with simply too few labels where not only do they act as a sink for !kw due to the seesaw of softmax when there score rises it has a reciprical effect on KW so by clustering more labels you can create more relevance to softmax that often swings to max because the other label fit is far from current input rather than the KW actually matching as often KWS are shy of K in the above to classify accurately… Which is a fairly easy fix as aiming to add a singular ‘similar’ label to the already kw, unknown & noise that has much less variance than unknown but wider than the KW and see how some recipes work, but boy 1050ti aint great hacking thousands of soundfiles with Sox is a nightmare though…

Accessorizing KWS with personalized KW is nice to have but secondary to accuracy and I know accuracy can be much better by adding more labels to stop the near binary switch of ‘if it isn’t this label it must be that as an output must be made’

Once a single model is done it can be shared and the best accuracy is to create models for specific language tailored to fit or use the usual standard English model that garners more personal accuracy through ‘on device training’.

Training a CTC for all the phones than just the single audio sample of a vector model is far beyond me and my GTX1050ti are capable of but with some frustration its possible with more simple vector models.

Also due to the problem of working with even though large on certain words the quality of Ml-commons is not great and sort of need to build models to filter my dataset and create a base dataset to work with but at least for 1st time there is quantity.

I have been wondering about taking a different tack and building 2x models to make dataset manipulation easier with Hey & Marvin on two separate models as like the smaller single phone ‘Hey’ model will also be a reasonably accurate VAD so sheding load and getting a model based VAD for the cost of training flexibility but again prob distraction avenue but I have that one to try also.

The hardest part is automating silence stripping of wav files and after many different tries have just gone for brute repetition of increasing strength of the ones still longer than required window.

for f in *.wav; do

cp "$f" /tmp/sil1.wav

sox /tmp/sil1.wav -p silence 1 0.1 0.0025% reverse | sox -p "$f" silence 1 0.01 0.005% reverse

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

sox /tmp/sil1.wav -p silence 1 0.1 0.005% reverse | sox -p "$f" silence 1 0.1 0.01% reverse

fi

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

sox /tmp/sil1.wav -p silence 1 0.1 0.01% reverse | sox -p "$f" silence 1 0.1 0.02% reverse

fi

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

sox /tmp/sil1.wav -p silence 1 0.1 0.02% reverse | sox -p "$f" silence 1 0.1 0.04% reverse

fi

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

sox /tmp/sil1.wav -p silence 1 0.1 0.06% reverse | sox -p "$f" silence 1 0.1 0.1% reverse

fi

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

sox /tmp/sil1.wav -p silence 1 0.1 0.15% reverse | sox -p "$f" silence 1 0.1 0.25% reverse

fi

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

sox /tmp/sil1.wav -p silence 1 0.1 0.5% reverse | sox -p "$f" silence 1 0.1 0.75% reverse

fi

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

sox /tmp/sil1.wav -p silence 1 0.1 1.0% reverse | sox -p "$f" silence 1 0.1 1.5% reverse

fi

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

sox /tmp/sil1.wav -p silence 1 0.1 3.0% reverse | sox -p "$f" silence 1 0.1 5.0% reverse

fi

cp "$f" /tmp/sil1.wav

dur=$(soxi -D /tmp/sil1.wav )

dur=$(echo "scale=0; $dur * 32768 /1" | bc)

if [ $dur -gt 13107 ]; then

rm "$f"

fi

if [ $dur -lt 8192 ]; then

rm "$f"

fi

done

Its only way I can find without killing so many files, but slowly getting scripts and methods together to automate much.

With nothing to do for a model apart from edit the param script ffor the Googleresearch KWS benchmark framework

$CMD_TRAIN \

--data_url '' \

--data_dir /opt/tensorflow/data \

--train_dir $MODELS_PATH/crnn_state/ \

--split_data 0 \

--wanted_words heymarvin,unk,noise \

--resample 0.0 \

--volume_resample 0.0 \

--background_volume 0.30 \

--clip_duration_ms 1000 \

--mel_upper_edge_hertz 7600 \

--how_many_training_steps 10000,10000,10000,10000 \

--learning_rate 0.001,0.0005,0.0001,0.00002 \

--window_size_ms 40.0 \

--window_stride_ms 20.0 \

--mel_num_bins 40 \

--dct_num_features 20 \

--alsologtostderr \

--train 1 \

--lr_schedule 'exp' \

--use_spec_augment 1 \

--time_masks_number 2 \

--time_mask_max_size 10 \

--frequency_masks_number 2 \

--frequency_mask_max_size 5 \

--return_softmax 1 \

crnn \

--cnn_filters '16,16' \

--cnn_kernel_size '(3,3),(5,3)' \

--cnn_act "'relu','relu'" \

--cnn_dilation_rate '(1,1),(1,1)' \

--cnn_strides '(1,1),(1,1)' \

--gru_units 256 \

--return_sequences 0 \

--dropout1 0.1 \

--units1 '128,256' \

--act1 "'linear','relu'" \

--stateful 1 \

Then edit on the ondevice at the end of training.