Hi,

I have a main server running mopidy and a snapcast server. On the same network I have a couple of RPis running snapcast clients.

These RPis also run rhasspy, but I don’t know how to listen to music and rhasspy’s audio output at the same time.

I thought about piping the audio from rhasspy to the snapcast server so that it can be heard on all snapcast clients. That is what I’m already doing with mopidy, which sends its output to the snapcast server.

I defined a snapcast stream using a TCP server:

# /etc/snapserver.conf

stream = tcp://127.0.0.1:3333?name=mopidy_tcp

The mopidy configuration has the following:

# /etc/mopidy/mopidy.conf

output = audioresample ! audioconvert ! audio/x-raw,rate=48000,channels=2,format=S16LE ! tcpclientsink host=127.0.0.1 port=3333

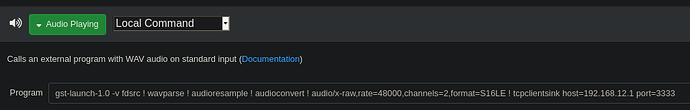

Then, on the RPi satellites, I tried the following custom commands for rhasspy’s audio output:

- Use netcat to pipe the audio from stdin to the snapcast server:

nc 192.168.IP.SERVER 3333 -

- Use gstreamer (as used in mopidy) to pipe the audio:

gst-launch-1.0 -v fdsrc ! audio/x-raw,format=S16BE,channels=1,rate=8000 ! audioconvert ! audio/x-raw,format=S16LE,channels=1,rate=8000 ! wavenc ! tcpclientsink host=192.168.IP.SERVER port=3333

But neither of them works: I only hear noise on the snapcast clients. I think it might be related to the encoding of the audio, and gstreamer is difficult to debug.
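
A possible cause of the noise, offered as a guess rather than a confirmed diagnosis: mopidy sends headerless raw PCM at 48000 Hz, 2 channels, S16LE, while the commands above appear to send something else (a WAV container and, in the gstreamer case, 8000 Hz mono). The Python sketch below shows one way a custom command could convert the audio before sending it. It assumes that rhasspy writes a complete 16-bit PCM WAV file to the command's stdin, that the snapcast stream expects the same format mopidy sends, and that port 3333 is reachable from the satellite. The function name `wav_to_snapcast_pcm` and the nearest-neighbour resampling are illustrative choices, not part of either tool.

```python
import io
import socket
import struct
import sys
import wave

# Assumption: the snapcast stream expects what mopidy sends above,
# i.e. raw S16LE PCM at 48000 Hz with 2 channels and no header.
TARGET_RATE = 48000
TARGET_CHANNELS = 2


def wav_to_snapcast_pcm(wav_bytes: bytes) -> bytes:
    """Convert a 16-bit PCM WAV file to raw 48 kHz stereo S16LE samples."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        channels = wav.getnchannels()
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())

    samples = struct.unpack("<%dh" % (len(raw) // 2), raw)
    mono = samples[::channels]  # keep only the first channel of each frame

    # Nearest-neighbour (sample-and-hold) resampling: crude but dependency-free.
    n_out = len(mono) * TARGET_RATE // rate
    out = []
    for i in range(n_out):
        sample = mono[i * rate // TARGET_RATE]
        out.extend((sample,) * TARGET_CHANNELS)  # duplicate into each channel
    return struct.pack("<%dh" % len(out), *out)


def send_to_snapserver(host: str, port: int, pcm: bytes) -> None:
    """Send the raw PCM bytes to the snapcast TCP stream."""
    with socket.create_connection((host, port)) as sock:
        sock.sendall(pcm)


# Only runs when called as: script.py HOST PORT (WAV data on stdin)
if __name__ == "__main__" and len(sys.argv) == 3:
    pcm_data = wav_to_snapcast_pcm(sys.stdin.buffer.read())
    send_to_snapserver(sys.argv[1], int(sys.argv[2]), pcm_data)
```

Saved under a hypothetical name such as `rhasspy_to_snapcast.py`, it would be called as `python3 rhasspy_to_snapcast.py 192.168.IP.SERVER 3333` with the WAV data on stdin. The sample-and-hold resampling is deliberately simple; a proper resampler such as gstreamer's `audioresample` should sound better.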

I saw that this has been discussed here: Stream music or radio

But there is still no concrete answer.