Hey,

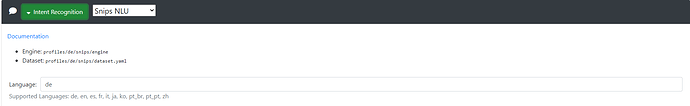

I would like to test Snips NLU, but I'm having some problems with it.

I have installed the Rhasspy Docker container, version 2.5.

Do I need to install anything else to use Snips?

I get a `TimeoutError` during testing:

```
[ERROR:2020-07-27 11:49:00,023] rhasspyserver_hermes:
Traceback (most recent call last):
  File "/usr/lib/rhasspy/lib/python3.7/site-packages/quart/app.py", line 1821, in full_dispatch_request
    result = await self.dispatch_request(request_context)
  File "/usr/lib/rhasspy/lib/python3.7/site-packages/quart/app.py", line 1869, in dispatch_request
    return await handler(**request_.view_args)
  File "/usr/lib/rhasspy/lib/python3.7/site-packages/rhasspyserver_hermes/__main__.py", line 1341, in api_text_to_intent
    user_entities=user_entities,
  File "/usr/lib/rhasspy/lib/python3.7/site-packages/rhasspyserver_hermes/__main__.py", line 2458, in text_to_intent_dict
    result = await core.recognize_intent(text, intent_filter=intent_filter)
  File "/usr/lib/rhasspy/lib/python3.7/site-packages/rhasspyserver_hermes/__init__.py", line 452, in recognize_intent
    handle_intent(), messages, message_types
  File "/usr/lib/rhasspy/lib/python3.7/site-packages/rhasspyserver_hermes/__init__.py", line 898, in publish_wait
    result_awaitable, timeout=timeout_seconds
  File "/usr/lib/python3.7/asyncio/tasks.py", line 449, in wait_for
    raise futures.TimeoutError()
concurrent.futures._base.TimeoutError
[DEBUG:2020-07-27 11:49:00,023] rhasspyserver_hermes: Publishing 20 bytes(s) to rhasspy/handle/toggleOn
[DEBUG:2020-07-27 11:49:00,022] rhasspyserver_hermes: -> HandleToggleOn(site_id='testpi')
[DEBUG:2020-07-27 11:48:30,019] rhasspyserver_hermes: Publishing 194 bytes(s) to hermes/nlu/query
[DEBUG:2020-07-27 11:48:30,019] rhasspyserver_hermes: -> NluQuery(input='wie spät ist es', site_id='testpi', id='cac912f7-fdee-4b34-b65d-0d6f7e0eafc4', intent_filter=None, session_id='cac912f7-fdee-4b34-b65d-0d6f7e0eafc4', wakeword_id=None)
[DEBUG:2020-07-27 11:48:30,017] rhasspyserver_hermes: Publishing 20 bytes(s) to rhasspy/handle/toggleOff
[DEBUG:2020-07-27 11:48:30,017] rhasspyserver_hermes: -> HandleToggleOff(site_id='testpi')
```
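Reading the log from the bottom up, the `NluQuery` for "wie spät ist es" is published to `hermes/nlu/query` at 11:48:30,019 and the `TimeoutError` is raised in `publish_wait` at 11:49:00,023. That suggests the server waited roughly 30 seconds for an NLU answer that never arrived. A minimal Python sketch that checks the gap from the two log timestamps (the timestamps are copied from the log; the helper `elapsed_seconds` is hypothetical, just for illustration):

```python
from datetime import datetime

# Timestamps copied from the Rhasspy log above (format: YYYY-MM-DD HH:MM:SS,mmm).
QUERY_SENT = "2020-07-27 11:48:30,019"      # NluQuery published to hermes/nlu/query
TIMEOUT_RAISED = "2020-07-27 11:49:00,023"  # TimeoutError logged by rhasspyserver_hermes

# %f accepts 1-6 digits and pads on the right, so ",019" parses as 0.019 s.
LOG_TIME_FORMAT = "%Y-%m-%d %H:%M:%S,%f"


def elapsed_seconds(start: str, end: str) -> float:
    """Return the number of seconds between two Rhasspy log timestamps."""
    t0 = datetime.strptime(start, LOG_TIME_FORMAT)
    t1 = datetime.strptime(end, LOG_TIME_FORMAT)
    return (t1 - t0).total_seconds()


if __name__ == "__main__":
    gap = elapsed_seconds(QUERY_SENT, TIMEOUT_RAISED)
    print(f"NLU query went unanswered for {gap:.3f} s")
```

A gap this close to a round 30 s points at a fixed wait timeout rather than a slow recognizer, so it is worth checking whether the Snips NLU service is actually running and subscribed to the MQTT broker.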

I want to test whether Snips recognizes words that are not predefined.

For example:

Add water to shopping list

Here the word "water" would be flexible rather than predetermined.
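For reference, the standalone `snips-nlu` library handles this case with entities marked `automatically_extensible`, which allows slot values outside the listed examples to be extracted. Below is a minimal sketch of such a dataset in the snips-nlu JSON format, built as a Python dict. The intent name `AddToShoppingList`, the entity `item`, and the sample utterances are all hypothetical. Whether Rhasspy 2.5 sets this flag when it converts its own sentences to a Snips dataset is an assumption that is not confirmed here.

```python
import json

# Hypothetical Snips NLU dataset for the "shopping list" example.
# "item" is marked automatically_extensible, so values not listed below
# (e.g. "water") may still be extracted as the slot value.
dataset = {
    "language": "en",
    "intents": {
        "AddToShoppingList": {
            "utterances": [
                {
                    "data": [
                        {"text": "add "},
                        {"text": "milk", "slot_name": "item", "entity": "item"},
                        {"text": " to shopping list"},
                    ]
                },
                {
                    "data": [
                        {"text": "put "},
                        {"text": "bread", "slot_name": "item", "entity": "item"},
                        {"text": " on the shopping list"},
                    ]
                },
            ]
        }
    },
    "entities": {
        "item": {
            "data": [
                {"value": "milk", "synonyms": []},
                {"value": "bread", "synonyms": []},
            ],
            "use_synonyms": True,
            "automatically_extensible": True,  # accept unseen slot values
            "matching_strictness": 1.0,
        }
    },
}

if __name__ == "__main__":
    # Print the dataset as JSON, e.g. to save it for training with snips-nlu.
    print(json.dumps(dataset, indent=2))
```

With the `snips-nlu` package and its English resources installed, a dataset like this can be fitted with `SnipsNLUEngine(config=CONFIG_EN).fit(dataset)` and tested with `engine.parse("add water to shopping list")`. With only two training utterances, extraction of "water" is possible but not guaranteed.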

I already opened an issue for this:

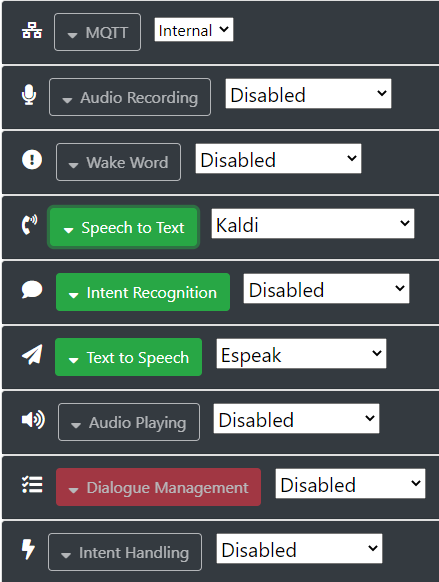

I see you’re using Kaldi for STT. It should support