vajdum,

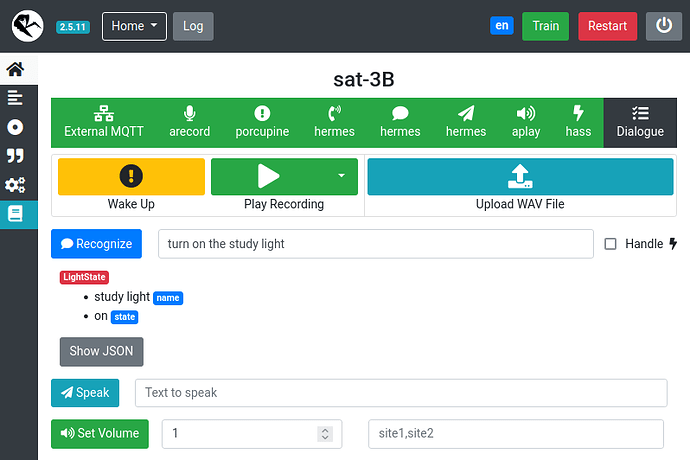

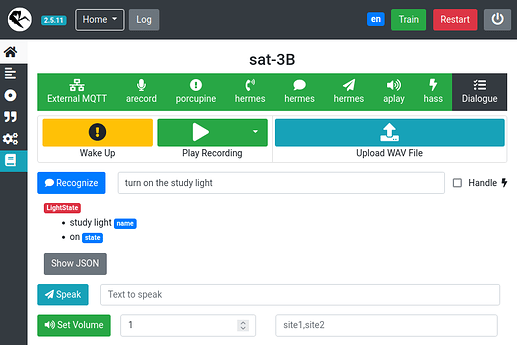

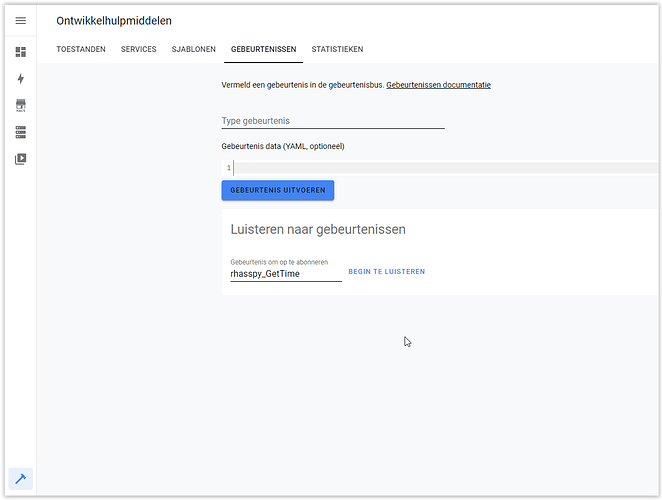

If you have the Satellite and Base recognising the intent … when the words “Porcupine, turn on the study light” are spoken, Rhasspy determines that the “LightState” intent is to be called with slot “name” having a value of “study light” and slot “state” having a value of “on” … as seen here:

… the next step is to get Rhasspy & Home Assistant to action it.

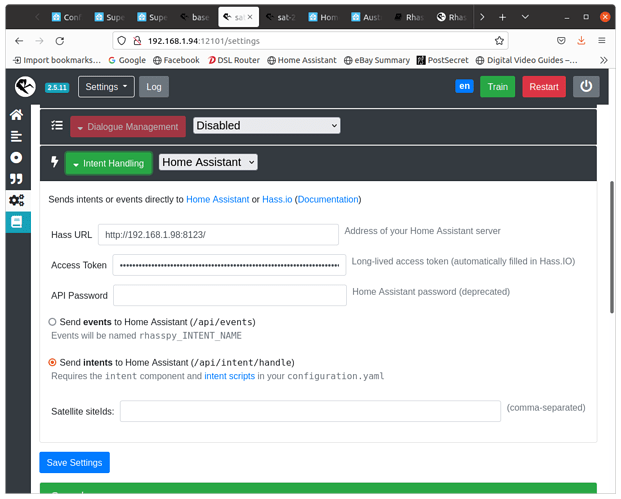

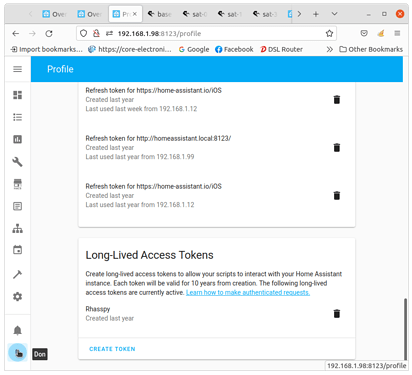

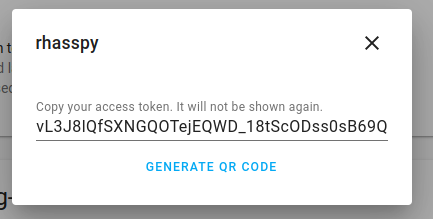

You may need to use a Long-lived Access Token. This is created at the very bottom of your profile page in Home Assistant.

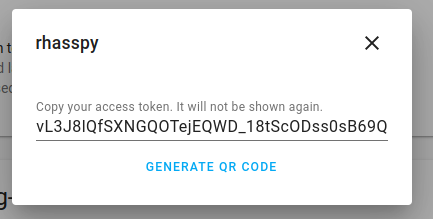

Click [Create Token], give it a name (I called mine “Rhasspy”), and a long sequence of characters (well beyond the left and right sides of the window) will be shown.

Click your mouse anywhere in the line of code, press the [Home] key to move the cursor to the start of the code, press [Shift-End] to highlight to the end of the code, and [Ctrl-C] to copy the code to clipboard.

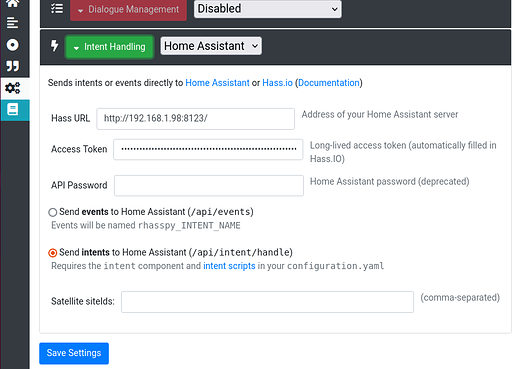

In Rhasspy on the Satellite, set [Intent Handling] to “Home Assistant” and click [Save]. Then enter the URL for Home Assistant: the “http://” protocol, the hostname or IP address of the Home Assistant machine, and the port number :8123 (something like http://192.168.1.10:8123, with your own address substituted).

In the browser window showing your Satellite, paste the long token you copied above into the “Access Token” field and click [Save Settings].

I am using intents in HA, so I selected the “send intents”… option.

There, that should send your intents to Home Assistant.

Now to Home Assistant, where we will add the intents to configuration.yaml so that Home Assistant will action them.

There are several ways to edit the .yaml files; I have installed the “File editor” Add-on from Configuration > Add-ons.

I have added into the configuration.yaml page:

    intent:
    intent_script: !include intents.yaml

The keywords “intent:” and “intent_script:” are required. In my case I have chosen to place the actual intents in a separate file to make them easier to edit; but you can simply list the intents directly after “intent_script:”.
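For anyone who prefers the single-file layout, a minimal sketch of configuration.yaml with an intent listed inline might look like the following. It uses a simple GetTime intent as the example and assumes the `!include intents.yaml` line is not present, since you would use one form or the other, not both:

```yaml
# configuration.yaml - intents listed inline instead of via !include
intent:

intent_script:
  # Each intent name sits one level below intent_script:
  GetTime:
    speech:
      text: The current time is {{ now().strftime("%H %M") }}
```

The only difference from the separate-file approach is the extra level of indentation: everything that would sit at the left margin of intents.yaml moves underneath “intent_script:”.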

In my new intents.yaml file I have:

    #
    # intents.yaml - the actions to be performed by Rhasspy voice commands
    #
    GetTime:
      speech:
        text: The current time is {{ now().strftime("%H %M") }}
    LightState:
      speech:
        text: Turning {{ name }} {{ state }}
      action:
        - service: light.turn_{{ state }}
          target:
            entity_id: light.{{ name | replace(" ","_") }}

And that’s it!

When the words “Porcupine, turn on the study light” are spoken, Rhasspy determines that the “LightState” intent is to be called with slot “name” having a value of “study light” and slot “state” having a value of “on” … as seen in the first image above.

This intent is passed (as JSON) to Home Assistant, where the intent name is looked up in the configuration.yaml (or intents.yaml) file.

Intent name “LightState” matches with “LightState:”, and parameters are substituted, so that Home Assistant effectively runs:

    LightState:
      speech:
        text: Turning study light on
      action:
        - service: light.turn_on
          target:
            entity_id: light.study_light
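If the light does not respond, one way to narrow things down is to run the same service call by hand. As a sketch, assuming your Home Assistant version offers YAML mode under Developer Tools > Services and that your light's entity ID really is light.study_light (substitute your own), you could paste in:

```yaml
# The same call that the LightState intent ends up making
service: light.turn_on
target:
  entity_id: light.study_light
```

If this works but the voice command does not, the problem is more likely in the intent or slot names than in the light itself.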