This is awesome! Can it wiggle its ears differently depending on the intent?

Thanks for your feedback. I really appreciate it.

For now, it only moves the ears at the end of a TTS session. I have written a small python script that subscribes to a mqtt topic and trigger ears moves on messages received.

Curently it is triggered by the message “hermes/tts/sayFinished” and play predefined animation. Obviously, this part needs to be improved.

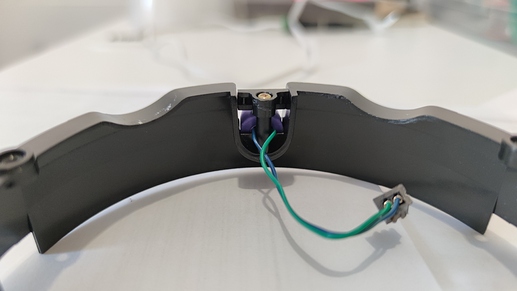

The ears are driven by simple DC motors and cannot be precisely oriented like with stepper motors. I only can move them forward or backward for a certain amount of time. However there is an encoder for each ear that can signal ear position. I don’t use them at the moment but this a good way to improve the precision of the movements.

As I said this is not a finished project and I have a lot to do with this guy !

Keep on going ! I dream I could wake my 2 nabaztag in near future.

Presume the current mic is what looks like an electret central near the base.

If you jump to electret microphone in the above usually you just wire as in the ‘simple schematic’ as the soundcard has the bias resistor and decoupling onboard.

If that doesn’t work or is very noisy do as said and add a capacitor and 2.2k resistor as shown.

Not sure of the Nabaztag wiring but at a guess it will just be a standard lone electret and the resistor and cap are now not included as where onboard some simple board as the decoupling cap usually is placed as close to the input of the preamp circuit.

If the electret isn’t marked the negative will be connected to the metal body of the electret.

The el cheapo usb sound card is with a lsusb

Bus 001 Device 005: ID 1b3f:2008 Generalplus Technology Inc.

SpeexDsp is the wrong version on raspi so its missing from alsa-plugins.

Follow Asound.conf AGC & Denoise

Usual errors in there where forgot to tell you to CD to correct dir on some steps and also if 64bit its.

./configure --libdir=/usr/lib/aarch64-linux-gnu/

But just shout if any probs.

This is just a electret directional like one you posted no hardware agc set to 100% in alsamixer.

https://drive.google.com/open?id=19OLdkmnCRwkmBxPrnGFZM02Ips3P2cp9

Then with a /etc/asound.conf

pcm.agc {

type speex

slave.pcm "plughw:2"

agc 1

agc_level 8000

denoise yes

dereverb no

}

The usb sound card is card index 2 so hence the above, denoise is on there just set to no, dereverb I think was never completed.

Here is AGC no denoise

Here is AGC with denoise

When there is no voice the gain will ramp up to the max and normalise hiss and should ramp down on voice and less hiss.

As said put a preamp on and AGC has to do much less work and SNR will become less.

$0.20 electret (not $20!) on a $1.50 usb sound card it aint that bad. Really gain should never be 100% but the gain is quite low maybe should of been 75% and rely on AGC, but 100% so you can hear without AGC.

When I find where my decibel meter actually is I will post distances and levels.

3.5mm Tip & 1st ring shorted connected to electret positive 3.5mm collar connected electret negative.

No resistor or cap needed.

Here is one of the analogue mems with 3.3v to module just 3.5mm tip as out and using the soundcard as gnd, so the 1st ring of the bias is no longer in use.

This is without AGC dropped gain down to 18db but as said a electret on a preamp will give similar but you get directional noise reduction (with the right electret) it will help whilst mems tend to be less sensitive to vibration.

https://drive.google.com/open?id=17IwTGupeoCB6w_7hMSdTna4-KX3H-7K0

Didn’t spend much time playing with hardware gain likely prob could do with dropping slightly as the AGC will amplify a cleaner signal.

Just test and set with alsamixer and then sudo alsactl store so its persistent

Definitely, I need to try your AGC solution. I am going to burn a new SD to try that out.

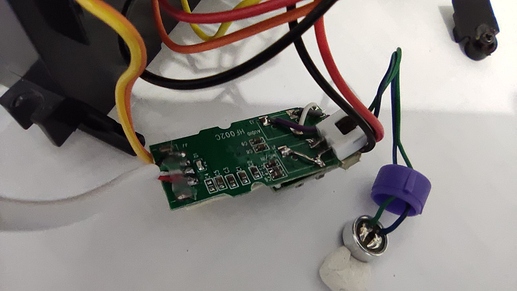

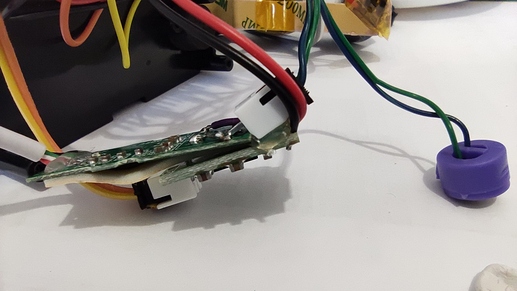

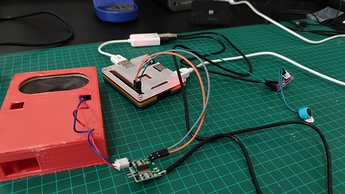

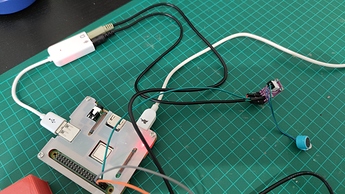

Here some pictures of the current audio setup.

The soundcard is this one, and the amp for the output this one.

Electret and speaker come from the Nabaztag and I don’t know the specs.

Yeah there is a Nabaztag on ebay for £25 which you even got me thinking of purchasing

Current electret looks like a standard omnidirectional rather than directional and the x10 for $2.36 ones are on the left and they are OK but what I have been trying to do is find some on the right but failing as they are supercardinoid with far more rear rejection (which just seems more holes).

The vibration of the ears moving is likely to resonate all through that case, same with the speaker.

The Google & Amazon pucks are really clever designs as they do an amazing job of isolating there mic arrays from internal sound and is a primary part of their design.

The 9.7mm electrets for me are about as small as I can solder but you can get extremely tiny ones, but gave up on actually managing to hand solder.

I was using a short length 15mm OD/ 13mm ID acrylic tube with a 13.5mm / 8mm grommet.

Not brilliant but just easy and cheap to make and a OK assimilation of a mic shock mount. Electret presses into grommet and grommet presses into a short length of tube.

Suggest you do get an electret and a Max9812 board from somewhere as you don’t need a SSM2167 but it will add a noise gate and compressor.

My bad as there are 2 types of sound card mono are mic in and stereo are line in and the SSM2167 comes in handy to give extra gain for stereo line in cards but not needed for the mono bias level cards.

You can still gain from the SSM2167 as you could prob further dial sound card gain down to 0db and lower the AGC level whilst still maintaining good levels of far field with less noise.

Looks like it could be quite easy to provide better mic isolation with minimal work on the components you have.

Amplifier being an audio snob I hate those mini amps and speakers but they do a job and sure its fit for purpose.

If you get a lot of hiss try putting a series capacitor on the output of you soundcard but prob will work well without.

Are you involved with Violet @Pep as the ears are cute but it did occur we do have DOA algs in the form of ODAS as with a cheap slip ring maybe you could rotate 2x electret directional ears rather than just waggle or hard wire with limited traverse.

Also maybe you could cut a deal with https://partners.andreaelectronics.com/andrea-audio-software-for-raspberry-pi-os/ as have a hunch their USB audio adapter is one of the Syba ($15) stereo line in ones I have been using (I have the software still have to test that one).

As beamforming solutions for the Pi do exist sadly just not opensource ones.

Also I think you can have a base mount speaker as I did with some puck ideas and use a much better amp and speaker and maybe play with the idea of https://www.tectonicaudiolabs.com/.

The mounting screws for the speaker I had much longer than needed so they acted as pillars to give a 15mm gap to the surface the puck (which was PVC water tube) rests and sounded pretty awesome.

Your sound card look like the exact same but from experience you can never be sure with the truly wonderful offerings from China but lsusb will report as 1b3f:2008 Generalplus Technology Inc.

I actually think they are better than the CM108 ones now even if unsure what the silicon is.

Loving your Nabaztag as truly hillarious and likely a just a great toy for kids but also could make an exceptionally good educational one as well.

AEC via https://voicen.io/audio_processing/aec/ or the C version I use will cancel the speaker extremely well and produces load only when media is playing.

I will knock you up a 32bit image as presume your not using the new 64bit just yet edit and post here.

One liner to reinstall alsa-plugins and updating speex/speexdsp.

curl -sL https://raw.githubusercontent.com/StuartIanNaylor/Alsa-plugins-speex-update/main/Alsa-plugins-speex-update.sh | sudo -E bash -

One liner to install ec and alsa-plugins-fifo

To install ec & alsa_plugin_fifo

curl -sL https://raw.githubusercontent.com/StuartIanNaylor/Alsa-plugins-speex-update/main/install-ec-fifo.sh | sudo -E bash -

To load the loopback on boot

echo 'snd-aloop' | sudo tee -a /etc/modules

This is optional but as in the readme on https://github.com/StuartIanNaylor/Alsa-plugins-speex-update

You can change the name of your card so that it is unique and recognised.

lsusb and get the vendor and product ids. On my Pi it returns

Bus 001 Device 005: ID 1b3f:2008 Generalplus Technology Inc.

Bus 001 Device 004: ID 248a:00da Maxxter

Bus 001 Device 003: ID 0424:ec00 Standard Microsystems Corp. SMSC9512/9514 Fast Ethernet Adapter

Bus 001 Device 002: ID 0424:9514 Standard Microsystems Corp. SMC9514 Hub

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

echo 'SUBSYSTEM!="sound", GOTO="my_usb_audio_end"' | sudo tee -a /etc/udev/rules.d/70-alsa-permanent.rules

echo 'ACTION!="add", GOTO="my_usb_audio_end"' | sudo tee -a /etc/udev/rules.d/70-alsa-permanent.rules

echo 'ATTRS{idVendor}=="1b3f", ATTRS{idProduct}=="2008", ATTR{id}="VOICE"' | sudo tee -a /etc/udev/rules.d/70-alsa-permanent.rules

My usb sound is the Generalplus Technology Inc. so 1b3f:2008 is used, just unplug and run lsusb once more to see if it dissapears if unsure.

Now when you reboot we should have a loopback device and renamed ‘VOICE’ soundcard.

So it should work with this /etc/asound.conf but this one liner will save it for you

sudo curl -o /etc/asound.conf https://raw.githubusercontent.com/StuartIanNaylor/Alsa-plugins-speex-update/main/asound.conf

pcm.!default {

type asym

playback.pcm "eci"

capture.pcm "agc"

}

pcm.eci {

type plug

slave {

format S16_LE

rate 16000

channels 1

pcm {

type file

slave.pcm null

file "/tmp/ec.input"

format "raw"

}

}

}

pcm.eco {

type plug

slave.pcm {

type fifo

infile "/tmp/ec.output"

rate 16000

format S16_LE

channels 2

}

}

pcm.agc {

type speex

slave.pcm "plughw:CARD=Loopback,DEV=1"

agc 1

agc_level 8000

denoise no

dereverb no

}

The asym sets the default so you can just use aplay & arecord without specifying a device but first we need to make ec run on boot.

Then last thing is to start ec on login via the .profile script in your $HOME dir.

echo './ec/ec -i plughw:VOICE -o plughw:VOICE -d 20 &' >> .profile

echo 'sleep 10' >> .profile

echo 'arecord -Deco -r16000 -fS16_LE -c1 | aplay -Dplughw:CARD=Loopback,DEV=0 &' >> .profile

Now on reboot because the defaults are set aplay & arecord without need for specifying a device should work.

You might want to be more sophisticated and create a service apols for my lazyness as .profile will run with each logon of the user.

Not needed but play & record devices if needed are eci & agc.

You might need to alsamixer and drop the gain considerably on the soundcard as the input from a powered module will be way too high.

Strangely don’t have a running desktop version of linux currently, so no image but the above should be pretty easy.

You might be wondering why the loopback and pipe but if you record from the eco and then stop, ec stops running so it just gets piped to the loopback so it remains persistent on multiple records.

Ec runs with a default delay of -d 20 which can differ with different setups.

After installing ec there is a util folder and follow Software EC with voice-engine / ec to find out what the latency and delay should be and edit the ./ec/ec -i plughw:VOICE -o plughw:VOICE -d 20 line in .profile.

To test arecord -r16000 -fS16_LE ec-rec.wav should give a result something like this.

https://drive.google.com/open?id=1fpDkMkJUM66IDDTNKMzr8aJFWYu1DK7q

Really after reinstalling alsa-plugins the speex echo should work and EC would not be needed but I found the results really terrible.

If someone want to try it would be a similar setup to the AGC setting but something like

echo 1

frames 10

filter_length 256

maybe?

With AGC agc_level 8000 sets the max gain but any input will normalise so this can set your furthest field but higher values will contain more noise. Lesser will give less gain but less noise and 4000 might be preferable.

Also slightly confused about the VAD as presumed it was similar or https://github.com/wiseman/py-webrtcvad as its not just a RMS detector and should as VAD classifies a piece of audio data as being voiced or unvoiced.

So really it should be clever enough to ignore noise that is not of voice spectra, does it allow you to set the aggressiveness of the voice filter vad.set_mode(1) as in the above (1-3 3=most aggressive)

Maybe on a Pi3A there is enough load space for something a little more clever https://github.com/pyannote/pyannote-audio as quite a few relatively lightweight VAD/SAD (Voice/Speech) work via a neural net.

PyTorch seems to be a really fast light weight framework and wish we had a KWS built with it as Precise seems to be very heavy and not very precise for what it does.

Maybe https://github.com/castorini/honk

My sound card is exactly the same as yours. I’ll try to catch a MAX9812 board for my experiments.

The noise inside the case doesn’t come from the motors as I don’t activate them during listening session. The problem is more the “electronic” noise that come from the RPI (like capacitor/inductor whining) but also kind of a white noise coming from the HP.

I finally have some good results with some foam behind the electret. The sound is cleaner and I don’t have problems of silence detection if I speak loud enough and don’t start before rhasspy starts listening. WIth no noise around, I can be understood from 3/4m far away. Pretty the same with my other satellite using respeaker 2-mics.

I think this is good enough to try the Nabazag in my living room. However, I want to try your setup with Speex and EC. I have done your installation on another SD and get good result with AGC but no luck with EC. And I’m struggling in using this configuration with Rhasspy. I’m not confortable with asound.conf file and need to spend more time on this topic. But thanks for all your explanation, this teaches me a lot.

@fastjack Mathieu does rhasspy default to using the defined defaults in asound.conf?

@Pep the pcms are chained and the AGC runs after EC so if AGC is running so is EC as it takes its output from the eco pcm.

The only thing that might happen is that audio is not being played on the eci pcm so its not being cancelled.

So its a hey community where do I specify the record and playback pcms and does it use the alsa defaults as default?

No one is comfortable with /etc/asound.conf which is system wide or $HOME/.asoundrc same thing but the user override.

https://www.alsa-project.org/wiki/Asoundrc to confuse me and you even more.

The first part just sets the alsa defaults for capture and playback so if nothing is declared those defaults will be used. Asym just allows you to set both playback & capture could even be 2 different cards instead of specifying a single card index.

pcm.!default {

type asym

playback.pcm "eci"

capture.pcm "agc"

}

The confusing bit is some KWS record in segments and create multiple recordings which EC ends for some reason when each record comes to an end, which is a bit useless.

So I cheat even if it does add a bit of confusion but

arecord -Deco -r16000 -fS16_LE -c1 | aplay -Dplughw:CARD=Loopback,DEV=0

Does a single constant record from pcm eco and plays it back on sink side of a loopback adapter. That keeps ec running as its always recording whilst a program can grab it from the loopback. I should of added the subdevice ‘channel’ but we are only using 1 so it will default to the 1st.

So the AGC input in the above if it wasn’t for that quirk would have the line slave.pcm "eco" rather than slave.pcm "plughw:CARD=Loopback,DEV=1" which is the source side of the loopback.

Loopbacks are just handy as you can just aplay to a loopback adapter so you can use another program to use that audio as a standard source.

If I do an aplay -l it will list my alsa devices.

aplay -l

**** List of PLAYBACK Hardware Devices ****

card 0: Loopback [Loopback], device 0: Loopback PCM [Loopback PCM]

Subdevices: 8/8

Subdevice #0: subdevice #0

Subdevice #1: subdevice #1

Subdevice #2: subdevice #2

Subdevice #3: subdevice #3

Subdevice #4: subdevice #4

Subdevice #5: subdevice #5

Subdevice #6: subdevice #6

Subdevice #7: subdevice #7

card 0: Loopback [Loopback], device 1: Loopback PCM [Loopback PCM]

Subdevices: 8/8

Subdevice #0: subdevice #0

Subdevice #1: subdevice #1

Subdevice #2: subdevice #2

Subdevice #3: subdevice #3

Subdevice #4: subdevice #4

Subdevice #5: subdevice #5

Subdevice #6: subdevice #6

Subdevice #7: subdevice #7

card 1: b1 [bcm2835 HDMI 1], device 0: bcm2835 HDMI 1 [bcm2835 HDMI 1]

Subdevices: 4/4

Subdevice #0: subdevice #0

Subdevice #1: subdevice #1

Subdevice #2: subdevice #2

Subdevice #3: subdevice #3

card 2: Headphones [bcm2835 Headphones], device 0: bcm2835 Headphones [bcm2835 H eadphones]

Subdevices: 4/4

Subdevice #0: subdevice #0

Subdevice #1: subdevice #1

Subdevice #2: subdevice #2

Subdevice #3: subdevice #3

card 3: VOICE [USB Audio Device], device 0: USB Audio [USB Audio]

Subdevices: 1/1

Subdevice #0: subdevice #0

https://sysplay.in/blog/tag/snd-aloop-driver/ each loopback has 8 subdevices and think its 8 loopback devices max so you can make up 64 virtual device redirections if you so wanted and are quite handy for that so hence why I used.

I think the kernel is set to 8 devices max can be increased but that does limit.

Really you can ignore the loopback and think of the current setup just pulling from the eco pcm because of arecord -Deco -r16000 -fS16_LE -c1 | aplay -Dplughw:CARD=Loopback,DEV=0 & just pipes it into the loopback.

Also thinking about it to be slightly more bullet proof I should of made a script called start-ec.sh or something and included the lines currently in .profile and just called the script in .profile with a nohup

nohup sh start-ec.sh & https://www.maketecheasier.com/nohup-and-uses/ also nohup.out would be a bit of an ec minilog for you.

Defining a service would prob be better but ctrl+c will kill all foreground processes ec included I guess unless a service or nohup is used

start-ec.sh would just be

./ec/ec -i plughw:VOICE -o plughw:VOICE -d 20 &

sleep 10

arecord -Deco -r16000 -fS16_LE -c1 | aplay -Dplughw:CARD=Loopback,DEV=0 &

The only 2 other pcms defined are eci & eco which are purely 2x file fifo (buffer) in /tmp so in ram so when you run ./ec/ec -i plughw:VOICE -o plughw:VOICE -d 20 & ec takes control of that input and output (source/sink).

eci is just a feed fifo (buffer) so it knows what needs to be cancelled and is just forwarded to the -o output defined.

It applies that to the -i input and provides the results on eco.

If you get a vanilla image and run through the one liners the install is pretty quick so Rhasspy is not adding any confusion.

Just skip the steps to add the lines to .profile so on boot ec is not running.

Grab my usual test file wget https://file-examples-com.github.io/uploads/2017/11/file_example_WAV_10MG.wav

Open up 2x SSH consoles

arecord -Dplughw:VOICE -r16000 -fS16_LE -c1 rec.wav

Switch to the other console

aplay -Dplughw:VOICE file_example_WAV_10MG.wav run till end whilst talking then switch back ctrl+c to stop recording.

Play that back in https://www.audacityteam.org/ so you get a good reference of how much echo/feedback the media is providing and how much your voice is flooded.

Open another ssh console run ./ec/ec -i plughw:VOICE -o plughw:VOICE -d 20

Switch to the record console change to output of ec and change the record file name so you keep both for reference.

arecord -Deco -r16000 -fS16_LE -c1 ec-rec.wav

Switch to the 3rd console and this time play to the ec input.

aplay -Deci file_example_WAV_10MG.wav talk your stuff till end and again switch back to the record crtl+c to stop recording.

All these ctrl+c made me think I should of above used nohup but here manually each is in a separate ssh foreground.

Fire up Audacity again and give the results a compare.

Also for ssh I use https://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html and file sftp https://winscp.net/eng/index.php

The denoise via speexdsp as said does give audio artefacts never used Sox denoise and it might be better as you record a profile to subtract from your input.

I think the Sox play command can use all the same functions as sox but only plays on the default device.

The AUDIODEV environment variable can be used to override the default audio device, e.g.

set AUDIODEV=/dev/dsp2

play …

sox … -t oss

or

set AUDIODEV=hw:soundwave,1,2

play …

sox … -t alsa

I have never used Sox but was just thinking its also probable you can play to a loopback and include that in your audio processing chain.

I am not sure if the above will also change the alsa default which could cause problems if devices are not specified (Sox can you not specify a device !?!).

Generally denoise is a spectrogram no no as it can reduce recognition unless your model was recorded with it.

Also because AGC is dynamic your noise level is always changing depending on the signal strength the mic is receiving, so not sure how you pick a noise profile, but maybe worth a try.

The noise between words doesn’t matter as recognition will just not recognise it and when words are spoken the gain and subsequent noise is dropped.

So it can sound like shit for a broadcast mic but actually be really good for recognition, but also you can drop the agc_level.

The VAD should be able to differentiate between noise and voice unless its just a very simple RMS detector.

I’m back to my project and still struggling with the Rhasspy use of the AGC. Everything works fine outside the docker container and I get good results with rolyan’s configuration.

I think I need to modify my docker command because I can’t use speex with rhasspy. When I try arecord inside the container (docker exec -it rhasspy /bin/bash) I get this error :

arecord -f S16_LE -Dagc > test.wav

gives me :

ALSA lib dlmisc.c:285:(snd1_dlobj_cache_get) Cannot open shared library /usr/lib/arm-linux-gnueabihf/alsa-lib/libasound_module_pcm_speex.so ((null): /usr/lib/arm-linux-gnueabihf/alsa-lib/libasound_module_pcm_speex.so: cannot open shared object file: No such file or directory)

arecord: main:828: audio open error: No such device or address

With Rhasspy, I can use arecord without PulseAudio but not with.

The docker command I use :

docker run -d -p 12101:12101

–name rhasspy

–restart unless-stopped

-v “$HOME/.config/rhasspy/profiles:/profiles”

-v “/etc/localtime:/etc/localtime:ro”

-v “/etc/asound.conf:/etc/asound.conf”

–ipc=“host”

–device /dev/snd:/dev/snd

rhasspy/rhasspy

–user-profiles /profiles

–profile fr

and my asound.conf :

pcm.!default {

type plug

slave.pcm “mix”

}pcm.mix {

type dmix

ipc_key 1024

ipc_perm 0666

slave {

pcm “hw:1,0”

period_time 0

period_size 1024

buffer_size 4096

rate 48000

format S24_3LE

}

bindings {

0 0

1 1

}

}pcm.squeezelite {

type plug

slave.pcm “squeezelite_vol”

}pcm.squeezelite_vol {

type softvol

slave.pcm “mix”

control {

name “Squeezelite”

card 1

}

}pcm.rhasspy {

type plug

slave.pcm “rhasspy_vol”

}pcm.rhasspy_vol {

type softvol

slave.pcm “mix”

control {

name “Rhasspy”

card 1

}

}pcm.agc {

type speex

slave.pcm “plughw:CARD=Device,DEV=0”

agc 1

agc_level 8000

denoise yes

dereverb no

}

Everything is fine with aplay.

Does somebody have an idea ?

Yeah you will have to connect to the container and install it pep.

docker exec -it rhasspy /bin/bash

If you do a

sudo apt-get install libspeex1 libspeex-dev libspeexdsp1 libspeexdsp-dev libasound2-plugins

That should install and give it a try.

Due to raspbian having an old version of speex-dsp I think you will find alsa-plugins has skipped some of the speexplugins so we have to grab the latest and recompile and install.

To save me the hassle I did a one liner

curl -sL https://raw.githubusercontent.com/StuartIanNaylor/Alsa-plugins-speex-update/main/Alsa-plugins-speex-update.sh | sudo -E bash -

If you might want to drop the AGC level but don’t worry about high gain noise when there is no input.

Or you can do it via ffmpeg and a loopback adapter and use the loopback as your source.

sudo modprobe snd_aloop

ffmpeg -thread_queue_size 1024 -ac 1 -f alsa -i plughw:1 -filter:a dynaudnorm=f=200:g=3 -f alsa plughw:0

the soundcard is plughw:1 and loopback plughw:0 in the above example.

You just have to start ffmpeg in the host via a service or .profile or something on boot.

Ok. So, I did it the wrong way. I need to make the installation inside the container.

Thanks for the explanation  and sorry for the stupid question…

and sorry for the stupid question…

Nah containers always sucker me that way.

The ffmpeg you can do on the host as it passes back via the loopback.

You could do something similar with speex by piping an arecord to a loopback.

arecord plughw:1 | aplay plughw:0 would pipe the audio so that you could use the loopback on plughw:0 as a source.

Finally, I installed Rhasspy as a debian package. Too much troubles with Docker.

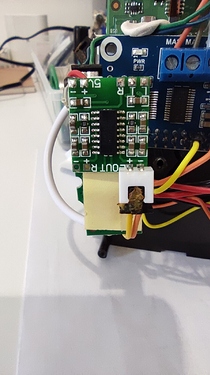

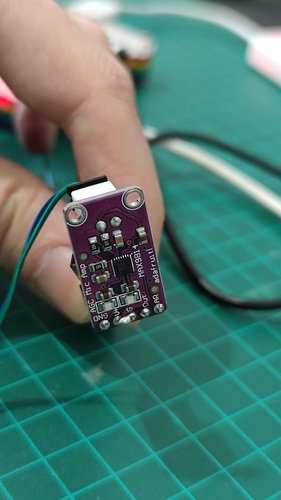

I use the MAX9814 card and a unidirectional electret and this really improved the setup. My satellite doesn’t hang on listening anymore. That was a really good suggestion, thanks @rolyan_trauts !

Here are some pictures

I made a bridge between Vdd and Gain to select 40dB as you suggested in another post.

My Nabaztag is now much more responsive as you can see in this video !

I await the modifications with 2x unidirectional as Nabaztag beamforms with his ears!

PS did you get speex aec working or was it too mauch hassle?

I might do an automated install if any use?

I only use speex for AGC because my main concern was noise and gain settings.

I plan to use the Nabaztag as a radio set, so AEC could be usefull. I didn’t try so much making it work because of my container problem. I might give it another try. I don’t need a automated install, just some time to make it work.  .

.

Thanks for the proposal.

No probs do and my memory is terrible as yeah AGC.

Give the ffmpeg dynaudnorm a go as rather than AGC there is also a compander via FFmpeg or Sox.

Not that sure about the compander as with dynamic volumes with fixed points its a head scratcher how to set but it can also be another method of AGC.

Both Sox and FFmpeg have a rake of filters that might be of interest and playing to the sink side of a loopback so it becomes an alsa source as above works with both.

@JGKK sent me this and haven’t tried it yet but its the sox compander command line.

sox -q -t alsa plughw:1,0 -L -e signed-integer -c 1 -r 16000 -b 16 -t alsa hw:Loopback,0,0 compand .1,.3 -inf,-50.1,-inf,-50,-50,-20,-10,0,-10 -10 -60

AEC is only to cancel playing media to allow ‘barge-in’ so may not be needed but when in conjunction with a unidirectional the effects sum and provide pretty decent results.

Hi @Pep,

did you finish your work with your Nabaztag?

I currently do the same. I have several Nabaztag around in my house.

Other than you, I want to keep the old behavior of the Rabbits.

So until now I plan to insert a Rock Pi S.

Are you still happy with your Microphone setup?

I followed the recommendation of @rolyan_trauts and bought a cheap USB Sound card and some MAX9814 Microphone APM’s.

In my tests Mic tests I have too much gain I guess, because my voice sounds “over tuned”.

Additional to that, I have some “white noise” also in there.

As I saw in your pictures, you bridged Vdd and Gain. I did something similar, I give 3.3v on Vdd and 3.3v on gain, but I did not make a bridge between them. Does that make a difference?

Sorry for asking stupid questions, but my knowledge in electronic is very old and rusty.

Yes, my Nabaztags are finished (until the next mod…). I have 3 Nabaztags that my family and I use every day. It’s not perfect but does the job.

My mic setup works correctly. It’s near perfect in bedrooms and I use it (sometimes with some difficulties) in living room (with kitchen).

I need to stop the air extractor of the kitchen before trigger my rhasspy but music is not a problem.

The bridge is necessary to choose the gain.

The attack/delay time wants to be max and gain wants to be lowest as into a soundcard that is a lot of gain.

If you have gain high you will just get too much SNR

I have been playing with KWS of late and it is possible to get extremely tolerant KWS as I sit with a fan heater at 40db behind me.

I have tested my own custom models with the google streaming_kws with 70db noise and KW is still recognised.

The ASR though is likely recorded clean and prone to noise problems so likely would not much difference as kw would be detected but the command sentence likely not.

Prob if a dirty dataset was fed the overall WER would drop but noise resilience would rise.

You would have to ask @synesthesiam to create noisy models & clean so you have choice.