Yeah, there is a Nabaztag on eBay for £25, and you have even got me thinking of purchasing it.

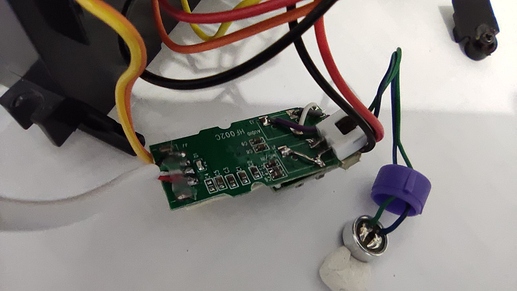

The current electret looks like a standard omnidirectional one rather than a directional one. The x10 for $2.36 ones are on the left and they are OK, but I have been trying, and failing, to find some like the ones on the right, as they are supercardioid with far more rear rejection (which just seems to mean more holes).

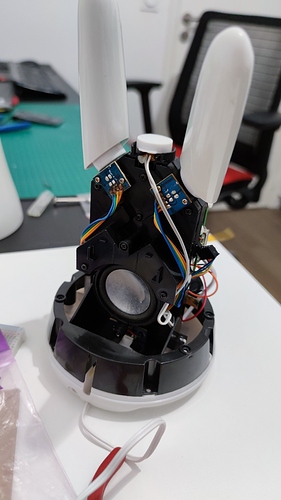

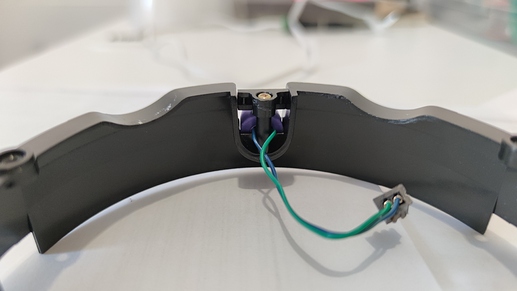

The vibration of the ears moving is likely to resonate all through that case, same with the speaker.

The Google & Amazon pucks are really clever designs, as they do an amazing job of isolating their mic arrays from internal sound, and that isolation is a primary part of their design.

The 9.7mm electrets are about as small as I can solder. You can get extremely tiny ones, but I gave up on actually managing to hand solder them.

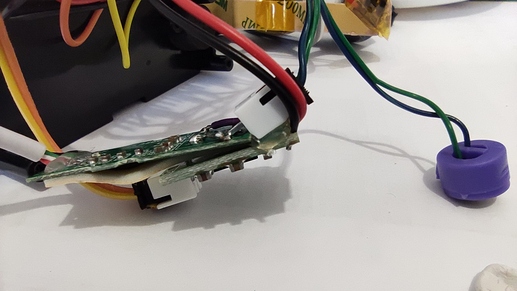

I was using a short length of 15mm OD / 13mm ID acrylic tube with a 13.5mm / 8mm grommet.

Not brilliant, but easy and cheap to make and an OK approximation of a mic shock mount. The electret presses into the grommet and the grommet presses into a short length of tube.

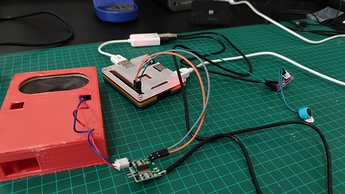

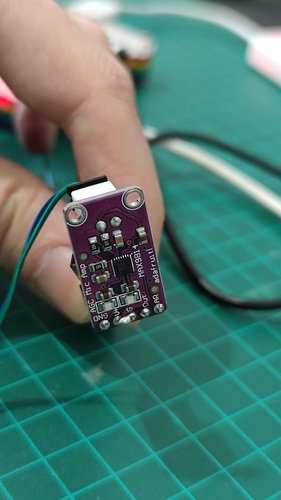

I suggest you get an electret and a MAX9812 board from somewhere. You don’t need an SSM2167, but it will add a noise gate and compressor.

My bad, as there are 2 types of sound card: the mono ones are mic in and the stereo ones are line in. The SSM2167 comes in handy to give extra gain for the stereo line-in cards, but it is not needed for the mono bias-level cards.

You can still gain from the SSM2167, as you could probably dial the sound card gain further down to 0dB and lower the AGC level whilst still maintaining good far-field levels with less noise.

Looks like it could be quite easy to provide better mic isolation with minimal work on the components you have.

Amplifier: being an audio snob I hate those mini amps and speakers, but they do a job and I’m sure it’s fit for purpose.

If you get a lot of hiss, try putting a series capacitor on the output of your soundcard, but it will probably work well without.

Are you involved with Violet, @Pep? The ears are cute, but it did occur to me that we do have DOA algorithms in the form of ODAS, so with a cheap slip ring maybe you could rotate 2x directional electret ears rather than just waggle them, or hard wire them with limited traverse.

Also, maybe you could cut a deal with https://partners.andreaelectronics.com/andrea-audio-software-for-raspberry-pi-os/ as I have a hunch their USB audio adapter is one of the Syba ($15) stereo line-in ones I have been using (I have the software but still have to test that one).

Beamforming solutions for the Pi do exist, sadly just not open-source ones.

Also, I think you could have a base-mounted speaker, as I did with some puck ideas, and use a much better amp and speaker, and maybe play with the idea of https://www.tectonicaudiolabs.com/.

The mounting screws I had for the speaker were much longer than needed, so they acted as pillars to give a 15mm gap to the surface the puck (which was PVC water tube) rests on, and it sounded pretty awesome.

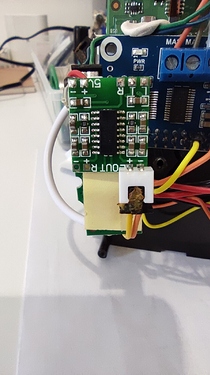

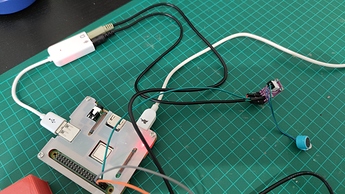

Your sound card looks exactly the same, but from experience you can never be sure with the truly wonderful offerings from China. If it is the same, lsusb will report it as 1b3f:2008 Generalplus Technology Inc.

I actually think they are better than the CM108 ones now, even if I’m unsure what the silicon is.

Loving your Nabaztag, as it is truly hilarious and likely just a great toy for kids, but it could also make an exceptionally good educational one.

AEC via https://voicen.io/audio_processing/aec/ or the C version I use will cancel the speaker extremely well, and it produces load only when media is playing.

I will knock you up a 32bit image, as I presume you’re not using the new 64bit just yet, then edit and post here.

One liner to reinstall alsa-plugins and update speex/speexdsp.

curl -sL https://raw.githubusercontent.com/StuartIanNaylor/Alsa-plugins-speex-update/main/Alsa-plugins-speex-update.sh | sudo -E bash -

One liner to install ec and alsa-plugins-fifo

curl -sL https://raw.githubusercontent.com/StuartIanNaylor/Alsa-plugins-speex-update/main/install-ec-fifo.sh | sudo -E bash -

To load the loopback on boot

echo 'snd-aloop' | sudo tee -a /etc/modules
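Since `tee -a` and `>>` append every time they run, re-running these steps leaves duplicate lines behind. A minimal sketch of a guard, assuming a POSIX shell with `grep`; `ensure_line` is a hypothetical helper name, not part of any package mentioned here:

```shell
# Append a line to a file only if that exact line is not already present.
# ensure_line is a hypothetical helper name used for illustration.
ensure_line() {
    file=$1
    line=$2
    # -x: match the whole line, -F: fixed string (no regex), -q: quiet
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}
```

For a user-owned file this would be `ensure_line "$HOME/.profile" 'sleep 10'`. For root-owned files such as /etc/modules the same idea fits on one line: `grep -qxF 'snd-aloop' /etc/modules || echo 'snd-aloop' | sudo tee -a /etc/modules`.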

This is optional, but as in the readme on https://github.com/StuartIanNaylor/Alsa-plugins-speex-update you can change the name of your card so that it is unique and recognised.

Run lsusb to get the vendor and product IDs. On my Pi it returns

    Bus 001 Device 005: ID 1b3f:2008 Generalplus Technology Inc.
    Bus 001 Device 004: ID 248a:00da Maxxter
    Bus 001 Device 003: ID 0424:ec00 Standard Microsystems Corp. SMSC9512/9514 Fast Ethernet Adapter
    Bus 001 Device 002: ID 0424:9514 Standard Microsystems Corp. SMC9514 Hub
    Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

echo 'SUBSYSTEM!="sound", GOTO="my_usb_audio_end"' | sudo tee -a /etc/udev/rules.d/70-alsa-permanent.rules

echo 'ACTION!="add", GOTO="my_usb_audio_end"' | sudo tee -a /etc/udev/rules.d/70-alsa-permanent.rules

echo 'ATTRS{idVendor}=="1b3f", ATTRS{idProduct}=="2008", ATTR{id}="VOICE"' | sudo tee -a /etc/udev/rules.d/70-alsa-permanent.rules

echo 'LABEL="my_usb_audio_end"' | sudo tee -a /etc/udev/rules.d/70-alsa-permanent.rules

My USB sound card is the Generalplus Technology Inc. one, so 1b3f:2008 is used. If you are unsure which device is yours, just unplug it and run lsusb once more to see which entry disappears.
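If you would rather not copy the IDs by hand, the lsusb output can be filtered. This is only a sketch, assuming the usual `ID vvvv:pppp` layout shown in the listing; `usb_ids` is a hypothetical helper name:

```shell
# Print "vendor product" for every lsusb line whose text matches $1
# (case-insensitive). Reads lsusb-style output on stdin.
# usb_ids is a hypothetical helper name used for illustration.
usb_ids() {
    grep -i -- "$1" |
        sed -n 's/.*ID \([0-9a-fA-F]\{4\}\):\([0-9a-fA-F]\{4\}\).*/\1 \2/p'
}
```

Usage would be `lsusb | usb_ids generalplus`, and the two values go into the idVendor and idProduct fields of the udev rule.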

Now when you reboot you should have a loopback device and a soundcard renamed ‘VOICE’.

It should then work with the /etc/asound.conf below, and this one liner will download and save it for you
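To confirm both cards came up after the reboot, the card IDs can be checked in /proc/asound/cards, where each ID appears in square brackets. A small sketch, with `has_card` being a hypothetical helper name and the optional second argument only there so it can be pointed at a saved listing:

```shell
# Succeed if a sound card with the given ID is listed.
# $1: card ID (e.g. VOICE), $2: optional path to a cards listing,
# defaulting to the live /proc/asound/cards.
# has_card is a hypothetical helper name used for illustration.
has_card() {
    grep -q "\[$1 *]" "${2:-/proc/asound/cards}"
}
```

Usage would be something like `has_card VOICE && has_card Loopback && echo "cards OK"`.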

sudo curl -o /etc/asound.conf https://raw.githubusercontent.com/StuartIanNaylor/Alsa-plugins-speex-update/main/asound.conf

    pcm.!default {
        type asym
        playback.pcm "eci"
        capture.pcm "agc"
    }

    pcm.eci {
        type plug
        slave {
            format S16_LE
            rate 16000
            channels 1
            pcm {
                type file
                slave.pcm null
                file "/tmp/ec.input"
                format "raw"
            }
        }
    }

    pcm.eco {
        type plug
        slave.pcm {
            type fifo
            infile "/tmp/ec.output"
            rate 16000
            format S16_LE
            channels 2
        }
    }

    pcm.agc {
        type speex
        slave.pcm "plughw:CARD=Loopback,DEV=1"
        agc 1
        agc_level 8000
        denoise no
        dereverb no
    }

The asym sets the default, so you can just use aplay & arecord without specifying a device, but first ec needs to be running.

So the last thing is to start ec on login via the .profile script in your $HOME dir.

echo './ec/ec -i plughw:VOICE -o plughw:VOICE -d 20 &' >> .profile

echo 'sleep 10' >> .profile

echo 'arecord -Deco -r16000 -fS16_LE -c1 | aplay -Dplughw:CARD=Loopback,DEV=0 &' >> .profile

Now on reboot, because the defaults are set, aplay & arecord should work without specifying a device.

You might want to be more sophisticated and create a service, apologies for my laziness, as .profile will run on every login of the user.
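For anyone who does want a service instead of .profile, here is a rough, untested sketch of a systemd unit for ec. The function name `ec_unit`, the unit name ec.service and the user and home directory arguments are all assumptions; only the ec command line itself comes from the steps above:

```shell
# Print a systemd unit that runs ec at boot instead of at login.
# $1: user to run as, $2: that user's home directory (where ./ec lives).
# ec_unit is a hypothetical helper; the unit is an untested sketch.
ec_unit() {
    user=$1
    home=$2
    cat <<EOF
[Unit]
Description=Echo cancellation (ec) on the VOICE sound card
After=sound.target

[Service]
User=$user
WorkingDirectory=$home
ExecStart=$home/ec/ec -i plughw:VOICE -o plughw:VOICE -d 20
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

It could be installed with `ec_unit pi /home/pi | sudo tee /etc/systemd/system/ec.service` followed by `sudo systemctl enable --now ec.service`. The arecord to aplay loopback pipe would still need starting separately, either from .profile or from a second unit ordered after this one.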

Not needed, but if you do want to name them, the play & record devices are eci & agc.

You might need to run alsamixer and drop the gain considerably on the soundcard, as the input from a powered module will be way too high.

Strangely I don’t have a running desktop version of Linux currently, so no image, but the above should be pretty easy.

You might be wondering why the loopback and pipe. If you record directly from eco and then stop, ec stops running, so instead the output gets piped to the loopback so that it remains persistent across multiple recordings.

Ec runs with a delay of -d 20 here, and the right value can differ between setups.

After installing ec there is a util folder; follow ‘Software EC with voice-engine / ec’ to find out what the latency and delay should be, then edit the ./ec/ec -i plughw:VOICE -o plughw:VOICE -d 20 line in .profile.

To test, arecord -r16000 -fS16_LE ec-rec.wav should give a result something like this.

https://drive.google.com/open?id=1fpDkMkJUM66IDDTNKMzr8aJFWYu1DK7q

Really, after reinstalling alsa-plugins the speex echo cancellation should work and ec would not be needed, but I found the results really terrible.

If someone wants to try it, the setup would be similar to the AGC block, with something like

    echo 1
    frames 10
    filter_length 256

maybe?

With AGC, agc_level 8000 sets the max gain, and any input will be normalised to it, so this sets how far your far field reaches, but higher values will contain more noise. Lower values give less gain but less noise, and 4000 might be preferable.

Also, I’m slightly confused about the VAD, as I presumed it was similar to https://github.com/wiseman/py-webrtcvad which is not just an RMS detector, since a VAD should classify a piece of audio data as being voiced or unvoiced.

So really it should be clever enough to ignore noise that is not of voice spectra. Does it allow you to set the aggressiveness of the voice filter, like vad.set_mode(1) in the above (0-3, 3 = most aggressive)?

Maybe on a Pi3A there is enough headroom for something a little more clever such as https://github.com/pyannote/pyannote-audio as quite a few relatively lightweight VAD/SAD (voice/speech activity detection) models work via a neural net.

PyTorch seems to be a really fast, lightweight framework, and I wish we had a KWS built with it, as Precise seems to be very heavy and not very precise for what it does.

Maybe https://github.com/castorini/honk

and sorry for the stupid question…
