@donburch I went through your tutorial but it did not work for me. I can’t get the system to listen for the wake word, and I don’t understand the MQTT part: internal or external? I do not have satellites. I am just testing with one Raspberry Pi.

That’s the problem with supporting so many different hardware and integrations … it can be so confusing trying to work out which bits are relevant to your own setup … especially when people write documentation and user guides without acknowledging that they apply to their own specific combination.

I use Node-RED for automations (from before HA did a major revision) and have 3 satellites, so I use the HA Mosquitto Broker add-on as an external MQTT server. If you are running everything on one machine, the internal MQTT should be fine for you.

Are you using one of the reSpeaker RasPi HAT models, or one of their USB models?

I am using one of the reSpeaker RasPi HAT (2 mics) models in a Raspberry Pi 3+.

I found an image here: GitHub - respeaker/seeed-voicecard (2 Mic Hat, 4 Mic Array, 6-Mic Circular Array Kit, and 4-Mic Linear Array Kit for Raspberry Pi) that should support the reSpeaker. I installed it and got the audio output working, but not the microphone.

Also, Rhasspy is unstable in that sometimes it detects your devices and sometimes it does not. You need to click the refresh button to make it detect them. It is not intuitive at all.

So maybe you can help me with my setup.

- Do you have, or know of, a working image that I can install directly on my Raspberry Pi?

- If not, let’s assume that the one I downloaded from the link I just shared (https://files.seeedstudio.com/linux/Raspberry%20Pi%204%20reSpeaker/2021-05-07-raspios-buster-armhf-lite-respeaker.img.xz) works. Can you help me configure it? I followed your steps, but they didn’t quite work for me. I also tried the Home Assistant Plugin Assistant with a USB device connected to the Raspberry Pi 4, and that didn’t work either.

I did:

sudo apt-get update

sudo apt-get install git

git clone https://github.com/respeaker/seeed-voicecard.git

cd seeed-voicecard/

sudo ./install.sh

sudo reboot

Then I tested it, and both the mic and the audio output worked:

aplay -l

arecord -l

arecord -D "plughw:2,0" -f S16_LE -r 16000 -d 5 -t wav test.wav

aplay -D "plughw:2,0" test.wav

Then I downloaded and installed Rhasspy:

wget https://github.com/rhasspy/rhasspy/releases/download/v2.5.11/rhasspy_armhf.deb

sudo dpkg -i rhasspy_armhf.deb

sudo apt-get install -y jq libopenblas-base

sudo apt --fix-broken install

sudo dpkg --configure rhasspy

sudo apt-get install python3-venv

python3 -m venv ~/rhasspy_venv

source ~/rhasspy_venv/bin/activate

And finally ran it:

/usr/bin/rhasspy --profile es

It reported that mosquitto was missing, so:

sudo apt install mosquitto mosquitto-clients

And ran it again:

/usr/bin/rhasspy --profile es

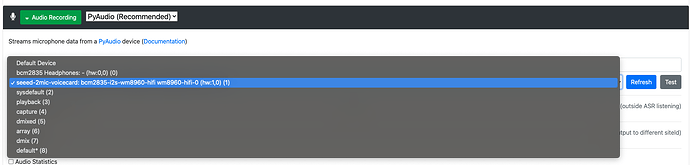

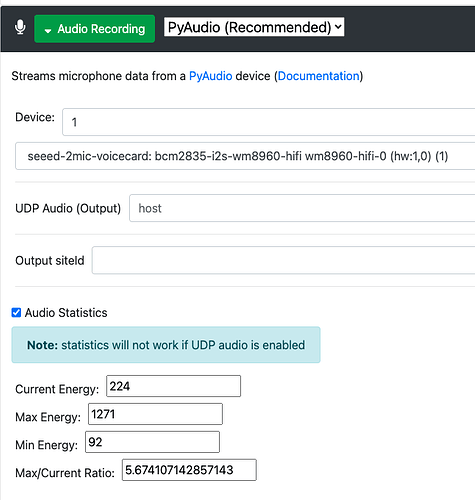

Audio Card found:

Statistics Working:

When I click the Wake Up button, the pop-up never disappears. I see some errors, but the errors are not the problem. The issue is that this app is really hard to install. I am looking for a ready-made, plug-and-play image.

At a quick read it looks as though that should work … but leaves me with a few questions.

Which tutorial did you follow ? This one ? Otherwise please post a link to it.

When I set mine up, the .IMG file on the Seeed website was already over 4 years old, so I did not use it. It looks as though someone has updated the image to the “buster” version (2021), so it should be usable.

When I was setting up my own Rhasspy satellites I had a similar problem, and so made a point in my tutorial of testing the audio input and output with arecord and aplay. No point carrying on until you know the hardware works.

Note that “arecord -l” lists the hardware capture cards and devices that ALSA has detected, whereas “arecord -L” lists every PCM name you can pass to -D (including plugin devices such as “plughw” and “default”). When you did the arecord/aplay of

arecord -D "plughw:2,0" -f S16_LE -r 16000 -d 5 -t wav test.wav

aplay -D "plughw:2,0" test.wav

did you speak and hear your speech ? If not, you will have to get this working first. Maybe “plughw:2,0” is not the correct device.
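If you are unsure whether “plughw:2,0” is the right device, the card and device numbers can be read out of the “arecord -l” listing. The following is only a minimal Python sketch of that idea: the helper names are made up for illustration, it assumes the English-language output format of arecord (hence `LC_ALL=C`), and the card name in the comment is just an example of what a listing line can look like.

```python
import os
import re
import subprocess
from typing import Dict, List

# Matches hardware lines of `arecord -l`, for example (illustrative only):
#   card 2: seeed2micvoicec [seeed-2mic-voicecard], device 0: ...
CARD_LINE = re.compile(r"^card (\d+): (\S+) \[(.*?)\], device (\d+):")


def parse_capture_devices(listing: str) -> List[Dict[str, str]]:
    """Turn the text printed by `arecord -l` into plughw device names."""
    devices = []
    for line in listing.splitlines():
        match = CARD_LINE.match(line)
        if match:
            card, short_name, long_name, device = match.groups()
            devices.append({
                "name": f"plughw:{card},{device}",  # value to pass to -D
                "card": short_name,
                "description": long_name,
            })
    return devices


def list_capture_devices() -> List[Dict[str, str]]:
    """Run `arecord -l` and parse its output (requires alsa-utils)."""
    env = dict(os.environ, LC_ALL="C")  # force untranslated output
    result = subprocess.run(
        ["arecord", "-l"], capture_output=True, text=True, check=True, env=env
    )
    return parse_capture_devices(result.stdout)
```

Calling `list_capture_devices()` on the Pi and printing each entry's `name` gives the candidates to try with `arecord -D`. It does not tell you which one is the reSpeaker microphone if several cards are present; the `card` and `description` fields are there to help with that.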

I don’t recall installing libopenblas-base or having to do a --fix-broken install … so I’m wondering if there was a problem here. Is rhasspy_armhf.deb the correct version for RasPi 4 (my RasPi Zero and RasPi 3A used different package files) ?

Both my current Rhasspy satellites use arecord for audio recording … though I remember being surprised a while back to discover that one was using PyAudio … so it may not make a difference.

If you are seeing the figures under “Audio Statistics” change with sound in the room, then Rhasspy is detecting sound.

The “Wake up” pop-up should time out after a minute or so. This suggests that there is a problem … and probably an error message somewhere.

yeah … NO ! Error messages always indicate a problem, especially when we don’t understand what the message means.

Tell us the error messages, and when and where they occur.

I understand that you’re frustrated … it took me 3 attempts over 12 months before I got Home Assistant running, and Rhasspy was almost as many learning curves. The official Rhasspy documentation is better than that of a lot of FOSS projects, but it is still noticeably “notes by the developer for other developers”. User documentation is a whole different kettle of fish.

Google and Amazon provide ready plug and play devices. They even have a lot of support in Home Assistant.

Thank you for pointing out the tutorial. I apologize for not sharing the URL earlier; I thought I had included it.

Here’s some fresh and good news: after numerous attempts, I finally got the microphone and speaker to work. This task is now accomplished. How did I do it? I followed some steps in a different order, and it worked. You were right about the ERRORS indicating something specific: a library needed updating, and I needed to understand better how the Test button functions when testing the mic. It seems you have to click it until the system recognizes it and displays a “working” message.

Now, I have another challenge that hasn’t gone as planned, so I might consider moving on from Rhasspy and continue with my previous coding project.

Here’s a summary of my current goals:

- I don’t want to create intents with device names. It involves too much writing for anyone integrating an assistant with Home Assistant (HA). Additionally, it’s not just about the intents but also writing down the device names.

- I want an app that recognizes smart events and can handle conversations without setup, like in Home Assistant. I want to send any input and have a smart engine understand it. I’ve achieved this using Ollama and the ChatGPT API.

Here’s what I’ve done with Rhasspy so far:

- Wake Word Configuration: I’m using Porcupine for the wake word. Rhasspy needs improvement in this area. For instance, it should be listening asynchronously all the time using threads and queues. Currently, it’s a synchronous app, which is not ideal for voice assistance. Continuous listening is crucial, as anyone who uses Alexa will understand.

- Speech to Text: I’ve tried Pocketsphinx and Kaldi. They work fine unless the option [Open transcription mode] is set to true. With this option, they use the entire word library, which is my intention. However, it causes issues:

- It takes 30-60 seconds to recognize simple commands like “turn off the lamp” and often doesn’t recognize them correctly.

- It takes around 10 seconds to process another voice command, likely due to its synchronous nature. It’s not listening continuously like Alexa. I’ve implemented continuous listening in another app I’m working on.

- Intent Recognition and Handling: For intent recognition, I just get the text and return it to Rhasspy, which then calls my middleware for intent handling. The middleware processes commands via HA, Ollama, or ChatGPT, depending on the received command. My next step is creating an Assistant for Ollama. Currently, I have one for ChatGPT that understands and processes commands without prior setup.
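To make the middleware idea above more concrete, here is a minimal Python sketch of routing a transcribed command to one of several backends. It is not the actual middleware: the post does not say how the routing decision is made, so the keyword rule, the function names, and the stub handlers (which only return a tagged string instead of calling HA, Ollama, or ChatGPT) are all assumptions for illustration.

```python
from typing import Callable, List, Tuple

Handler = Callable[[str], str]
Route = Tuple[Callable[[str], bool], Handler]

# Hypothetical rule: phrases that suggest a smart-home device command.
DEVICE_VERBS = ("turn on", "turn off", "switch on", "switch off", "dim")


def looks_like_device_command(text: str) -> bool:
    lowered = text.lower()
    return any(verb in lowered for verb in DEVICE_VERBS)


def handle_with_home_assistant(text: str) -> str:
    # Stub: a real handler would call Home Assistant here.
    return f"[home-assistant] {text}"


def handle_with_llm(text: str) -> str:
    # Stub: a real handler would call Ollama or the ChatGPT API here.
    return f"[llm] {text}"


def build_router(routes: List[Route], fallback: Handler) -> Handler:
    """Return a function that sends text to the first matching handler."""
    def route(text: str) -> str:
        for matches, handler in routes:
            if matches(text):
                return handler(text)
        return fallback(text)  # nothing matched: use the general engine
    return route


router = build_router(
    routes=[(looks_like_device_command, handle_with_home_assistant)],
    fallback=handle_with_llm,
)
```

With these stubs, `router("Turn off the lamp")` goes to the Home Assistant handler and anything else falls through to the LLM handler. Keeping the routes in a list makes it straightforward to add a further backend without touching the dispatch loop.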

Next Steps:

- Edit Rhasspy Code: I could make Rhasspy asynchronous. However, this might take too much time, so I might focus on completing my own async listener.

- Improve Voice Recognition: Continue with synchronous Rhasspy but implement Amazon Transcribe for better voice recognition and faster processing.

- Upgrade Hardware: Install Rhasspy on a more powerful computer to see if using Kaldi or PocketSphinx improves recognition speed and accuracy.

I have two apps: an incomplete async listener and a middleware that’s mostly finished but might have some bugs. I’m willing to open the repository code to everyone, hoping Python developers can help improve both libraries.
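For readers wondering what “threads and queues” could look like for a listener, here is a minimal Python sketch of the producer/consumer pattern: one thread keeps capturing audio chunks while another processes them, so capture never waits on recognition. This is not the listener mentioned above and not how Rhasspy is built; the function names are invented, and the audio source is any iterable of byte chunks so the example runs without a microphone.

```python
import queue
import threading
from typing import Callable, Iterable

STOP = object()  # sentinel that tells the consumer to finish


def capture_loop(chunks: Iterable[bytes], audio_queue: "queue.Queue") -> None:
    """Producer: push every captured chunk onto the queue."""
    for chunk in chunks:
        audio_queue.put(chunk)  # blocks only if the queue is full
    audio_queue.put(STOP)


def processing_loop(
    audio_queue: "queue.Queue", on_chunk: Callable[[bytes], None]
) -> None:
    """Consumer: handle chunks in arrival order until STOP is seen."""
    while True:
        chunk = audio_queue.get()
        if chunk is STOP:
            break
        on_chunk(chunk)


def run_listener(
    chunks: Iterable[bytes], on_chunk: Callable[[bytes], None]
) -> None:
    """Run capture and processing in separate threads and wait for both."""
    audio_queue: "queue.Queue" = queue.Queue(maxsize=64)
    producer = threading.Thread(
        target=capture_loop, args=(chunks, audio_queue), daemon=True
    )
    consumer = threading.Thread(
        target=processing_loop, args=(audio_queue, on_chunk), daemon=True
    )
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
```

In a real listener, `chunks` would be a generator reading from the microphone and `on_chunk` would feed the wake word or speech engine. The bounded queue is a deliberate choice: if processing falls far behind, the producer blocks instead of letting memory grow without limit.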

Once I finish integrating Amazon Transcribe with Rhasspy (using execute local command), I’ll share the middleware code. I need to work more on the listener before releasing it.

If any developer is interested in helping improve this library, please send me a PM.

Thanks for reading!

Well, I finally did what I wanted to do. I noticed that Rhasspy is too slow to work with any dictionary, and even Kaldi, for some reason, isn’t really accurate. So I have created a new endpoint which processes the WAV audio generated by Rhasspy much, much faster. I am using the Vosk package and its KaldiRecognizer. With the text I get from the WAV file, I just call my middleware, which calls HA.
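For anyone who wants to try the same approach, transcribing a WAV file with Vosk can be sketched roughly as below. This is not the endpoint described above, only a minimal example under some assumptions: the `vosk` package is installed, a Vosk model has been downloaded and unpacked at `model_path`, and the recording is 16-bit mono PCM. The function names are made up for illustration.

```python
import json
import wave


def extract_text(result_json: str) -> str:
    """Pull the 'text' field out of a Vosk JSON result string."""
    return json.loads(result_json).get("text", "")


def transcribe_wav(wav_path: str, model_path: str) -> str:
    """Transcribe a 16-bit mono PCM WAV file with Vosk."""
    with wave.open(wav_path, "rb") as wav_file:
        if (
            wav_file.getnchannels() != 1
            or wav_file.getsampwidth() != 2
            or wav_file.getcomptype() != "NONE"
        ):
            raise ValueError("expected a 16-bit mono PCM WAV file")

        # Imported late so the format check works without Vosk installed.
        from vosk import KaldiRecognizer, Model

        recognizer = KaldiRecognizer(Model(model_path), wav_file.getframerate())
        pieces = []
        while True:
            data = wav_file.readframes(4000)
            if not data:
                break
            # True means Vosk detected the end of an utterance.
            if recognizer.AcceptWaveform(data):
                pieces.append(extract_text(recognizer.Result()))
        pieces.append(extract_text(recognizer.FinalResult()))
        return " ".join(piece for piece in pieces if piece)
```

The returned string can then be handed to whatever does the intent handling. Loading the model is the slow part, so a long-running endpoint would normally create the `Model` once at start-up and reuse it for every request rather than loading it per call as this sketch does.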

Now I will work on merging the two libraries into one, and I will publish it for everyone to use.