Hi,

I'm starting to set up Rhasspy: one server running as a Home Assistant Supervised add-on (the host runs Manjaro Linux) and a Raspberry Pi 3B+ running as a satellite that handles all the audio. The microphone for the Pi will arrive soon; I tested the Pi yesterday with my big condenser mic and audio interface, and I'm impressed. My Hass.io server doesn't recognize any of my sound devices, which is why I'm going this route. Now I want to prepare some automations, and I'm trying to get them working by typing the intents. Intents are recognized fine on both the server and the satellite, but even after setting intent handling to "Send events", Rhasspy still sends intents. I don't like that, because adding a new intent means restarting HA, which gets pretty annoying. Reacting to events is easier for me, since I can handle them either from automations or from Node-RED, where I have already automated many things, like controlling my Xbox and amp through a cheap IR remote. Now I want to extend that to voice control.

Do you need anything else that I missed?

Which version of the addon are you using?

The "Send events" option should send events, not intents. Are you sure that is what's happening?

As in: do you have some logging that confirms the issue?

I'm using version 2.5.5 of the add-on; the Pi was installed yesterday through Docker with the `latest` tag.

I get this in my HA logs (the `snips` and `intent` integrations are enabled, but I have no intent script configured):

```
Logger: homeassistant.components.snips
Source: components/snips/__init__.py:160
Integration: Snips (documentation, issues)
First occurred: 19:39:29 (1 occurrences)
Last logged: 19:39:29

Received unknown intent GetTemperature
```

And in Rhasspy I get this (the test sentence "wie heiß ist es" means "how hot is it"):

```
[DEBUG:2020-09-29 18:55:11,422] rhasspyserver_hermes: Publishing 18 bytes(s) to rhasspy/handle/toggleOn
[DEBUG:2020-09-29 18:55:11,418] rhasspyserver_hermes: -> HandleToggleOn(site_id='snow')
[DEBUG:2020-09-29 18:55:11,414] rhasspyserver_hermes: Sent 394 char(s) to websocket
[DEBUG:2020-09-29 18:55:11,403] rhasspyserver_hermes: Handling NluIntent (topic=hermes/intent/GetTemperature, id=ed4a3b25-f6c4-40c3-8aa5-9ad2af73600a)
[DEBUG:2020-09-29 18:55:11,402] rhasspyserver_hermes: <- NluIntent(input='wie heiß ist es', intent=Intent(intent_name='GetTemperature', confidence_score=1.0), site_id='snow', id='5f25e1cf-3672-429f-b2c9-def7ad553771', slots=[], session_id='5f25e1cf-3672-429f-b2c9-def7ad553771', custom_data=None, asr_tokens=[[AsrToken(value='wie', confidence=1.0, range_start=0, range_end=3, time=None), AsrToken(value='heiß', confidence=1.0, range_start=4, range_end=8, time=None), AsrToken(value='ist', confidence=1.0, range_start=9, range_end=12, time=None), AsrToken(value='es', confidence=1.0, range_start=13, range_end=15, time=None)]], asr_confidence=None, raw_input='wie heiß ist es', wakeword_id=None, lang=None)
[DEBUG:2020-09-29 18:55:11,313] rhasspyserver_hermes: Publishing 206 bytes(s) to hermes/nlu/query
[DEBUG:2020-09-29 18:55:11,312] rhasspyserver_hermes: -> NluQuery(input='wie heiß ist es', site_id='snow', id='5f25e1cf-3672-429f-b2c9-def7ad553771', intent_filter=None, session_id='5f25e1cf-3672-429f-b2c9-def7ad553771', wakeword_id=None, lang=None)
[DEBUG:2020-09-29 18:55:11,304] rhasspyserver_hermes: Publishing 18 bytes(s) to rhasspy/handle/toggleOff
[DEBUG:2020-09-29 18:55:11,303] rhasspyserver_hermes: -> HandleToggleOff(site_id='snow')
```
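Both logs come from the same recognized intent. The Rhasspy log shows it handled on the Hermes topic `hermes/intent/GetTemperature`, and, as far as I know, the HA `snips` integration subscribes to `hermes/intent/#`, which would explain the "Received unknown intent" warning when no intent script exists. Events, by contrast, show up in HA as `rhasspy_<IntentName>` (like `rhasspy_Lights` and `rhasspy_GetTemperature` later in this thread). A small sketch of that naming, with hypothetical helper names:

```python
HERMES_INTENT_PREFIX = "hermes/intent/"  # topic prefix seen in the Rhasspy log
HA_EVENT_PREFIX = "rhasspy_"             # event prefix used in this thread's automations


def intent_from_topic(topic: str) -> str:
    """Extract the intent name from a Hermes intent topic."""
    if not topic.startswith(HERMES_INTENT_PREFIX):
        raise ValueError(f"not a Hermes intent topic: {topic!r}")
    return topic[len(HERMES_INTENT_PREFIX):]


def ha_event_type(intent_name: str) -> str:
    """Event type an automation or Node-RED event node would listen for."""
    return HA_EVENT_PREFIX + intent_name


if __name__ == "__main__":
    name = intent_from_topic("hermes/intent/GetTemperature")
    print(name)                 # GetTemperature
    print(ha_event_type(name))  # rhasspy_GetTemperature
```

So the same intent can surface twice: once as an MQTT intent message (what `snips` reacts to) and once as an HA event (what automations and Node-RED react to).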

That is indeed strange. Can you post your profile?

You can find it under the "Advanced" settings.

```json
{
  "dialogue": {
    "satellite_site_ids": "pi",
    "system": "rhasspy"
  },
  "handle": {
    "system": "hass"
  },
  "home_assistant": {
    "access_token": "[Token]",
    "handle_type": "event",
    "url": "http://192.168.2.33:8123"
  },
  "intent": {
    "satellite_site_ids": "pi",
    "system": "fsticuffs"
  },
  "microphone": {
    "system": "hermes"
  },
  "mqtt": {
    "enabled": "true",
    "host": "192.168.2.33",
    "password": "[PW]",
    "site_id": "snow",
    "username": "tenn0"
  },
  "sounds": {
    "aplay": {
      "device": "jack"
    },
    "system": "hermes"
  },
  "speech_to_text": {
    "satellite_site_ids": "pi",
    "system": "pocketsphinx"
  },
  "text_to_speech": {
    "espeak": {
      "voice": "de"
    },
    "flite": {
      "voice": "kal"
    },
    "picotts": {
      "language": "german"
    },
    "satellite_site_ids": "pi",
    "system": "espeak"
  },
  "wake": {
    "system": "hermes"
  }
}
```

Token is censored.

Your password probably isn't yet.

How do you plan to do TTS in your setup? Using a tts.*_say service in Home Assistant?

For the moment, my plan is to use Node-RED flows and the `snips.say` service, since it's easier to extend my current remote-control flows than to script everything again from scratch.

I think this might be a defect when setting the intent/event option.

I have a `publish_intents` boolean under `mqtt`.

Try adding this under `"mqtt"`, just below `"password"`:

`"publish_intents": false,`
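If you would rather patch `profile.json` with a script than through the Advanced settings page, here is a minimal sketch. It only sets the two keys discussed in this thread (`home_assistant.handle_type` and `mqtt.publish_intents`) and leaves everything else alone. The function names are hypothetical, the file path is whatever your install uses, and you will likely need to restart Rhasspy afterwards (assumption).

```python
import json


def prefer_events(profile: dict) -> dict:
    """Return a copy of a Rhasspy profile that asks for HA events, not intents.

    Only the keys discussed in the thread are touched; everything else is kept.
    """
    patched = json.loads(json.dumps(profile))  # deep copy of plain JSON data
    patched.setdefault("home_assistant", {})["handle_type"] = "event"
    patched.setdefault("mqtt", {})["publish_intents"] = False
    return patched


def patch_profile_file(path: str) -> None:
    """Rewrite a profile.json in place with prefer_events() applied."""
    with open(path, encoding="utf-8") as f:
        profile = json.load(f)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(prefer_events(profile), f, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    profile = {
        "home_assistant": {"handle_type": "intent", "url": "http://192.168.2.33:8123"},
        "mqtt": {"enabled": "true", "host": "192.168.2.33", "site_id": "snow"},
    }
    print(json.dumps(prefer_events(profile), indent=2))
```

Whether `publish_intents` actually stops the intent messages is exactly what is being tested here, so treat this as an experiment rather than a fix.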

It's still sending intents, but now it also sends events.

Fresh log from Rhasspy:

```
[DEBUG:2020-09-30 19:33:53,779] rhasspyserver_hermes: Sent 394 char(s) to websocket
[DEBUG:2020-09-30 19:33:53,776] rhasspyserver_hermes: Handling NluIntent (topic=hermes/intent/GetTemperature, id=aff6a966-c22a-4ce4-a14f-f51b813f3501)
[DEBUG:2020-09-30 19:33:53,776] rhasspyserver_hermes: <- NluIntent(input='wie heiß ist es', intent=Intent(intent_name='GetTemperature', confidence_score=1.0), site_id='snow', id='c2cd1401-a42c-4487-a6d4-90544ae94f17', slots=[], session_id='c2cd1401-a42c-4487-a6d4-90544ae94f17', custom_data=None, asr_tokens=[[AsrToken(value='wie', confidence=1.0, range_start=0, range_end=3, time=None), AsrToken(value='heiß', confidence=1.0, range_start=4, range_end=8, time=None), AsrToken(value='ist', confidence=1.0, range_start=9, range_end=12, time=None), AsrToken(value='es', confidence=1.0, range_start=13, range_end=15, time=None)]], asr_confidence=None, raw_input='wie heiß ist es', wakeword_id=None, lang=None)
[DEBUG:2020-09-30 19:33:53,622] rhasspyserver_hermes: Publishing 206 bytes(s) to hermes/nlu/query
[DEBUG:2020-09-30 19:33:53,621] rhasspyserver_hermes: -> NluQuery(input='wie heiß ist es', site_id='snow', id='c2cd1401-a42c-4487-a6d4-90544ae94f17', intent_filter=None, session_id='c2cd1401-a42c-4487-a6d4-90544ae94f17', wakeword_id=None, lang=None)
```

It's not that big a deal that it sends both intents and events; it just floods my HA log. Do I need `intent` enabled in HA for `snips` to work? I'm using the `snips.say` service for TTS responses.

Nope, the component works fine without it. You might consider `google_cloud` for TTS, though; that way you can get rid of the `snips` component and stop the log from flooding.

Is there any other HA component I could use besides `google_cloud`? `snips.say` and `snips.say_action` are easy to use and work locally without internet, which is important to me since I can't rely on a stable connection. Google Cloud becomes paid after a few million characters, which I don't like, and it's cloud-based, which I also dislike.

Well, `google_cloud` responses are cached, so each one is only generated once.

You can also use Rhasspy's own TTS. There are several ways to do that.

Add this to your `configuration.yaml`:

```yaml
rest_command:
  rhasspy_speak:
    url: 'http://<IPRHASSPY>:12101/api/text-to-speech'
    method: 'POST'
    payload: '{{ payload }}'
    content_type: text/plain
```

`payload` should be the text you want spoken.
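For testing outside HA, the same request can be sent from a script. This is a sketch of what the `rest_command` above does: a POST with a plain-text body to `/api/text-to-speech`. The host `192.168.2.33` and port `12101` are taken from this thread and are assumptions for your setup (one install below uses `12102`); the helper names are hypothetical.

```python
import urllib.request

# Assumption: Rhasspy's web API address; adjust host and port to your install.
RHASSPY_URL = "http://192.168.2.33:12101"


def build_tts_request(text: str, base_url: str = RHASSPY_URL) -> urllib.request.Request:
    """Build the same POST the rest_command sends: plain-text body to /api/text-to-speech."""
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/text-to-speech",
        data=text.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


def speak(text: str, base_url: str = RHASSPY_URL, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(build_tts_request(text, base_url), timeout=timeout) as resp:
        return resp.status
```

Calling `speak("Es sind 23,3 Grad Celsius")` should make Rhasspy say the sentence if the URL is right, which is a quick way to tell an HA/Node-RED templating problem apart from an audio problem.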

In Home Assistant you can respond to events from Rhasspy with an automation.

In my case (in Dutch), I have an event for turning on the lights.

```yaml
- id: '1581372525473'
  alias: EventLampen
  trigger:
  - event_data: {}
    event_type: rhasspy_Lights
    platform: event
  condition: []
  action:
  - data_template:
      payload: OK, {{ trigger.event.data.location }} {% if trigger.event.data.action == "on" %}aan{% else %}uit{% endif %}
    service: rest_command.rhasspy_speak
  - data_template:
      entity_id: light.{{ trigger.event.data.location }}
    service_template: light.turn_{{ trigger.event.data.action }}
```

`location` and `action` are slots from Rhasspy.

As you can see, I call the `rest_command.rhasspy_speak` service with a payload.
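The Jinja templates in this automation are compact, so here is the same logic as a plain function. It's a sketch for checking what HA will say and which service it will call for given slot values; the real rendering is done by Home Assistant. The function name and the slot value `woonkamer` are hypothetical.

```python
from typing import Tuple


def lights_response(event_data: dict) -> Tuple[str, str, str]:
    """Mirror the templates in the EventLampen automation.

    Returns (spoken payload, service to call, target entity_id).
    """
    location = event_data["location"]
    action = event_data["action"]  # expected to be "on" or "off"
    word = "aan" if action == "on" else "uit"  # Dutch for on / off
    payload = f"OK, {location} {word}"
    service = f"light.turn_{action}"
    entity_id = f"light.{location}"
    return payload, service, entity_id


if __name__ == "__main__":
    print(lights_response({"location": "woonkamer", "action": "on"}))
    # ('OK, woonkamer aan', 'light.turn_on', 'light.woonkamer')
```

Note that the slot values must match your entity IDs exactly, because `location` is pasted straight into `light.<location>`.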

Now that I've got my setup working, I'm working on getting dynamic responses via the REST command.

My setup now looks like this: instead of a server with a Pi as a satellite, I got the HA Supervisor audio plugin to work (it needs a few restarts and then some time to pick up the audio hardware) and dropped the Pi as a satellite. I'm using Raven for hotword detection. It's sometimes a bit slow to recognize the wake word, but I'm sure I can tweak its settings to improve that. I'm also getting my events in HA as expected.

Now the next step: I'm handling the event `rhasspy_GetTemperature` in Node-RED and want a dynamic response to it. If I set `"payload": "Hi"` in your example service via Node-RED, everything works and I get my static "Hi" as the answer. After my event node, a current_state node reads the current state of my temperature sensor, and then in the call_service node for the `rest_command.rhasspy_speak` service I set `"payload": msg.payload`. But the logs show no text for TTS to say. I tried a few variations without luck. Any guesses on your side?

Can you share what you did to get the HA audio plugin working?

I'm struggling with that.

If you are using events, you basically do not need Node-red.

In my example, I have an automation listening to the rhasspy_Lights event.

You could do that as well with rhasspy_GetTemperature.

I'll see if I can get things working in Node-RED.

My guess is that the whole message is the payload, not `msg.payload`. I think it can be set on the node.

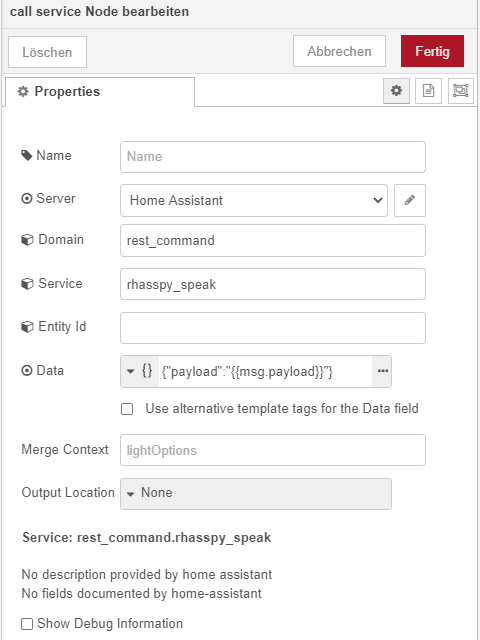

Edit2:

Fill in the following in the Node-RED node:

domain: `rest_command`

service: `rhasspy_speak`

data: `{"payload": "{{ payload }}"}`
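To make the value's path easier to follow: assuming, as suggested above, that the Node-RED call-service node renders `{{ payload }}` mustache-style with the incoming `msg` as its context, the placeholder reads `msg.payload`. The rendered JSON becomes the service data, and HA's `rest_command` template `'{{ payload }}'` then reads the `payload` key from it. The sketch below imitates only the first step with a tiny regex renderer. It is not Node-RED's actual code, and the helper names are hypothetical.

```python
import json
import re

# Matches {{ payload }} or {{ msg.payload }}: a key or a dotted path.
_PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def _lookup(context: dict, path: str) -> str:
    """Walk a dotted path through nested dicts; missing keys give ''."""
    value = context
    for part in path.split("."):
        if not isinstance(value, dict) or part not in value:
            return ""  # mustache renders unknown variables as empty text
        value = value[part]
    return str(value)


def render_data(template: str, msg: dict) -> dict:
    """Fill placeholders using msg as the context, then parse the result as JSON."""
    rendered = _PLACEHOLDER.sub(lambda m: _lookup(msg, m.group(1)), template)
    return json.loads(rendered)


if __name__ == "__main__":
    msg = {"payload": 23.3}
    print(render_data('{"payload": "{{ payload }}"}', msg))      # {'payload': '23.3'}
    print(render_data('{"payload": "{{ msg.payload }}"}', msg))  # {'payload': ''}
```

Under that assumption, `{{ msg.payload }}` would silently render as empty text, which is one possible source of an empty TTS string. It doesn't explain every failure reported below, so check the node's own documentation for how its template context is built.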

First: after every change to the audio hardware, you have to run `ha audio restart` a couple of times, even if you accidentally unplug and replug your external sound card, as just happened to me. Then you have to wait. If your device isn't listed in `ha audio info`, restart the audio plugin a few more times and wait again. I don't know exactly how long it takes, but it doesn't pick up devices as fast as I'd like, so check every now and then whether `ha audio` has detected the device. To be honest, it would be easier to run every audio-related container as a normal Docker container instead of using Supervisor add-ons.

My hardware: a FiiO Q1 Mk2 (I know, it's a hi-fi device, but it's currently not in use, and I'm using what I have instead of buying things I don't need) as the sound card for the speakers, and a cheap USB condenser mic from Amazon for 16 euros. HA runs as a Supervised install on an old office PC from 2006 with a Pentium Dual and 3 GB of RAM; the OS is Manjaro Linux, since it's the distro I kind of grew up with as a Linux user. The PC also serves as a small Samba server (with a dedicated 2 TB HDD, running natively on the host), an ad blocker with AdGuard, a zigbee2mqtt gateway, an InfluxDB host and now the Rhasspy server. Its performance isn't great compared to my gaming setup, especially when compiling ESPHome firmware, but it works and I got it for free. My Raspberry Pi is a bit of a pain to work with: I hate ARM CPUs in desktop/server applications, I call Raspberry Pis "SD card shredders", and I don't want to buy an SSD just for that little device. It's only used for presence detection (BLE with room-assistant; Raspbian runs from a USB stick) and can't easily be expanded with additional HDDs for storage.

The node config works partially: the text in the Rhasspy logs is still empty, but it tries to say something. It can't, though, because I accidentally replugged my external sound card. I'll test that later.

Still, the text to be spoken is empty, even if I manually set `msg.payload` to a string like "hi ho" with a change node. At least that's the case with your example code as the HA service.

If I POST to the URL with an http request node, it speaks as intended.

Can you post your rest_command and also a screenshot from the call service node?

```yaml
rest_command:
  rhasspy_speak:
    url: 'http://192.168.2.33:12102/api/text-to-speech'
    method: 'POST'
    payload: '{{ payload }}'
    content_type: text/plain
```

But I'm now using an http request node instead, which does exactly the same as the service; the only downside is that I have to insert its settings into every flow I want to use it in. That's not a big problem for me. At least it works correctly.

The data input on the Node-RED node should be:

`{"payload": "{{ payload }}"}`

Not `msg.payload`.

Also, edit the field in the editor, not in the input field.

When I tried to change it in the input field, I somehow got the rich-text (curly) version of the double quotes.
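The curly-quote problem is easy to reproduce outside Node-RED: a JSON parser rejects the typographic quotes U+201C and U+201D that rich-text inputs (and forum software) substitute for ASCII `"`. A minimal check-and-fix sketch, with hypothetical helper names:

```python
import json

# Typographic double quotes mapped back to the ASCII quote JSON requires.
_SMART_DOUBLE_QUOTES = str.maketrans({"\u201c": '"', "\u201d": '"', "\u201e": '"'})


def is_valid_json(text: str) -> bool:
    """True if text parses as JSON."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True


def straighten_quotes(text: str) -> str:
    """Replace curly/low double quotes with ASCII double quotes."""
    return text.translate(_SMART_DOUBLE_QUOTES)


if __name__ == "__main__":
    pasted = "{\u201cpayload\u201d: \u201c{{ payload }}\u201d}"
    print(is_valid_json(pasted))                     # False
    print(is_valid_json(straighten_quotes(pasted)))  # True
```

If the data field looks right but the call still fails, pasting its contents through a check like this is a cheap way to rule out invisible quote substitution.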

Sorry for the long pause; I had problems with Rhasspy and audio. I switched back from the Hass.io add-on to a normal Docker container, because the HA audio plugin needed way too much time to pick up my external audio hardware after a server reboot. With the normal container I had similar issues: the devices were found in the config but weren't usable (testing TTS gave errors instead of output). Once they became usable, it "swallowed" a few TTS words at the beginning of a sentence, for example "23,3 Grad Celsius" instead of "Es sind 23,3 Grad Celsius" ("23.3 degrees Celsius" instead of "It is 23.3 degrees Celsius"). This was fairly random: sometimes it worked, but most of the time it didn't.

After this reboot (the onboard buzzer was beeping constantly; I don't know why, but the reboot fixed it), the same problem: still no usable devices. I tried the Hass.io add-on again; the devices are picked up by the HA audio plugin, but there are still no usable audio devices. The Test button under recording devices works, but it doesn't mark the correct sound card (i.e. the USB mic) as working.

In Node-RED, if you want to pass a node a JSON parameter that is the message from the previous node in the chain, it has to be `msg.payload`. Either way, whether I set `{"payload": "{{ payload }}"}`, `{"payload": "{{ msg.payload }}"}` or any variant of these, no text appears in the logs and there is no audible output. The HA log has nothing about this either.