I have been playing around with the PulseAudio beamformer and am actually getting great results.

I think last time the idea was binned because the main focus was AEC (which is pretty lousy, given the noise threshold at which it fails) and because the beamformer wasn't steerable (what's the point in that?).

I think I might have been using a PS3 Eye, which could have been the problem, as its mics are only about 10 or 20 mm apart, and I was probably also using a 16 kHz sample rate.

The speed of sound is 343 m/s, so dividing that by 16,000 gives 0.0214 m, or about 21 mm travelled per sample. With mics only 10 to 20 mm apart, the whole range of possible inter-mic delays is less than one sample, which is likely why my tests stunk.
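To put numbers on that, here is a small sketch that computes the largest possible delay between two mics (sound arriving end-on along the array axis) in samples. It assumes 343 m/s as above; the 48 kHz rows are only added for comparison and are not something I tested.

```python
# Speed of sound in air at roughly room temperature (m/s).
SPEED_OF_SOUND = 343.0


def metres_per_sample(sample_rate_hz: float) -> float:
    """Distance sound travels during one sample period."""
    return SPEED_OF_SOUND / sample_rate_hz


def max_delay_samples(mic_spacing_m: float, sample_rate_hz: float) -> float:
    """Largest possible inter-mic delay (source on the array axis), in samples."""
    return mic_spacing_m / metres_per_sample(sample_rate_hz)


if __name__ == "__main__":
    for spacing_mm in (10, 20, 96):
        for rate in (16_000, 48_000):
            d = max_delay_samples(spacing_mm / 1000, rate)
            print(f"{spacing_mm:>3} mm @ {rate // 1000} kHz: max delay {d:.2f} samples")
```

At 16 kHz this gives about 0.47 samples for 10 mm and 0.93 samples for 20 mm, but about 4.48 samples for the 96 mm array, so the wider spacing gives the beamformer far more delay resolution to work with.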

So here is something much easier: two files recorded with a 2-mic array running PulseAudio beamforming, with a USB speaker 45 degrees behind it and another mic to the left facing the 2-mic array, the speaker and me.

nobeam.wav should give you an idea of the real levels of my voice and of the noise from the speaker, and beamform.wav is the result you get from the PulseAudio beamformer with a 2-mic, 96 mm array at 16 kHz.

https://drive.google.com/open?id=1hOoNGuWBm0tlZMdFJjC3qmHVQSW0U3c9

https://drive.google.com/open?id=1rlNIv0BDxjCZKQGqXFbzVmBvfIaVKFQc

If you listen to the second one, you will realise that, hey, we do have beamforming, and it works really well.

I think last time the ReSpeaker 2-mic HAT caused all sorts of problems with PulseAudio, and, stupidly, I had it lying flat rather than vertical and facing a voice actor, so I wrote off PulseAudio beamforming as a bad job when it's actually rather excellent.

So I have been rethinking this, as I can steer the beam simply by unloading the echo-cancel module and reloading it with new steering coordinates.
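A minimal sketch of that unload/reload steering driven from Python, assuming the `pactl` CLI and the WebRTC canceller's `beamforming`, `mic_geometry` and `target_direction` keys inside `aec_args` (PulseAudio 11 and later, as far as I know). The `target_direction` format (azimuth, elevation, radius, angles in radians, with 90 degrees as broadside) is an assumption worth checking against your PulseAudio version, and the helper names are hypothetical.

```python
import math
import subprocess
from typing import List, Optional


def mic_geometry_linear(spacing_m: float) -> str:
    """x,y,z coordinates (metres) of two mics on the x axis, centred on the origin."""
    half = spacing_m / 2
    return f"{-half:g},0,0,{half:g},0,0"


def load_echo_cancel_cmd(spacing_m: float, azimuth_deg: float,
                         source_master: Optional[str] = None) -> List[str]:
    """Build a pactl command that loads module-echo-cancel with beamforming on."""
    aec_args = " ".join([
        "beamforming=1",
        f"mic_geometry={mic_geometry_linear(spacing_m)}",
        # ASSUMPTION: azimuth,elevation,radius with angles in radians.
        f"target_direction={math.radians(azimuth_deg):.4f},0,1",
    ])
    cmd = ["pactl", "load-module", "module-echo-cancel",
           "aec_method=webrtc",
           # The double quotes are for PulseAudio's own module-argument parser;
           # no shell is involved, so they reach pactl unchanged.
           f'aec_args="{aec_args}"',
           "rate=16000", "channels=2"]
    if source_master:
        cmd.append(f"source_master={source_master}")
    return cmd


def steer(spacing_m: float, azimuth_deg: float,
          source_master: Optional[str] = None, dry_run: bool = False) -> List[List[str]]:
    """Re-steer by unloading the echo-cancel module and loading it again."""
    unload = ["pactl", "unload-module", "module-echo-cancel"]
    load = load_echo_cancel_cmd(spacing_m, azimuth_deg, source_master)
    if not dry_run:
        # Unloading fails harmlessly if the module is not loaded yet.
        subprocess.run(unload, check=False)
        subprocess.run(load, check=True)
    return [unload, load]
```

For the 96 mm array, something like `steer(0.096, 90.0, source_master=...)` with your capture device's name from `pactl list short sources` would reload the module pointed broadside. Reloading briefly drops the echo-cancel source, so anything recording from it has to cope with that.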

I added some rough examples of a KWS-triggered TDOA to GitHub (StuartIanNaylor/g-kws: Google Streaming KWS Arm Install Guide). The biggest problem with TDOA is that it bounces around on any voice, so fixing the direction when the keyword (KW) is detected works really well.
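A KWS-triggered TDOA could look roughly like this: keep a short buffer of stereo audio and, only when the keyword fires, estimate the delay between the two mics with GCC-PHAT and convert it to an angle. This is a generic NumPy GCC-PHAT sketch, not the code in the g-kws repo; the function names, the 96 mm spacing and the far-field arcsin conversion are assumptions.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s


def gcc_phat(sig, ref, fs, max_tau=None, interp=1):
    """Delay of `sig` relative to `ref` in seconds (positive: `sig` lags `ref`)."""
    n = sig.shape[0] + ref.shape[0]
    SIG = np.fft.rfft(sig, n=n)
    REF = np.fft.rfft(ref, n=n)
    R = SIG * np.conj(REF)
    R /= np.abs(R) + 1e-12  # PHAT weighting: keep phase only
    cc = np.fft.irfft(R, n=interp * n)
    max_shift = interp * n // 2
    if max_tau is not None:
        # Only search delays that are physically possible for this spacing.
        max_shift = max(1, min(int(interp * fs * max_tau), max_shift))
    # Reorder so index 0 is the most negative lag and the middle is zero lag.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    shift = int(np.argmax(np.abs(cc))) - max_shift
    return shift / float(interp * fs)


def doa_degrees(tau, spacing_m):
    """Far-field angle from broadside (0 deg = straight ahead of the pair)."""
    x = np.clip(SPEED_OF_SOUND * tau / spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(x)))


if __name__ == "__main__":
    fs, spacing = 16000, 0.096
    rng = np.random.default_rng(0)
    left = rng.standard_normal(4096)
    right = np.concatenate((np.zeros(3), left[:-3]))  # right lags by 3 samples
    tau = gcc_phat(right, left, fs, max_tau=spacing / SPEED_OF_SOUND)
    print(f"tau = {tau * fs:.0f} samples, angle = {doa_degrees(tau, spacing):.1f} deg")
```

Running this only on the audio around a keyword hit, rather than continuously, is what stops the estimate jumping to whoever else is talking. Before using the angle as a steering azimuth it needs converting to whatever convention the beamformer expects, and the sign depends on which channel is which.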

PS: if you have a ReSpeaker USB Mic Array, there is a freeze command, so you can also lock the beamforming on a KW hit, which pairs well with a streaming KWS.
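One way to wire that up is a small lock that freezes the beamformer when the keyword fires and releases it after a hold time. This sketch assumes Seeed's `tuning.py` script from the usb_4_mic_array repo accepts a `FREEZEONOFF` parameter (1 = freeze adaptive beamformer updates, 0 = adaptive); check the wiki FAQ for your firmware. `BeamLock`, `tuning_py_set_freeze` and the 5 s hold time are hypothetical.

```python
import subprocess
import sys
import time
from typing import Callable, Optional


def tuning_py_set_freeze(frozen: bool, script: str = "tuning.py") -> None:
    """ASSUMPTION: Seeed's tuning.py accepts `FREEZEONOFF 0|1` (needs pyusb)."""
    subprocess.run([sys.executable, script, "FREEZEONOFF", "1" if frozen else "0"],
                   check=True)


class BeamLock:
    """Freeze the beam on a keyword hit, release it after `hold_s` seconds."""

    def __init__(self, set_freeze: Callable[[bool], None], hold_s: float = 5.0,
                 clock: Callable[[], float] = time.monotonic):
        self.set_freeze = set_freeze
        self.hold_s = hold_s
        self.clock = clock
        self.frozen_until: Optional[float] = None

    def on_keyword(self) -> None:
        # Freeze straight away so the beam stays on whoever said the keyword;
        # a repeat hit while frozen just extends the hold.
        if self.frozen_until is None:
            self.set_freeze(True)
        self.frozen_until = self.clock() + self.hold_s

    def tick(self) -> None:
        # Call periodically (e.g. once per audio frame); unfreeze when expired.
        if self.frozen_until is not None and self.clock() >= self.frozen_until:
            self.set_freeze(False)
            self.frozen_until = None
```

With a streaming KWS you would call `on_keyword()` from the detection callback and `tick()` from the audio loop; a VAD end-of-speech event could replace the fixed hold time.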

https://wiki.seeedstudio.com/ReSpeaker_Mic_Array_v2.0/#faq

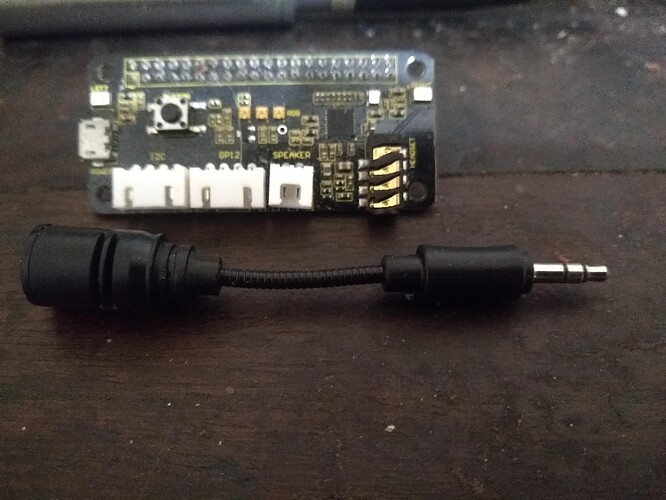

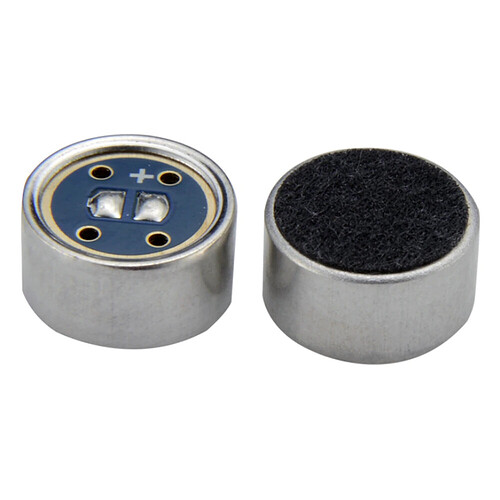

It's a shame the 2-mic HATs seem to fight with PulseAudio. Maybe someone will come up with a solution to that, but to be honest I prefer plain electrets soldered to a stereo 3.5 mm jack plug and mounted in grommets in whatever enclosure you use.

For testing I just used a bit of aluminium angle, which ended up shorter than intended because the split part of the cable was a tad too short, but it is just two electrets plugged directly into a stereo USB sound card.