I would definitely separate the web interface, which is an interface, and the dialogue manager, which is a core behavioural component.

I think separation is better. I see the web interface more as a settings manager (plus some extras).

The dialogue manager is, like koan said, a core component.

I agree with @koan and @romkabouter

Separation of concern is instrumental for future improvement and maintenance.

That’s the way it should be. With the steps @synesthesiam has planned, we come very close to a Snips replacement. But Snips always lacked a management web console like the one Rhasspy has. If the individual components run as separate services, communicate with each other via the MQTT protocol, and there is a separate management web console, it will be very pleasant to work with the “new” Rhasspy.

OK, I have a basic set of Hermes compatible services:

- ASR
  - rhasspy-asr-pocketsphinx-hermes (Docker image)
  - Uses `acoustic_model`, `dictionary.txt`, and `language_model.txt` from Rhasspy profile
- NLU
  - rhasspy-nlu-hermes (Docker image)
  - Uses `intent.json` from Rhasspy profile
- Dialogue Manager
  - rhasspy-dialogue-hermes (Docker image)
  - Handles sessions and listens for wake word detected event
- TTS
  - rhasspy-tts-cli-hermes (Docker image)
  - Calls external program for text to speech (optionally plays audio too)
- Microphone
  - rhasspy-microphone-cli-hermes (Docker image)
  - Streams raw audio from external program (e.g., `arecord`)
- Hotword
  - rhasspy-wake-porcupine-hermes (Docker image)
  - Listens for MQTT audio and does wake word detection

All of these services take --host, --port, and --siteId parameters to configure MQTT. I’d also recommend passing --debug to see what’s going on.
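To make the shared command-line interface concrete, here is a minimal sketch of how a service could parse these options. Only the four flag names come from the post above; the function name, the defaults, and the help strings are assumptions for illustration, not the actual services' code.

```python
import argparse


def build_parser(prog: str) -> argparse.ArgumentParser:
    """Build the MQTT options every service shares (flag names from the post)."""
    parser = argparse.ArgumentParser(prog=prog)
    # Assumed defaults: a local broker on the standard unencrypted MQTT port.
    parser.add_argument("--host", default="localhost", help="MQTT broker host")
    parser.add_argument("--port", type=int, default=1883, help="MQTT broker port")
    # Assumed default site ID; pick whatever your setup uses.
    parser.add_argument("--siteId", default="default", help="Hermes site ID")
    parser.add_argument("--debug", action="store_true", help="Print debug messages")
    return parser


# Example: the arguments a kitchen satellite's microphone service might receive.
args = build_parser("rhasspy-microphone-cli-hermes").parse_args(
    ["--host", "192.168.1.10", "--siteId", "kitchen", "--debug"]
)
print(args.host, args.port, args.siteId, args.debug)
```

Sharing one parser like this would keep the options identical across services, which matters if a supervisor or Compose file passes the same arguments to all of them.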

Next Steps

Going forward, I need help to figure out how we should deploy these services and how to incorporate them into the main Rhasspy interface.

As it stands, I’ve been able to get each of the services above to build with PyInstaller, so they can be deployed as Debian packages, Docker images, or source code (virtual environment). I still need to add builds for ARM, of course.

Questions

- Should Rhasspy become just a call out to supervisord or Docker compose? We can always bundle `mosquitto` if they don’t want to install an MQTT broker.
- How do we handle profile files and training? Is this just a separate service? What if different services run on different machines, but need access to specific profile files?
- What can we do to make it easier for people to understand and submit pull requests for individual services (or add new ones)?

Rhasspy’s main documentation and main repository could have links to all repositories of the services. General issues can still be opened on the main repository.

If all services run in their own container and the Rhasspy interface too, starting Rhasspy can come down to running Docker Compose indeed. We can even publish Docker Compose files for satellites, for specific combinations of services, and so on.

This is awesome!

The rhasspy-hotword service should not listen to MQTT streamed audio frames, because in a base/satellite configuration this will spam the MQTT broker all the time.

I think there should be a rhasspy-satellite service that handles the audio input AND output and does the wake word detection. This is pretty much what Amazon, Google and Snips are doing, and it is the single requirement for a satellite to work.

Only when the wake word is detected will this service notify the MQTT broker and start streaming audio frames.

This service should also output audio (feedback sounds, TTS-generated WAV files, etc.) from MQTT when asked.

The base services like ASR and NLU will have to use the same profile files as they are pretty closely related.

The rest of the services are not related to each other as long as they use the Hermes protocol so they can be easily separated.

Installing a base or satellite via a docker compose is perfect.

How can we choose which ASR, NLU and TTS we want for the base then? Should the base include multiple systems like today (kaldi, pocketsphinx, deepspeech, etc)? If they are separated (which sounds better) maybe there can be a simple docker compose generator script to select these?

What do you think?
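A compose generator along those lines could be quite small. The sketch below is only an illustration: the service names are the ones listed earlier in the thread, but using them as image names, the `CATALOG` layout, the `./profile` volume, and the function names are all assumptions. It emits JSON, which is a subset of YAML, so no extra dependency is needed.

```python
import json

# Hypothetical catalog: system name -> image name. Only services named in this
# thread are listed; Kaldi, DeepSpeech, etc. would be added the same way.
CATALOG = {
    "asr": {"pocketsphinx": "rhasspy-asr-pocketsphinx-hermes"},
    "nlu": {"default": "rhasspy-nlu-hermes"},
    "tts": {"cli": "rhasspy-tts-cli-hermes"},
}


def _service(image: str, site_id: str) -> dict:
    """One Hermes service pointed at the bundled broker."""
    return {
        "image": image,
        "command": ["--host", "mqtt", "--port", "1883", "--siteId", site_id],
        "depends_on": ["mqtt"],
        # Assumed location of the shared Rhasspy profile files.
        "volumes": ["./profile:/profile"],
    }


def base_compose(asr: str, nlu: str, tts: str, site_id: str = "default") -> dict:
    """Build a Compose-style dict for a base with the chosen systems."""
    services = {
        "mqtt": {"image": "eclipse-mosquitto", "ports": ["1883:1883"]},
        "dialogue": _service("rhasspy-dialogue-hermes", site_id),
    }
    for kind, name in {"asr": asr, "nlu": nlu, "tts": tts}.items():
        if name not in CATALOG[kind]:
            raise ValueError(f"unknown {kind} system: {name}")
        services[kind] = _service(CATALOG[kind][name], site_id)
    return {"services": services}


print(json.dumps(base_compose("pocketsphinx", "default", "cli"), indent=2))
```

A satellite variant would follow the same pattern with the microphone and wake word services instead of ASR, NLU and TTS.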

I don’t think this is correct if we see the Hermes services as a Snips replacement.

The MQTT hotword service should listen to the streamed audio, because that is what it’s for.

The audio stream itself comes from the microphone service, which is fed by the internal mic or other sources. In Snips, all of this runs against an internal broker, so we should definitely deploy mosquitto with Rhasspy if we want this.

The audio service does indeed spam the broker, but this only affects the network if an external broker is used or if the audio comes from other sources (like my streamer).

The satellite service that Snips used is basically a hotword service and an audio server. It contains some code so that, when a hotword is detected on the internal MQTT audio stream, the service starts relaying the stream to the base broker.

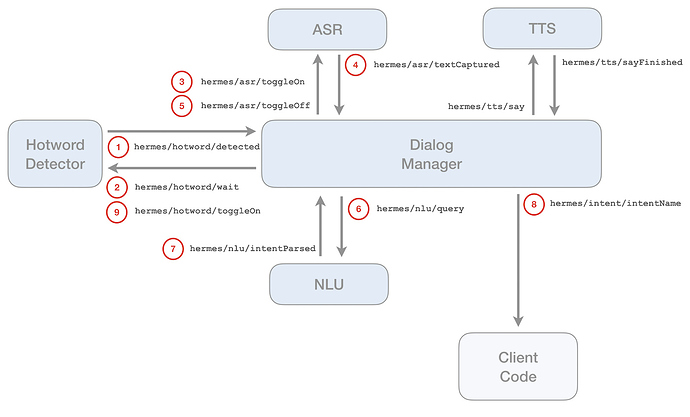

For Snips, the main component is the DialogueManager. This service is the one putting the different services to work via mqtt messages.

A nice picture of the interactions can be found here: https://snips.gitbook.io/tutorials/t/technical-guides/listening-to-intents-over-mqtt-using-python

This is probably a little outdated, but basically still correct.

I hadn’t thought of an internal MQTT broker between the wake word and the audio server…

That’ll work but might consume additional resources (CPU and memory) to constantly pack, unpack and push/pull the audio frames to/from this internal broker and increase installation complexity… maybe this can be simpler if these services are in the same process and use the extracted audio frames from record input, pass them to the wake word engine and when detected push them to the base MQTT broker… Although I really like the idea of having separated input handler and output handler…

Both have pros and cons I guess…

Since I think modularity should be one of the main objectives of Rhasspy, I’ll rally behind the internal MQTT broker proposal. The resource usage can be addressed later if required (premature optimization…).

So we’ll have a base with:

- the main MQTT broker

- ASR of choice

- NLU of choice

- TTS of choice

- DialogueManager/MainManager

And a satellite with:

- an internal MQTT broker

- Input of choice

- Output of choice

- Wakeword of choice

- SatelliteManager (can handle satellite registration and bridge both MQTT brokers to avoid maintaining many connections)

And somewhere:

- the web UI that connects to the main manager via an HTTP REST API?

Does this look correct?

It seems Rhasspy is heading down the MQTT road, which is pretty cool.

I will leave this to the voice specialists, but I just want to be sure that wake word detection is on the satellite. No network flooding when no wake word is detected, please. Maybe a ping here and there so the base knows the satellite is still up, or to update some settings for example, but no continual audio streaming between the two.

What I understand from Snips is that the satellite listens, detects the wake word, and only then streams the audio to the base, so the base can do ASR, then NLU, then intent recognition.

Also, the satellite could have a minimal HTTP API so we can see how it is doing, restart it (even reboot the Pi), and send TTS speech to it. Or we send TTS speech to the base with a siteid and the base forwards it to the right satellite. That way anything can send speech commands to any device via HTTP without having MQTT itself.

And related to this: https://github.com/synesthesiam/rhasspy/issues/102

We would need /api/devices, even if only on the base, to get all the Rhasspy devices via HTTP: every base and satellite with its siteid, IP, and port. Then a single HTTP request reveals the whole setup, so we know which siteid to send speech commands to (or we send them to the base with the right siteid).
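To show what such an endpoint might return, here is a rough sketch of a response shape. The endpoint does not exist yet, so everything here is hypothetical: the `Device` fields simply mirror the siteid, IP, and port mentioned above, and the `role` field, the addresses, and the port numbers are made-up example values.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class Device:
    site_id: str
    role: str  # assumed values: "base" or "satellite"
    ip: str
    port: int  # HTTP port of that device's API


def devices_response(devices: List[Device]) -> str:
    """Serialize the device list as a hypothetical /api/devices JSON body."""
    return json.dumps({"devices": [asdict(d) for d in devices]}, indent=2)


def find_device(body: str, site_id: str) -> Optional[dict]:
    """Client side: look up where to send a speech command for a site ID."""
    for device in json.loads(body)["devices"]:
        if device["site_id"] == site_id:
            return device
    return None


body = devices_response(
    [
        Device("base", "base", "192.168.1.10", 12101),
        Device("kitchen", "satellite", "192.168.1.21", 12101),
    ]
)
print(find_device(body, "kitchen"))
```

A home automation plugin could then fetch this list once and address each satellite by site ID, without needing an MQTT client of its own.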

I also think siteid should be a setting separate from the MQTT settings. Actually, I don’t have MQTT and don’t need it to get notified in the Jeedom plugin for intent recognition and to send speech commands. This would allow, even if base and satellites talk via MQTT, controlling all of this from another external device without MQTT. It would give powerful, lightweight control over the entire setup, and allow any smart home solution to handle an entire Rhasspy setup with all its satellites without having MQTT itself.

That’s what the ‘internal’ MQTT broker @romkabouter and @fastjack are talking about is for: the hotword detector and audio service on the satellite device run on the same device together with a MQTT broker and satellite manager, and these four services each run in a separate Docker container and are connected to an internal (virtual) network. So no network flooding is happening, because the ‘network’ is contained on the device.

At the same time, these containers are also connected to the ‘real’ network, so when the hotword detector detects a hotword, a rhasspy-satellite service starts relaying the audio messages received on the internal MQTT broker to the external MQTT broker on your home network, and your Rhasspy server starts receiving audio for command handling. When the text is captured, the satellite manager stops relaying the audio to the external MQTT broker.
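The relay logic described here boils down to a small gate. Below is a minimal sketch with the MQTT clients left out so it stays self-contained: `publish_external` stands in for whatever publishes to the external broker, and the class and method names are invented. The topic names follow the Snips Hermes conventions as far as I remember them, so please check them against the protocol reference before relying on this.

```python
import json
from typing import Callable


class AudioRelay:
    """Forward internal audio frames to the external broker only during a session."""

    def __init__(self, site_id: str, publish_external: Callable[[str, bytes], None]):
        self.site_id = site_id
        self.publish_external = publish_external
        self.relaying = False

    def on_internal_message(self, topic: str, payload: bytes) -> None:
        """Handle a message received from the satellite's internal broker."""
        if topic.startswith("hermes/hotword/") and topic.endswith("/detected"):
            # Hotword heard locally: tell the base and open the gate.
            self.relaying = True
            self.publish_external(topic, payload)
        elif topic == f"hermes/audioServer/{self.site_id}/audioFrame":
            if self.relaying:
                self.publish_external(topic, payload)

    def on_external_message(self, topic: str, payload: bytes) -> None:
        """Handle a message received from the external (home network) broker."""
        if topic == "hermes/asr/textCaptured":
            # Close the gate once the ASR has captured text for this site.
            if json.loads(payload).get("siteId") == self.site_id:
                self.relaying = False


sent = []
relay = AudioRelay("kitchen", lambda topic, payload: sent.append(topic))
relay.on_internal_message("hermes/audioServer/kitchen/audioFrame", b"...")  # dropped
relay.on_internal_message("hermes/hotword/default/detected", b'{"siteId": "kitchen"}')
relay.on_internal_message("hermes/audioServer/kitchen/audioFrame", b"...")  # relayed
relay.on_external_message("hermes/asr/textCaptured", b'{"text": "", "siteId": "kitchen"}')
print(sent, relay.relaying)
```

A real satellite manager would probably also want a timeout so the gate closes if the base never answers.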

This sounds great, @fastjack, @romkabouter, and @koan!

For the non-Docker case, we could have the hotword service listen to local (internal) MQTT broker and publish to a remote one. We could even create a special topic for the internal broker that is meant for raw audio chunks instead of tiny WAV files to avoid overhead. Another option is to accept raw audio directly from an external program or over UDP with gstreamer.
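To put a number on the per-message overhead, this small sketch wraps one raw PCM chunk in a WAV container using Python's standard `wave` module and compares sizes. The chunk size and audio format (16 kHz, 16-bit, mono) are assumptions chosen for the example, not necessarily what the services send.

```python
import io
import wave


def wrap_in_wav(pcm: bytes, rate: int = 16000, width: int = 2, channels: int = 1) -> bytes:
    """Wrap raw PCM samples in a WAV container held in memory."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(channels)
        wav_file.setsampwidth(width)
        wav_file.setframerate(rate)
        wav_file.writeframes(pcm)
    return buffer.getvalue()


def read_pcm(wav_bytes: bytes) -> bytes:
    """Recover the raw PCM samples from an in-memory WAV file."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav_file:
        return wav_file.readframes(wav_file.getnframes())


chunk = bytes(2048)  # assumed chunk: 1024 samples of 16-bit mono silence
wav_chunk = wrap_in_wav(chunk)
overhead = len(wav_chunk) - len(chunk)
print(f"{overhead} header bytes per {len(chunk)}-byte chunk")
```

The header itself is small, so most of the cost is likely the packing and unpacking on every frame rather than the extra bytes; a raw-audio topic would skip both.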

It seems like the web UI could be split into the part that lets you test and train, and the configuration portion. I’m not sure what configuration becomes when Rhasspy is split into services. Does the web server generate a Docker compose file or a supervisord.conf file?

I hadn’t thought of that, awesome idea!

Maybe we can have a common shared space on the host that every Docker service uses to read/write configuration files.

HassIO does that as well, with folders like config/share/ssl and such.

I use the proximanager for certificate auto-renewal, and the files are placed in /share.

My HassIO instance uses these same SSL files.

That way, you could have one UI controlling the configuration files, while the services each use their own specific config, just like now.

As much as I like the MQTT support, I still believe that the excellent ways of using Rhasspy should be kept so that MQTT is always optional.

The underlying communication layer can be either websocket events on a single endpoint or a MQTT broker. Both work pretty much the same way. They can be secured using tls and credentials so it should be fine.

As long as the websocket events are the same as the MQTT topic/message it should be easy.

The base DialogueManager/MainManager can provide a default websocket endpoint for all the other services (asr, nlu, tts, satellite) to subscribe (like MQTT).

The satellite manager can provide the same for local communication.

An additional MQTT bridge service could also forward websocket events to an MQTT broker and relay MQTT messages from this broker as websocket events, for Hermes protocol over MQTT compatibility if required.
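If websocket events mirror MQTT topic/message pairs, the bridge mostly needs a reversible envelope. Here is one possible sketch; the JSON envelope with `topic` and `payload` keys is purely an assumption, and the payload is base64-encoded because Hermes audio payloads are binary while JSON text is not.

```python
import base64
import json
from typing import Tuple


def mqtt_to_ws_event(topic: str, payload: bytes) -> str:
    """Pack an MQTT message into a text frame suitable for a websocket."""
    return json.dumps(
        {"topic": topic, "payload": base64.b64encode(payload).decode("ascii")}
    )


def ws_event_to_mqtt(event: str) -> Tuple[str, bytes]:
    """Unpack a websocket text frame back into an MQTT topic and payload."""
    data = json.loads(event)
    return data["topic"], base64.b64decode(data["payload"])


event = mqtt_to_ws_event("hermes/asr/startListening", b'{"siteId": "kitchen"}')
print(event)
print(ws_event_to_mqtt(event))
```

Because the mapping is lossless in both directions, services would not need to know whether a message travelled over a websocket or through a broker.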

That way Rhasspy does not depend on another piece of software to work out of the box.

I also like MQTT a lot but it does not seem like a really required dependency.

The web UI’s main objective should indeed be intents/slots management (creation, editing, etc.), training, and testing (unit tests à la Snips would be awesome).

New user here…been a lurker for a while. I like how this is proceeding! I am not a domain expert in voice systems, but have been interested in this technology for quite some time. I have a Matrix Voice and a ReSpeaker gathering dust that I hope to put to use soon! Especially the Matrix Voice.

Some comments about the architecture…

It seems that things are moving in the direction of a “distributed” system. That is, one (or more?) “base” stations whose responsibility is to consume audio from a satellite, perform ASR, NLU, TTS (sending the audio back to a satellite for output) and possibly dialog management, whilst the satellite’s responsibility is to produce audio (for input to ASR) and consume audio (presumably from TTS). From an architectural point of view, it seems important to keep in mind that the satellite might (in the future) be a (standalone) device with limited resources (such as a Matrix Voice).

With this in mind, whilst it might be the path of least resistance for an initial implementation to implement a satellite node using an “internal” MQTT broker this certainly seems like overkill in the long run for a “local” service to manage the satellite. As long as the internal MQTT broker is an implementation detail of the satellite and does not architecturally “leak” outside the boundaries of the satellite, I don’t see a problem. We should keep in mind the possibility of implementing a satellite on a standalone device with more limited resources such as the Matrix Voice ESP32. As such, as long as the satellite implements the correct “interfaces” that the base station requires, then we keep the option open to reimplement the satellite functionality in a more compact and resource efficient manner.

It occurs to me that exposing wake word, LED control, etc. outside of the satellite is really unnecessary and inappropriate, as this is arguably an implementation detail of a “particular type of satellite” and need not be globally exposed. One might envision other types of satellites that don’t operate the same way (with a wake word), such as activation via face recognition, a button, or presence detection. I’m not saying that these necessarily make sense right now, but the architecture should not preclude other types of satellites. Thus, there should be no need to expose these details outside of the satellite…it’s just a producer of recorded audio and a consumer of audio for playback. One might envision a sort of “plugin” architecture for the satellite that lets one plug in various “local” functionality in order to operate some arbitrary satellite device. Home Assistant’s plugin and event architecture comes to mind as one possible architectural example. Purpose-built software for (e.g.) a Matrix Voice is another example of how one might implement a satellite.

There was also a question about how to “configure” all of the various “nodes” in the system. Why not via the system-wide “global” MQTT broker? The broker is easily accessible to all nodes in the system (base and satellite), and it provides a simple form of persistence for the configuration. That means that each satellite (and base station) only needs a small “bootstrap” configuration to operate, i.e. its assigned ID (node ID/site ID?) and the URL and credentials necessary to access the global MQTT broker. Using the MQTT broker would also allow the web interface to easily author and view the configuration for each satellite (and base station) and publish it to an appropriate MQTT topic. If the satellite is listening to that topic, it can easily reload the config dynamically. Same for the web interface: all it needs is the URL and credentials to access the global MQTT broker to configure satellites and the base station.
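As a rough sketch of that idea, a node would carry only its bootstrap settings and apply whatever configuration arrives on its own topic. The topic layout `rhasspy/config/<siteId>`, the class names, and the config keys below are all hypothetical, and the MQTT client itself is left out to keep the example self-contained. The persistence would presumably come from publishing the config as a retained message, which the broker hands to each new subscriber of that topic.

```python
import json
from dataclasses import dataclass


@dataclass
class Bootstrap:
    """The only settings stored on the node itself."""

    site_id: str
    broker_url: str
    username: str = ""
    password: str = ""


def config_topic(site_id: str) -> str:
    """Hypothetical per-node configuration topic."""
    return f"rhasspy/config/{site_id}"


class Node:
    def __init__(self, bootstrap: Bootstrap):
        self.bootstrap = bootstrap
        self.config: dict = {}

    def on_message(self, topic: str, payload: bytes) -> bool:
        """Reload the config when a message arrives on this node's topic."""
        if topic != config_topic(self.bootstrap.site_id):
            return False
        self.config = json.loads(payload)
        return True


node = Node(Bootstrap(site_id="kitchen", broker_url="mqtt://192.168.1.10:1883"))
# What the web interface might publish (retained) for this satellite:
node.on_message("rhasspy/config/kitchen", b'{"wake": {"system": "porcupine"}}')
print(node.config)
```

The web interface would need exactly the same bootstrap information, plus permission to publish to every node's config topic.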

I know that some of the above assumes a global MQTT broker is part of the core architecture. A pub/sub broker such as this is pretty useful in a distributed system, and MQTT is a proven service. Maybe there are ways of doing the same thing using HTTP and/or websockets, but I’m not familiar with how these might be used in the same manner. This is not to say that some HTTP or websocket APIs might also be useful, but it does seem as if MQTT is a reasonable choice to base the architecture on.

My thoughts. Keep up the good work! This is pretty cool!
