I think ASR is much more complex than KWS. Generally in Rhasspy, ASR is imported, whereas there is currently a real possibility that KWS in Rhasspy could be done in-house, so KWS is much more likely to be the first step and has more pressing needs.

The fundamentals of dataset preparation are similar for both. Practically everywhere uses general datasets, whereas tailoring a dataset to the voice in use can massively increase accuracy, and this is equally true of ASR and KWS.

Datasets are the code of a model, yet we have very few options over what code makes up a model, and hence we work with an extremely limited array of models.

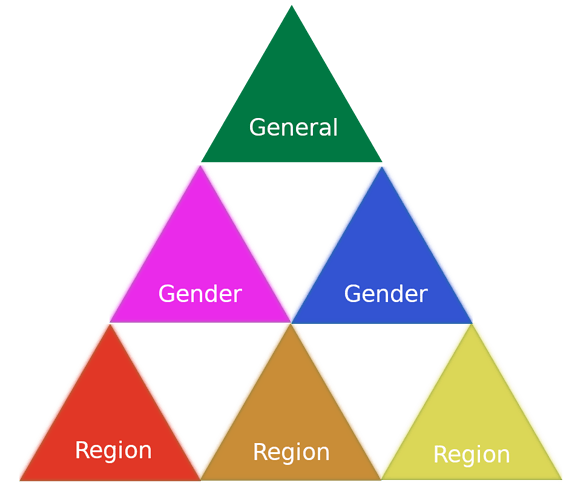

Each demographic dataset is just a collection of word folders, and the image above represents a single language model.

A dataset should be a dynamic snapshot of parameters fed into a databank, which then creates tailored datasets and the corresponding models.
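One way to picture "a dataset as a snapshot of parameters" is a small, serialisable spec. The sketch below is hypothetical: `DatasetSpec`, its fields and the hash-based `snapshot_id` are illustrative assumptions, not part of Rhasspy or any existing tool. Because the spec serialises deterministically, the same parameters always map to the same ID, so a built dataset or model could be stored and reused under that name.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DatasetSpec:
    """Hypothetical parameter snapshot describing one tailored dataset."""
    language: str
    keywords: tuple
    # Target share per gender, e.g. {"female": 0.5, "male": 0.5}.
    gender_mix: dict = field(default_factory=dict)
    regions: tuple = ()
    include_own_voice: bool = False

    def to_json(self) -> str:
        # sort_keys (applied recursively) gives a deterministic text form,
        # so equal specs always serialise identically.
        return json.dumps(asdict(self), sort_keys=True)

    def snapshot_id(self) -> str:
        # Short content hash: a stable name to store the dataset/model under.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()[:12]

    @classmethod
    def from_json(cls, text: str) -> "DatasetSpec":
        data = json.loads(text)
        # JSON has no tuples, so restore them to keep equality with the original.
        data["keywords"] = tuple(data["keywords"])
        data["regions"] = tuple(data["regions"])
        return cls(**data)


spec = DatasetSpec("en", ("hey", "rhasspy"), {"female": 0.5, "male": 0.5}, ("uk", "us"))
print(spec.snapshot_id())
```

Hashing the JSON rather than storing a running counter means two people asking for the same mix would get the same ID, which is what makes reuse possible.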

Whether a dataset is just a general model, gender-biased or region-biased, and which genders and regions are included, should be a choice, with preferred percentages of words. Implementation is actually quite easy with a metadata-driven OverlayFS filesystem.
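"Metadata-driven" could be as simple as tagging each demographic layer in the databank and filtering on those tags. This is a minimal sketch assuming a hypothetical layout where each layer is a directory of word folders labelled with one gender and one region; the `Layer` type and `select_layers` function are illustrative names, not an existing API.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    path: str    # directory of word folders in the databank (hypothetical layout)
    gender: str
    region: str


def select_layers(layers, genders, regions):
    """Return the paths of layers matching the chosen genders and regions.

    The result is ordered by preference: first by position in `regions`,
    then by position in `genders`, so it can be used as a layer stack.
    """
    chosen = [l for l in layers if l.gender in genders and l.region in regions]
    chosen.sort(key=lambda l: (regions.index(l.region), genders.index(l.gender)))
    return [l.path for l in chosen]


bank = [
    Layer("/bank/en/us_m", "male", "us"),
    Layer("/bank/en/uk_f", "female", "uk"),
    Layer("/bank/en/uk_m", "male", "uk"),
    Layer("/bank/en/ie_f", "female", "ie"),
]
print(select_layers(bank, ["female", "male"], ["uk", "us"]))
```

Here the Irish layer is dropped and the UK layers come first because "uk" is listed first; changing the preference lists changes the tailored stack without touching any files.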

It's also quite efficient in terms of server storage, as the 128 layers of an OverlayFS filesystem are purely virtual, linking back to the original databank but presented as the merged layer.
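The merged view itself is just a mount. The sketch below builds the standard `mount -t overlay` command from an ordered list of lower layers; the paths are hypothetical. Note the kernel's ordering rule: the leftmost `lowerdir` entry is the top layer, so a "My voice" layer should come first. Older kernels reject a read-only overlay (no `upperdir`) with only one lower directory, so this sketch conservatively requires two.

```python
def overlay_mount_command(lower_dirs, merged, upper=None, work=None):
    """Build an OverlayFS mount command as an argument list.

    lower_dirs[0] becomes the TOP lower layer: the kernel stacks lowerdir
    entries from the rightmost (bottom) to the leftmost (top).
    """
    if (upper is None) != (work is None):
        raise ValueError("upperdir and workdir must be given together")
    if upper is None and len(lower_dirs) < 2:
        raise ValueError("a read-only overlay needs at least two lower directories")
    for d in lower_dirs:
        # ':' separates lowerdir entries and ',' separates options; this sketch
        # does not implement escaping, so refuse such paths.
        if ":" in d or "," in d:
            raise ValueError(f"path needs escaping for mount options: {d!r}")
    opts = "lowerdir=" + ":".join(lower_dirs)
    if upper is not None:
        opts += f",upperdir={upper},workdir={work}"
    return ["mount", "-t", "overlay", "overlay", "-o", opts, merged]


cmd = overlay_mount_command(["/bank/my_voice", "/bank/en/uk_f", "/bank/en/us_m"], "/mnt/dataset")
print(" ".join(cmd))
```

Running it for real needs root, e.g. `subprocess.run(cmd, check=True)`. No audio is copied; the merged directory only presents the databank files.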

No one seems to be making dataset construction easy, and if it isn't easy, then the fundamental process of model creation becomes hard.

Both LinTO and Mycroft have done work on data collection but very little on dataset construction.

LinTO have done quite a bit on creating a model from a dataset, and https://github.com/linto-ai/linto-desktoptools-hmg/wiki/Training-a-model is excellent: it allows you to parameterise the MFCC features and also creates a parameter file, so once the model is prepared it works off the bat with their KWS.

https://github.com/linto-ai/linto-command-module

Also, with the dataset above, a final “My voice” layer can greatly add to accuracy. Each demographic is just an OverlayFS lower layer with the common word folders that exist in all of them, with the exception of “My voice”, which could hold overrides for the keyword only; you could also get a transcript of the words required.
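The "transcript of words required" for the “My voice” layer could be derived by comparing the keywords against what the user has already recorded. A minimal sketch; `recording_script`, the per-word count mapping and the target count are hypothetical illustrations.

```python
def recording_script(keywords, existing_counts, target_per_word):
    """Return (word, recordings_still_needed) pairs for the "My voice" layer.

    keywords:        words the user should record, in prompt order
    existing_counts: {word: number of clips already in the "My voice" layer}
    target_per_word: desired number of clips per word (hypothetical policy)
    """
    script = []
    for word in keywords:
        missing = target_per_word - existing_counts.get(word, 0)
        if missing > 0:
            script.append((word, missing))
    return script


for word, n in recording_script(["hey", "rhasspy"], {"hey": 3}, 5):
    print(f"Please say '{word}' {n} more time(s)")
```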

OverlayFS is quite interesting because you can white out word folders that don’t exist in every demographic dataset, so that overall word representation is even, and a tally can be returned as info on the current merged dataset you are trying to produce.
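The evening-out step can be planned before touching the filesystem: find the word folders missing from at least one layer (candidates for whiteouts) and tally the samples the merged view would show for the rest. This is a sketch under two assumptions: each layer is summarised as `{word: sample_count}`, and file names are unique across layers (OverlayFS merges same-named directories, but a same-named file in a higher layer hides the lower one). An OverlayFS whiteout is a character device with device number 0/0; `make_whiteout` creates one in a hypothetical mask layer stacked on top and needs root.

```python
import os
import stat


def plan_even_merge(layers):
    """layers: {layer_name: {word: sample_count}} for each lower layer.

    Returns (words_to_hide, tally): word folders missing from at least one
    layer, and the per-word sample count of the merged view after hiding them.
    """
    word_sets = [set(words) for words in layers.values()]
    if not word_sets:
        return [], {}
    common = set.intersection(*word_sets)
    every = set.union(*word_sets)
    to_hide = sorted(every - common)
    # Assumes unique file names across layers, so merged counts simply add up.
    tally = {w: sum(words[w] for words in layers.values()) for w in sorted(common)}
    return to_hide, tally


def make_whiteout(mask_layer, word):
    """Create an OverlayFS whiteout (a 0/0 character device) for `word`
    in a mask layer placed above the others. Requires root (CAP_MKNOD)."""
    os.mknod(os.path.join(mask_layer, word), stat.S_IFCHR | 0o600, os.makedev(0, 0))


hide, tally = plan_even_merge({
    "uk_f": {"hey": 10, "stop": 4},
    "us_m": {"hey": 8, "go": 3},
})
print(hide, tally)
```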

The order and number of tiers, across or down, is a choice of how you wish to tailor your dataset.

The databank is quite huge (Common Voice alone is huge), but the dataset is purely virtual and could likely be saved and stored for reuse, with your own voice added when needed. The same goes for the models: they are not virtual, but for KWS they are actually quite small.

Once it is pruned, indexed by metadata and trimmed into words, we would probably be looking at a databank of words in the region of 50 GB to 100 GB.
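To get a feel for what a 50 GB to 100 GB word databank holds, here is some back-of-the-envelope arithmetic. It assumes every word clip is stored as a 1-second, 16 kHz, 16-bit mono PCM WAV with a canonical 44-byte header; that format is an assumption (a common choice for KWS training), not something the databank mandates, and compressed storage would hold far more clips.

```python
# Assumption: each word clip is a 1 s, 16 kHz, 16-bit mono PCM WAV.
SAMPLE_RATE_HZ = 16_000
BYTES_PER_SAMPLE = 2      # 16-bit PCM
WAV_HEADER_BYTES = 44     # canonical RIFF/WAVE header
CLIP_SECONDS = 1


def clip_bytes(seconds=CLIP_SECONDS):
    """Size of one uncompressed clip in bytes."""
    return WAV_HEADER_BYTES + SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * seconds


def clips_in_budget(gigabytes):
    """Approximate number of clips that fit in `gigabytes` (decimal GB)."""
    return int(gigabytes * 1_000_000_000) // clip_bytes()


for size in (50, 100):
    print(f"{size} GB ~ {clips_in_budget(size):,} one-second clips")
```

Under these assumptions that is roughly 1.5 million clips at 50 GB and a little over 3 million at 100 GB.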

That could produce very accurate KWS models with a lot of custom choice, from a top-level general language model down to the percentage of content that is gender- or region-based.
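Choosing "the percentage contents that is gender or region based" comes down to turning target shares into whole sample counts per word. The sketch below uses the largest-remainder method so the counts always add up to the requested total, then draws that many samples per group with a seeded RNG for reproducibility. The function names, the group labels and the per-group sample pools are hypothetical.

```python
import math
import random


def allocate(total, shares):
    """Split `total` samples between groups in proportion to `shares`
    (e.g. {"female": 0.6, "male": 0.4}) with the largest-remainder method,
    so the integer counts always sum to `total`."""
    weight = sum(shares.values())
    if weight <= 0:
        raise ValueError("shares must have a positive sum")
    exact = {g: total * s / weight for g, s in shares.items()}
    counts = {g: math.floor(x) for g, x in exact.items()}
    leftover = total - sum(counts.values())
    # Give the remaining samples to the groups with the largest fractional parts.
    for g in sorted(exact, key=lambda g: exact[g] - counts[g], reverse=True)[:leftover]:
        counts[g] += 1
    return counts


def pick_samples(pools, shares, total, seed=0):
    """Draw `total` sample paths for one word from per-group pools."""
    rng = random.Random(seed)  # seeded so the same spec gives the same dataset
    picked = []
    for group, n in allocate(total, shares).items():
        pool = pools.get(group, [])
        if n > len(pool):
            raise ValueError(f"only {len(pool)} samples for {group!r}, need {n}")
        picked.extend(rng.sample(pool, n))
    return picked


pools = {"female": [f"f{i}.wav" for i in range(20)], "male": [f"m{i}.wav" for i in range(20)]}
print(pick_samples(pools, {"female": 0.6, "male": 0.4}, 10, seed=1))
```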

We are not going to get anywhere though if we don’t have databanks that are easily available.