It's called Keras or PyTorch. There is no such thing as a cutting-edge KWS; it's merely the cutting-edge machine learning framework it is based on.

As with garbage in, garbage out: a KWS is the initial voice capture and very much dictates whether the stream is garbage or not.

I did post about this last May: "KWS small lean and just enough for fit for purpose".

Of the various frameworks, the problem is the assumption that a KWS should or needs to fit into Rhasspy, when a KWS simply broadcasts from the keyword until silence and is little more than a stream.
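To make the "broadcasts from keyword until silence" idea concrete, here is a minimal sketch in Python. It is not taken from Rhasspy or any KWS project: `stream_from_keyword`, `is_keyword`, `is_silence` and `max_silent_frames` are all hypothetical names, and the two detector callbacks stand in for whatever keyword model and silence detection a real KWS would use.

```python
from typing import Callable, Iterable, Iterator


def stream_from_keyword(
    frames: Iterable[bytes],
    is_keyword: Callable[[bytes], bool],
    is_silence: Callable[[bytes], bool],
    max_silent_frames: int = 3,
) -> Iterator[bytes]:
    """Yield the frames that follow a keyword hit, until a run of silence."""
    streaming = False
    silent_run = 0
    for frame in frames:
        if not streaming:
            # Idle: drop audio until the (hypothetical) detector fires.
            # The frame that triggered the detector is not forwarded.
            if is_keyword(frame):
                streaming = True
                silent_run = 0
            continue
        # Streaming: forward every frame, silent ones included.
        yield frame
        silent_run = silent_run + 1 if is_silence(frame) else 0
        if silent_run >= max_silent_frames:
            streaming = False  # back to idle until the next keyword
```

Whatever consumes the generator (a socket, an MQTT publish, a file) only ever sees the audio between keyword and silence, which is the sense in which the KWS is "little more than a stream".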

Precise uses Keras and TensorFlow; the only problem is that it is out of date.

As for fitting into rhasspy, well, rhasspy is a project that is supposed to be easy to use with minimal knowledge about all the components, so everything has to fit into rhasspy at least in the way that you can select it in the web gui, configure the needed parameters and then use it.

For anything that is not as “beginner friendly” as rhasspy (and even rhasspy has a pretty steep learning curve with all the thresholds and sensitivities to be adjusted), you have the custom script you can use as a KWS, or the option of using voice2json and building everything yourself.

You obviously are pretty much an expert on this, and you have no problem with training a model without proper guidance, and setting up everything on your own so you can play around all you like and get decent results. Most ppl using rhasspy can’t do that, I would struggle to use the console script option rhasspy provides to even wrap a precise-listen command. I would most likely succeed sometime, but only after sinking a few days into the project and being frustrated to no end by it.

So I hope you can understand why it “needs” to fit into rhasspy for it to be usable by more ppl.

I basically use the config provided by respeaker with their, mostly working, drivers.

Default config for my mic:

    # The IPC key of dmix or dsnoop plugin must be unique
    # If 555555 or 666666 is used by other processes, use another one

    # use samplerate to resample as speexdsp resample is bad
    defaults.pcm.rate_converter "samplerate"

    pcm.!default {
        type asym
        playback.pcm "playback"
        capture.pcm "ac108"
    }

    pcm.playback {
        type plug
        slave.pcm "hw:ALSA"
    }

    # pcm.dmixed {
    #     type dmix
    #     slave.pcm "hw:0,0"
    #     ipc_key 555555
    # }

    pcm.ac108 {
        type plug
        slave.pcm "hw:seeed4micvoicec"
    }

    # pcm.multiapps {
    #     type dsnoop
    #     ac108-slavepcm "hw:1,0"
    #     ipc_key 666666
    # }

My main problem is that I don’t understand how ALSA works. I just copy and paste stuff from “guides” that try to explain how it works with nothing more than a bunch of examples.

The problem with Rhasspy is that you are thinking like WordPerfect did with version 5, when an upstart called Microsoft took what WordPerfect did and split it into a suite of interoperable software that also partitioned complexity into ease of use.

A KWS is an HMI (human-machine interface) and should be free of system constraints and of the obsolescence of the system it's used with.

I am no expert and don’t train models, because from what I know I believe the all-in-one infrastructure approach is wrong, so I don’t waste my time.

A KWS should just be pointed at the IP of the system it is an HMI for, and that is it; nothing more is needed.

If that’s soon enough I will install a 4mic on a spare pi tomorrow and set up a working dsnoop asound.conf and share it with you. Are you using the default device in Rhasspy or do you choose the 4 mic directly?

It took me a long time to even half understand it. @rolyan_trauts is definitely the one most knowledgeable about alsa here I would say.

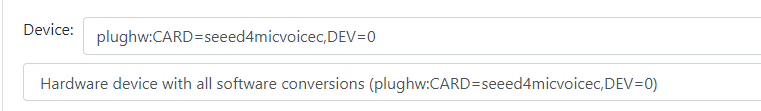

This is what I have set my rhasspy to:

I had some trouble with the default device in docker, selecting the card directly fixed that.

I am also not sure if changing the asound.conf of my host system will automatically work for rhasspy inside docker. Looking inside the container there is no asound.conf, but that is something I am seeing more and more often in current distributions, and I have not yet found any explanation of how it works without the config file.

As for the time frame, I have been without a working wakeword basically since I started playing around with rhasspy back on 2.4.19, so I am in no hurry. The only reason I am trying to get a wakeword working now is because I am tired of things not working when testing via web gui because it behaves differently and spending hours on debugging until I figure out it is working fine with a wakeword.

ALSA is a pain, and every time I don’t use it for a couple of months my MS wipes much of what I know.

The 4 mic is a weird card, as summing the channels is likely detrimental, so for KWS just use it as a single mic with 1 channel.
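If the card is captured with all of its channels, using "1 channel" means picking every n-th sample out of the interleaved stream rather than summing. A minimal sketch, assuming raw interleaved 16-bit PCM in the machine's native byte order (which matches little-endian S16_LE on a Pi); `extract_channel` is a hypothetical helper, not part of Rhasspy or the respeaker drivers:

```python
from array import array


def extract_channel(pcm: bytes, n_channels: int, channel: int = 0) -> bytes:
    """Return one channel from interleaved 16-bit PCM, without summing."""
    if not 0 <= channel < n_channels:
        raise ValueError("channel out of range")
    samples = array("h")      # signed 16-bit integers, native byte order
    samples.frombytes(pcm)    # raises ValueError on a trailing odd byte
    # Interleaved layout is ch0, ch1, ..., chN-1, ch0, ch1, ...
    return samples[channel::n_channels].tobytes()
```

For a 4-channel capture, `extract_channel(chunk, 4, 0)` keeps only the first mic, which is the same effect as turning the other channels down but done in software.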

It's the respeaker dross that confuses things, as it adds much that is not needed.

I have a 4 mic and a linear in my bin of spare parts and have forgotten whether they even have AGC, but because they lack audio out (not the USB one), AEC will not work (the ALSA one; PulseAudio AEC is pretty ineffective on a Pi).

I only use one channel of the mic since I have this weird issue (again) where at least one random channel produces loud noise at random. It sometimes fixes itself with a restart but mostly it doesn’t. So I turned all but the first channel down in alsamixer. I know it is not the best mic to use, especially with the driver issues it has but it was recommended for snips and I got one. I don’t try to do anything fancy with that pi, I don’t expect to be understood over music that is more than just a bit of background noise and I like the led ring so it has to work.

But I have to disagree that it is the respeaker stuff that makes it hard. I have spent hours staring at non-respeaker configs in VMs and on my laptop without understanding much more than I do with the respeaker stuff.

Yeah, 1 channel is fine; it's the AGC (ALC) that I can't remember, and that could help a lot, as you may have little to no signal at a distance.

That complicates things, as when you use the card directly it will be blocked for all other applications. I will set up an asound.conf with dsnoop and make the dsnoop device the default.

You can give it a try.
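As a rough idea of what a dsnoop-as-default setup can look like, here is a sketch based on the config quoted earlier in the thread. It is an illustration, not the config that was later shared: the names `shared_mic` and `mic_snoop` are made up, the hardware names `hw:seeed4micvoicec` and `hw:ALSA` are copied from the quoted config, and the `ipc_key` only has to be unique on the system. Whether it is picked up inside a Docker container is a separate question.

```
pcm.!default {
    type asym
    playback.pcm "playback"
    capture.pcm "shared_mic"
}

pcm.playback {
    type plug
    slave.pcm "hw:ALSA"
}

# plug converts rate, format and channel count on top of the shared capture
pcm.shared_mic {
    type plug
    slave.pcm "mic_snoop"
}

# dsnoop lets several applications capture from the same hardware device
pcm.mic_snoop {
    type dsnoop
    ipc_key 666666
    slave.pcm "hw:seeed4micvoicec"
}
```

With this layout, applications that open the default capture device share the mic instead of one of them locking the card.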

I’m not a big fan of docker and have no understanding of how it interacts with the host's audio. I only know that I've met a lot of people struggling with it.

Docker isn’t that bad; it's the Docker syndrome that gets us all: host and container are completely separate instances, so audio either has to be shared via the docker run command or set up inside the container.

Again, if the KWS were separate it would just work via a network connection.

I have no understanding of how it interacts either, but I tend to break linux systems regularly, like every few hours, when I experiment with stuff. I am just not compatible with it, so I found that using docker to encapsulate my applications helps me keep the host running. I just break the docker container instead and can fix that by starting from scratch.

My containers all run as network:host and quite a few of them run as privileged. I don’t care much about encapsulating for the sake of encapsulating; I just like docker containers better than venvs for separating my stuff.

I’ll look at that too tomorrow. I agree that unfortunately the 4 mic really is probably the worst choice from respeaker. It gives no better if not worse performance than the 2mic and doesn’t have an audio output. Only that nice led ring but that’s really it.

Yep, and I'm not having a go, but so that others might read this before getting one: the 4 mic, apart from the pixel ring, is a whole lot of pointless.

My strategy here is to just buy more SD cards and make clones/backups of stable starting points, so I can just pull the card if things break and reflash a previous image. That’s what I like about Raspberry Pis and co.

Well, I did not know any of that when buying it. I use a USB sound card with a small speaker my father built for me (I suck at soldering and stuff) and it works. The speaker quality is somewhat bad, but since it is only supposed to output TTS I don’t mind.

If I had to get a mic today I would ask my father to solder a small mic to something that can use it and plug that in somewhere. Another reason I like the respeaker 4 mic is the 2 I2C ports it has, which let me connect my 433 MHz chips to it even though the pins are all blocked by the mic. Not an argument to buy one, but a nice-to-have since I already use that feature.

That would work, but once I have to set the same thing up again I have a problem. Quite a few of the docker containers I was running on my pi are now on a family pi because my father wants to use them, too. So being able to copy and paste working dockerfiles, established data and configurations helps.

@Daenara Got one myself and the same, still confused why Mycroft and others recommended them.

Same with the PS3 Eye: really the opposite is true, as unless you have built-in DSP you should just get a single mic that also has sound out on the same card.

All are pretty much similar (2mic to USB card).

The new raspberry 2mic looks pretty nice, though it is a tad expensive and still not in stock anywhere.

But you can just stick an I2S MEMS mic on the GPIO if you want to. There are a lot of options, depending on whether you want to use AEC or not, that all produce relatively similar results, and "more mics = better" is a fallacy unless you have the DSP to use them.

PS: I struggled to find good unidirectional mics and ended up buying 25, which I will flog on eBay at cost plus postage, as not everyone might want 25.

The Raspberry Pi 2 mic also has line in; otherwise the best cheap sound card was this one by far.

Even if it is such an odd shape, it is definitely the only card I know of at that price that can record 24-bit S24_3LE, and electrets just wire in directly, while it is also stereo in.

I still mention 2 mics because, if they are unidirectional, you can point them at different angles and run one on each of 2 KWS instances to get a form of budget beamforming.
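One way to read the "budget beamforming" idea is as a simple selection step: each KWS instance reports a score for its mic, and the stream from the best-scoring mic is the one that gets used. The sketch below only shows that selection, under the assumption that each instance exposes some confidence score; `pick_best_mic`, the mic labels and the threshold value are all hypothetical.

```python
from typing import Mapping, Optional


def pick_best_mic(scores: Mapping[str, float], threshold: float = 0.5) -> Optional[str]:
    """Return the mic whose KWS instance scored highest, or None if no hit."""
    if not scores:
        return None
    # The mic pointing most directly at the speaker should score highest.
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None
```

For example, `pick_best_mic({"left": 0.4, "right": 0.9})` selects `"right"`, while two scores below the threshold are treated as no detection at all.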

I kinda solved my own problem here. It is not perfect in any way, and I sometimes have to adjust the timings because it sometimes takes longer before I get the hotword detected message, but here is a Python script that buffers up to 10 seconds of audio streamed over MQTT and saves the last second or so as a WAV file.

For this to work all the audio has to be streamed over mqtt, as soon as udp streaming is set up it won’t work. It should work with multiple satellites but since I only have my all-in-one system I have not tested that.

    import datetime
    import json
    import os
    from io import BytesIO

    from paho.mqtt.client import Client
    from pydub import AudioSegment

    audio_file = {}     # rolling audio buffer per site ID
    frame_rate = 16000  # samples per second of the streamed audio


    def audio_callback(client, userdata, message):
        topic_parts = message.topic.split("/")
        # Only the wake word "computer" triggers a recording
        if message.topic == "hermes/hotword/computer/detected":
            wake_word = topic_parts[2]
            site_id = json.loads(message.payload)["siteId"]
            if site_id not in audio_file:
                return  # nothing buffered for this site yet
            filename = wake_word + "_" + str(datetime.datetime.now().timestamp()) + ".wav"
            folder = wake_word + "/" + site_id
            os.makedirs(folder, exist_ok=True)
            file_path = folder + "/" + filename
            # Cut the clip out of the end of the buffer; adjust the offsets if detection is slow
            frames = int(audio_file[site_id].frame_count())
            clip = audio_file[site_id].get_sample_slice(
                max(frames - frame_rate - 3000, 0), max(frames - 1000, 0))
            clip.export(file_path, format="wav")
            print(f"Recorded audio: {file_path}")
            audio_file[site_id] = AudioSegment.empty()
        elif topic_parts[3] == "audioFrame":
            site_id = topic_parts[2]
            if site_id not in audio_file:
                audio_file[site_id] = AudioSegment.empty()
            # Every MQTT audio frame is a small, complete WAV chunk
            audio_file[site_id] += AudioSegment.from_wav(BytesIO(message.payload))
            # Once more than 10 seconds are buffered, keep only the last 2
            frames = int(audio_file[site_id].frame_count())
            if frames > frame_rate * 10:
                audio_file[site_id] = audio_file[site_id].get_sample_slice(
                    max(frames - frame_rate * 2, 0), frames)


    # Constructor call as in paho-mqtt 1.x; 2.x changed it to take a callback API version
    mqtt_client = Client("audio_capture")
    mqtt_client.username_pw_set("mosquitto", "mosquitto")
    mqtt_client.on_message = audio_callback
    mqtt_client.connect("192.168.0.4", 1883)
    mqtt_client.subscribe("hermes/audioServer/+/audioFrame")
    mqtt_client.subscribe("hermes/hotword/+/detected")
    mqtt_client.loop_forever()
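For anyone who needs to adjust the timings, it may help to see the slice arithmetic of the script on its own. The sketch below mirrors the offsets used above (one second plus 3000 samples before the end, stopping 1000 samples short of the end); `clip_bounds`, `lead` and `tail` are hypothetical names introduced only for this illustration.

```python
from typing import Tuple


def clip_bounds(frame_count: int, frame_rate: int = 16000,
                lead: int = 3000, tail: int = 1000) -> Tuple[int, int]:
    """Return (start, end) sample indices of the clip that gets saved."""
    start = max(frame_count - frame_rate - lead, 0)  # never before the buffer start
    end = max(frame_count - tail, 0)                 # drop the newest samples
    return start, end


start, end = clip_bounds(32000)  # e.g. a 2 second buffer at 16 kHz
print(start, end, (end - start) / 16000)  # 13000 31000 1.125
```

So with the offsets as posted, the saved clip is about 1.1 seconds long. Raising `lead` keeps more audio before the detection, and raising `tail` cuts more off the end if the detection message arrives late.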