If you want a beamformer, I have one here that I am building for my Project Ears work.

It will have to be on Google Drive for now, as my phone died and I am locked out of GitHub two-factor auth until my new one arrives.

https://drive.google.com/file/d/1K0TMHi9TpyIbmCydz0A6peBDs3MSU2ND/view?usp=sharing

The beamformer works fine. I haven't totally worked out the arg parser that I inherited yet, but it is run as ./ds input_device=1 output_device=1

Run it and press Enter to stop, and it will list the params and devices.

I will set up a GitHub repo when my phone turns up.

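The speed of sound is 343 m/s, which with 48 kHz input means a single sample corresponds to about 7.15 mm of travel. My custom mic is a stereo 95 mm affair, so the largest possible delay between the two mics is 95 / 7.15, or roughly 13.3 samples. Dropping the sample rate or using a smaller mic array gives less resolution. That is what the margin setting is for: it caps the TDOA and just stops spurious errors. I guess it really should be set to 14, as 95 / 7 is the max.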
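To make the arithmetic above concrete, here is a minimal sketch of the calculation. The function names `mm_per_sample` and `max_tdoa_samples` are made up for illustration and are not taken from the shared code; rounding up to a whole sample is my assumption about how the margin would be chosen.

```cpp
#include <cassert>
#include <cmath>

// Distance sound travels during one sample period, in millimetres.
double mm_per_sample(double speed_of_sound_m_s, double sample_rate_hz) {
    assert(sample_rate_hz > 0.0);
    return speed_of_sound_m_s / sample_rate_hz * 1000.0;
}

// Largest physically possible TDOA for a given mic spacing,
// rounded up to a whole number of samples (assumed rounding choice).
int max_tdoa_samples(double mic_spacing_mm,
                     double speed_of_sound_m_s,
                     double sample_rate_hz) {
    const double step = mm_per_sample(speed_of_sound_m_s, sample_rate_hz);
    return static_cast<int>(std::ceil(mic_spacing_mm / step));
}
```

With 343 m/s, 48 kHz and a 95 mm spacing this gives a margin of 14 samples. At 16 kHz the same mic only spans 5 samples, which is why a lower sample rate loses resolution.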

It's not really what I am going to use in Project Ears, as there it will be embedded into the code. This is just an external version in C++ and my first bit of C++ hacking.

I just changed from file input to a streaming ALSA interface using PortAudio, so thanks to

If you were going to use it, set the output to a loopback device. The other side of the loopback will then act as a mic source carrying the beamformed output of the multichannel mic.

The guide "Playing with ALSA loopback devices" (Playing with Systems) is a decent one, but basically whatever you play into the sink side becomes available as a source on the other side.

So ramp up the sample rate to max and use a plughw: device to auto-resample from the loopback, as then you will get the resolution needed.

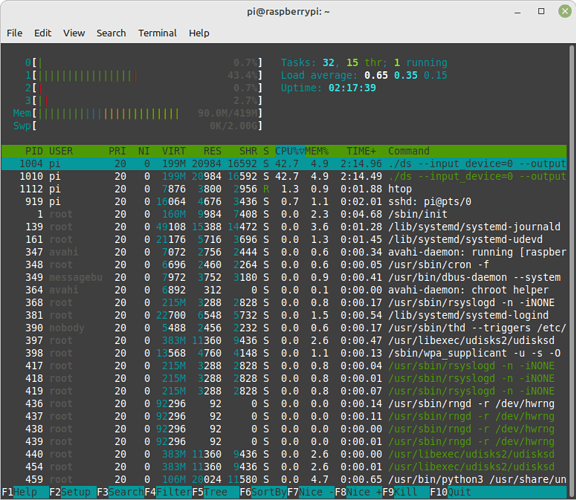

The load on the Pi Zero 2 is nearly all TDOA, which in this standalone version is running all the time.

The code is there, so it is quite easy to work out the TDOA and delay-sum beamforming. I picked delay-sum because it is such low load. It is possible to run GSC or MVDR, but as said, delay-sum is a tiny fraction of the load here.

The output and code need tidying, but do as you need, as this version is purely a working example to share. The first mic is the reference mic, so its TDOA will always be zero, and the TDOA for each additional mic is always worked out relative to that first reference mic.
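As a rough illustration of what "TDOA relative to the reference mic" means, here is a minimal sketch using plain time-domain cross-correlation. This is a swapped-in technique for clarity and is an assumption on my part, not necessarily what the shared code does. `estimate_tdoa` and `max_lag` are hypothetical names, with `max_lag` playing the role of the margin.

```cpp
#include <cassert>
#include <limits>
#include <vector>

// Returns the lag d in [-max_lag, max_lag] that maximises
// sum over i of ref[i] * mic[i + d], i.e. mic[i + d] lines up with ref[i].
// The reference mic correlated against itself gives 0.
int estimate_tdoa(const std::vector<float>& ref,
                  const std::vector<float>& mic,
                  int max_lag) {
    assert(ref.size() == mic.size());
    assert(max_lag >= 0);
    const long n = static_cast<long>(ref.size());
    int best_lag = 0;
    double best_score = -std::numeric_limits<double>::infinity();
    for (int lag = -max_lag; lag <= max_lag; ++lag) {
        double score = 0.0;
        for (long i = 0; i < n; ++i) {
            const long j = i + lag;
            if (j < 0 || j >= n) continue;  // skip samples outside the block
            score += static_cast<double>(ref[static_cast<std::size_t>(i)]) *
                     static_cast<double>(mic[static_cast<std::size_t>(j)]);
        }
        if (score > best_score) {
            best_score = score;
            best_lag = lag;
        }
    }
    return best_lag;
}
```

The nested loop costs roughly block length times (2 x max_lag + 1) multiplies per mic per block, which gives a feel for why the TDOA stage dominates the load compared with the summing stage.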

The delay-sum is such a simple piece of code. TDOA is returned in samples and is the index offset of the sample in each mic channel that gets added to the current reference sample. The sum is then simply divided by the mic count to get the time-adjusted beamform.
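The delay-sum step described above can be sketched like this. It is a minimal illustration and not a copy of the shared code: `delay_sum` is a hypothetical name, the channels are assumed to be already de-interleaved, and samples that fall outside the block are simply skipped.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// channels[0] is the reference mic; tdoa[m] is the sample offset for mic m,
// so tdoa[0] is expected to be 0. Output is the time-aligned average.
std::vector<float> delay_sum(const std::vector<std::vector<float>>& channels,
                             const std::vector<int>& tdoa) {
    assert(!channels.empty());
    assert(channels.size() == tdoa.size());
    const long n = static_cast<long>(channels[0].size());
    std::vector<float> out(static_cast<std::size_t>(n), 0.0f);
    for (long i = 0; i < n; ++i) {
        float sum = 0.0f;
        for (std::size_t m = 0; m < channels.size(); ++m) {
            const long j = i + tdoa[m];  // index of the sample to add
            if (j >= 0 && j < static_cast<long>(channels[m].size())) {
                sum += channels[m][static_cast<std::size_t>(j)];
            }
        }
        // Divide by the mic count to get the beamformed sample.
        out[static_cast<std::size_t>(i)] =
            sum / static_cast<float>(channels.size());
    }
    return out;
}
```

When the offsets are right the target adds up coherently and keeps its full level, while anything arriving from another direction is misaligned and gets averaged down.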

I am not really worried about the load, as in the application I have planned TDOA only runs on a streaming KW (keyword). TDOA is then locked for that sentence and stays static until parts of the stream provide the KW argmax.

So the TDOA only runs for a short duration, and the rest of the load will be the low-load delay-sum algorithm working on the last saved TDOA.

I don't know exactly, but something like that, as there are a few things I mean to try. It will be whatever turns out to be best whilst retaining low load. Even though it could run continually, I think the KW is a much clearer target signal than, say, just VAD, which you could also use.

I will set up a repo, and if there is anyone with a smattering of C++ who wants to clean up my nasty hacks, please do. The globals could be passed through the userdata part of the callback, but in my noobness, after failed attempts with structs, I just threw them in as globals.

Use the code as you wish, but please give credit to the above 2 repos, as all the work is theirs really. All I did was meld them together.

The new phone arrived: https://github.com/StuartIanNaylor/ProjectEars
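For anyone who wants to try the userdata route, here is a minimal sketch of the pattern. `BeamState` and `audio_callback` are hypothetical names, and the callback signature is a simplified stand-in so the example compiles without PortAudio. The real PortAudio callback takes extra timing and status arguments, but it also receives the user data as a `void*` in the last parameter, which is the pointer handed to `Pa_OpenStream`.

```cpp
#include <cassert>
#include <vector>

// Hypothetical state that would otherwise live in globals.
struct BeamState {
    int channels = 2;               // interleaved input channel count
    std::vector<int> tdoa;          // per-mic offset in samples, tdoa[0] == 0
    unsigned long frames_seen = 0;  // running total, just to show mutable state
};

// Simplified stand-in for an audio callback (assumed signature, not PortAudio's).
// It only averages the interleaved input channels to keep the sketch short.
int audio_callback(const void* input, void* output,
                   unsigned long frame_count, void* user_data) {
    assert(user_data != nullptr);
    auto* state = static_cast<BeamState*>(user_data);  // recover our struct
    const float* in = static_cast<const float*>(input);
    float* out = static_cast<float*>(output);
    const unsigned long ch = static_cast<unsigned long>(state->channels);
    for (unsigned long i = 0; i < frame_count; ++i) {
        float sum = 0.0f;
        for (unsigned long c = 0; c < ch; ++c) {
            sum += in[i * ch + c];
        }
        out[i] = sum / static_cast<float>(ch);
    }
    state->frames_seen += frame_count;
    return 0;  // 0 means "keep streaming" in this sketch
}
```

The struct just needs to outlive the stream, so declaring it in main and passing its address when opening the stream should be enough to get rid of the globals.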