Good day!

Install rhasspy in docker on a Raspberry Pi

Get access to rtsp audio stream.

Write the word “test” from the stream to a PCMA file via vlc on a desctop computer.

Recode the file from step 4 to wav via vlc

Configure recognition of the word “test” (in russian language) from the transmitted wav file

That does not work:

Tried:

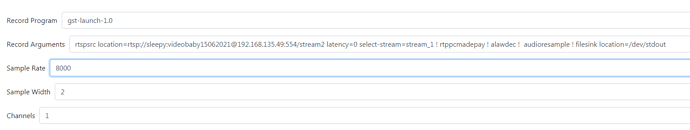

gst-launch-1.0password@192.168.135.49 : 554 / stream2 latency = 0 select-stream = stream_1! filesink location = / dev / stdout

Tried adding:

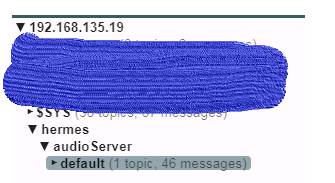

messages appear in mqtt, but when trying to recognize it, I get the “TimeOut” error.

What am I doing wrong? I do not understand encodings, format and other sound parameters.

Please post the logfile where you see this TimeOut

[ERROR:2021-07-01 07:29:41,989] rhasspyserver_hermes:main .py”, line 936, in api_listen_for_commandinit .py”, line 994, in publish_wait

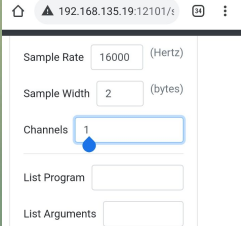

Settings for which logs were received from above

{http://192.168.135.10:8081/path/to/endpoint ”

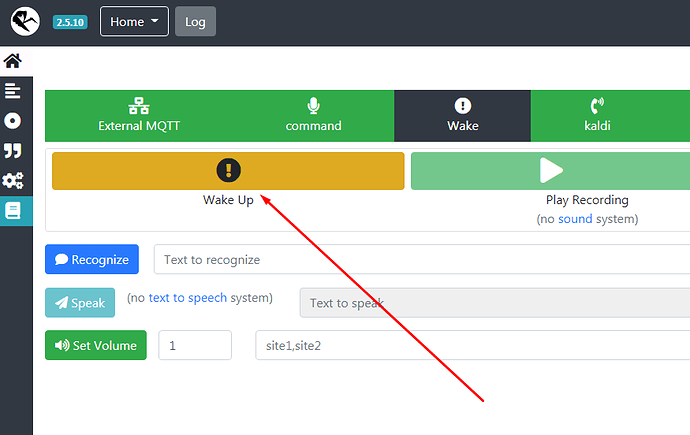

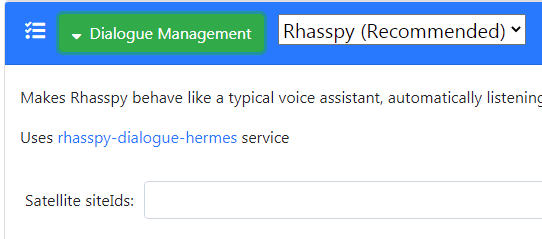

Enable the DialogieManager should fix your problem, because the hotword is detected

Sleepy:

[DEBUG:2021-07-01 07:29:11,992] rhasspyserver_hermes: ← HotwordDetected(model_id=‘default’, model_version=’’, model_type=‘personal’, current_sensitivity=1.0, site_id=‘default’, session_id=None

I tested via a button on the homepage. Expected recognition of the spoken word “test”. This effect was when sending the wav file. Am I not expecting that?

Feeling that the analysis of the stream from mqtt is not happening. Doesn’t even try. I could not find any evidence of this in the logs. Although messages appear in mqtt.

Like I said: you should enable the DialogueManager

He turned on the dialogue.

I started the recognition manually.

{

This might be triggered because of the fact that there was only silence (assuming no audioinput)

Best to do a real test when your at home

1 Like

Sleepy

July 1, 2021, 9:01am

11

I’ll check it out in the evening.

Sleepy

July 1, 2021, 4:34pm

12

Does not hear

[ERROR:2021-07-01 19:32:14,911] rhasspyserver_hermes:main .py”, line 936, in api_listen_for_commandinit .py”, line 994, in publish_wait

how do you trigger the hotword? Have you already setup some sentences?

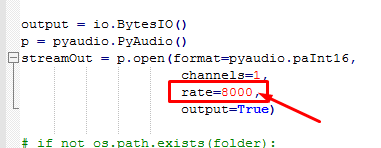

what is the samplerate on the wi-fi camera? You have set this to 8000 and width 4 in Rhasspy. It this correct with respect to the wi-fi camera?

is there a reason you have no hotword detection enabled?

try to record the audio from the stream with this file:ESP32-Rhasspy-Satellite/record.py at voco · Romkabouter/ESP32-Rhasspy-Satellite · GitHub

Change the broker ip adress and on the bottom “office” into “default”

1 Like

Sleepy

July 2, 2021, 6:49pm

14

Thanks for the debug opportunity!

But the sound is accelerated. Cartoon.

Sleepy

July 2, 2021, 7:01pm

15

Changed the rate in record.py and the voice in the text file is now normal.

Rhasspy expects 16000 16bit, maybe you can try and output that from the wifi cam

1 Like

Sleepy

July 3, 2021, 7:36pm

17

Thank you! The final version of the command parameters:

rtspsrc location=rtsp://login:password@192.168.135.49:554/stream2 latency=0 select-stream=stream_1 ! rtppcmadepay ! alawdec ! audioconvert ! audioresample ! audio/x-raw, rate=16000 ! filesink location=/dev/stdout