That is the strange thing: it is a barrier in itself, because it's a proprietary protocol that requires programs to talk ‘Rhasspy’.

Things seem strangely in reverse for both input and output programs. It's not a matter of the Linux kernel adopting the Rhasspy protocol: audio in Linux is handled by ALSA (Advanced Linux Sound Architecture), and various sound servers are built on top of that.

These are standard, high-performance Linux libraries for passing audio, yet now every program must convert to Wyoming to feed input to Rhasspy.

What has me scratching my head is why. Yes, it's a simple protocol, but why embed it into standard audio streams and make them non-standard, when it's so easy to use the kernel interfaces (ALSA) and the existing audio servers (PulseAudio, PipeWire, GStreamer) and just pass the JSON metadata as an external config file (YAML)?

Take the examples that currently work with Rhasspy, such as Voice-EN AEC, Speex-AGC, DeepFilterNet, and in fact any VAD or KWS that uses standard audio interfaces: they will now have to be converted to embed Rhasspy metadata, which renders the standard audio stream proprietary.

Take, for example, rhasspy3/wyoming.md at master · rhasspy/rhasspy3 · GitHub and its event types.

Event Types

- mic
  - Audio input
  - Outputs fixed-sized chunks of PCM audio from a microphone, socket, etc.
  - Audio chunks may contain timestamps

Even the most basic case, plugging a mic into a Linux machine, now doesn't work with Rhasspy, because the input is no longer a standard Linux kernel audio stream but this ‘chunked’ protocol!?

- wake
  - Wake word detection
  - Inputs fixed-sized chunks of PCM audio
  - Outputs name of detected model, timestamp of audio chunk

So now every wake word program needs to strip the Wyoming protocol to get back to a standard Linux audio stream, process that for a wake event, and then reassemble the result into Wyoming events, making it proprietary once more.

Also, it outputs the name of the detected model and the timestamp of the audio chunk, and what use or function that has upstream I have absolutely no idea.

I could go through each step, where it creates work on the same audio stream and injects metadata that standard programs have no need for; in fact, doing so excludes them.

I am not going to bother, but in terms of a dev talk, ignoring standard Linux kernel methods for audio and creating a proprietary protocol that only Rhasspy needs seems the opposite of this:

Event Streams

Standard input/output are byte streams, but they can be easily adapted to event streams that can also carry binary data. This lets us send, for example, chunks of audio to a speech to text program as well as an event to say the stream is finished. All without a broker or a socket!

Each event in the Wyoming protocol is:

- A single line of JSON with an object:
  - MUST have a `type` field with an event type name
  - MAY have a `data` field with an object that contains event-specific data
  - MAY have a `payload_length` field with a number > 0
- If `payload_length` is given, exactly that many bytes follow

Example:

`{ "type": "audio-chunk", "data": { "rate": 16000, "width", "channels": 1 }, "payload_length": 2048 }` <2048 bytes>
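To check the rules quoted above against the example, here is a minimal header validator plus the arithmetic for how much audio one chunk holds. The quoted example leaves the `width` value out, so `"width": 2` (16-bit samples) is my assumption, as are the function names.

```python
import json
from typing import Any, Dict


def parse_header(line: bytes) -> Dict[str, Any]:
    """Parse one event header line and check the MUST/MAY rules quoted above."""
    header = json.loads(line)
    if not isinstance(header, dict):
        raise ValueError("event must be a JSON object")
    if not isinstance(header.get("type"), str):
        raise ValueError("event MUST have a string 'type' field")
    if "data" in header and not isinstance(header["data"], dict):
        raise ValueError("'data' must be an object")
    if "payload_length" in header:
        n = header["payload_length"]
        # Treat payload_length as a byte count: an integer (not bool) > 0.
        if isinstance(n, bool) or not isinstance(n, int) or n <= 0:
            raise ValueError("'payload_length' must be a number > 0")
    return header


def chunk_ms(header: Dict[str, Any]) -> float:
    """Duration of an audio-chunk payload in milliseconds."""
    d = header["data"]
    bytes_per_second = d["rate"] * d["width"] * d["channels"]
    return header["payload_length"] * 1000 / bytes_per_second


# The quoted example, with the assumed "width": 2 filled in.
example = parse_header(
    b'{"type": "audio-chunk", "data": {"rate": 16000, "width": 2, '
    b'"channels": 1}, "payload_length": 2048}'
)
print(chunk_ms(example))
```

Under that assumption each 2048-byte chunk is 1024 samples, i.e. 64 ms of audio at 16 kHz, and every one of those chunks carries its own JSON header line.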

I am reading the above and my head is exploding, as the very things it extols as virtues exclude standard Linux protocols and replace them with something more complex, proprietary and, as far as I can tell, not needed for a simple voice system to work.

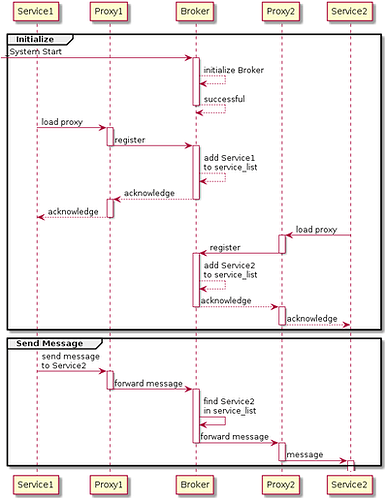

Take “All without a broker or a socket!” and look up on Wikipedia what a broker is.

Wyoming is a broker, and it's needed at every stage of the pipeline to convert back to the normal Linux audio streams that the original program, now wrapped in the Wyoming stdin/stdout broker pipeline, likely uses anyway.

This means it has to be implemented by the developers of each app, whilst many apps are already complete and work on standard audio streams. What is being said seems paradoxical.

Wyoming seems very much a continuation of the Hermes audio-like control minus the MQTT, which I never got anyway, as it seems to focus on a load of unnecessary things whilst ignoring standard Linux processes and what is needed for a modern multi-room voice server system.

If you look at the new Hass Assist, it needs only the ASR inference text and absolutely none of what Wyoming/Rhasspy is creating a non-standard protocol and workload for. What is worse, for modern multi-room systems the protocol lacks the zone/channel info that Assist needs to create a simple second-level interaction message, so that its TTS text message can return as audio to the source input.

I keep stating that because it really is that simple, but for some reason Rhasspy forces the input/output audio chain to know Rhasspy, rather than Rhasspy working with standard Linux audio streams, and so it becomes complex.

I think I am going to bail on Rhasspy3, as for me it is purely a continuation of what I perceived as wrong in 2.5. I will likely continue to play with mic hardware and KWS and generally keep an eye on voice technology.

As an example, if you are interested, I will sketch out how things should operate, so that it's not a matter of minimising barriers for programs to talk to a voice server: there simply are no barriers, as it uses standard Linux streams and protocols.

Example interoperable voice system building blocks

Rhasspy seems to assume that mics and KWS should be able to talk to Rhasspy, and so it enforces a Rhasspy protocol because Rhasspy is central.

Rhasspy has always lacked a KWS/mic server. KWS/mics are simple devices that do a specific job and have no need to know any voice server protocol, so a dev should be able to create a KWS/mic device that is interoperable not just with Rhasspy but with various voice applications.

The websocket API (GitHub - rhasspy/rhasspy3: An open source voice assistant toolkit for many human languages) was a good start, but where we do need a simple “start/stop” protocol and a simple quality metric it's lacking, and it strangely embeds a null in the binary to signify an end.

But it's not part of a KWS/mic server that aggregates input into a single stream per zone by its channel. If multiple zones are concurrent, such a server queues one (for example as a file) to be completed after the first is done, or routes it to another instance, as ASR is generally a serial process.
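A minimal sketch of that missing KWS/mic server logic, under my own assumptions: each device sends a hypothetical ‘start’ message carrying its zone, channel and a quality score; within a zone only the best channel is kept, and because ASR is serial, concurrent zones are queued and served in order. None of these names come from Rhasspy; they are purely illustrative.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Optional


@dataclass
class WakeEvent:
    """A hypothetical 'start' message from a KWS/mic device."""
    zone: str       # room the device is in, e.g. "kitchen"
    channel: str    # device/mic identifier within that zone
    quality: float  # hypothetical quality metric, higher is better


@dataclass
class ZoneArbiter:
    """Sketch of a KWS/mic server feeding a serial ASR one zone at a time."""
    active: Optional[WakeEvent] = None
    queue: Deque[WakeEvent] = field(default_factory=deque)

    def on_wake(self, events: List[WakeEvent]) -> None:
        """Handle a burst of near-simultaneous wake events."""
        best: Dict[str, WakeEvent] = {}
        for ev in events:
            # Within one zone, keep only the channel with the best quality.
            if ev.zone not in best or ev.quality > best[ev.zone].quality:
                best[ev.zone] = ev
        for ev in best.values():
            if self.active is None:
                self.active = ev       # ASR is free: stream this zone now
            else:
                self.queue.append(ev)  # ASR busy: queue this zone

    def on_stop(self) -> Optional[WakeEvent]:
        """Current stream finished; start the next queued zone, if any."""
        self.active = self.queue.popleft() if self.queue else None
        return self.active
```

The point of the design is that the devices only send audio plus a tiny start/stop message; zone selection and queueing live in one place instead of in every mic/KWS program.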

The KWS/mic server is missing, and Rhasspy forces each mic/KWS/process to embed metadata into a broker protocol, because it processes streams after receipt rather than on input, and it also assumes a single stream where multi-room connections will be processed later.

If you process local and remote connections and streams in a KWS server, then each device doesn't need to know Rhasspy or carry any embedded protocol, as the KWS server can add it; but it's not even needed if audio is ready to stream, queued and routed.

There is a whole section at the start of the audio processing system that is absent in Rhasspy, and its absence creates a whole load of complexity to do this after the fact.

Any skill such as Hass Assist needs only to send a text message to TTS, but it is missing the important KWS server zone and channel to return the audio to.

Also absent is skill metadata that initiates a KWS/mic stream, so the system cannot simply differentiate between OP command streams and secondary response streams; but that is another example of how easy and simple control should be.
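A minimal sketch of that return path, assuming the KWS/mic server tags each transcript with its zone and with whether it is a new command or a response a skill asked for. The zone-to-sink table, the sink names and the ‘intent-handler’ target are hypothetical placeholders, not anything Assist or Rhasspy defines.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class StreamKind(Enum):
    COMMAND = "command"    # stream started by a wake word
    RESPONSE = "response"  # secondary stream opened by a skill expecting a reply


@dataclass(frozen=True)
class Transcript:
    text: str
    zone: str                    # set by the KWS/mic server, not by the device
    kind: StreamKind
    skill: Optional[str] = None  # for RESPONSE streams: which skill asked


# Hypothetical zone -> output sink mapping (e.g. ALSA/PipeWire device names).
SINKS: Dict[str, str] = {"kitchen": "kitchen_speaker", "lounge": "lounge_speaker"}


def dispatch(t: Transcript) -> str:
    """Decide who receives the ASR text."""
    if t.kind is StreamKind.RESPONSE and t.skill:
        return t.skill        # the answer goes straight back to the asking skill
    return "intent-handler"   # a new command goes to normal intent handling


def tts_request(t: Transcript, reply_text: str) -> Dict[str, str]:
    """Build a TTS request that plays the reply in the zone the audio came from."""
    return {"text": reply_text, "zone": t.zone, "sink": SINKS[t.zone]}
```

With just a zone and a stream kind attached at the server, a skill only ever handles text, and the reply finds its way back to the right room.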

It's that simple, but wow. So I am going back to playing with mics, KWS and voice tech news, and will refrain from giving an honest opinion and getting more than the temporary forum ban I got last time for purely expressing an opinion.

Bemused is all I will say on this topic. Hass Assist has proven how great inference-based skills are, but a very simple KWS-server protocol seems to be missing, and the complex one proposed is likely not needed…
