Google AI Blog: Lyra: A New Very Low-Bitrate Codec for Speech Compression

It's not that much of a wow. Even though the low bitrate keeps network traffic minimal, the codec processing could be quite high, whereas audio codecs such as AMR-WB or aptX make a trade-off where minimising processor load and power usage is the main priority.

It's a sort of strange article, as “Opus has the low algorithmic delay (26.5 ms by default)[8] necessary for use as part of a real-time communication link, networked music performances, and live lip sync; by trading-off quality or bitrate, the delay can be reduced down to 5 ms.”

So the Google waffle about being comparable is just not true, especially against the likes of aptX or even AMR-WB.

It could be cool though, as it distributes the processing: the audio is sent as mel spectrograms, which are the precursor to MFCC, so for a central ASR quite a lot of load would be removed. A specification might really help, as the parameters need to be exact because they can have a big effect on the resultant image.

Rather than sending 16 kHz S16_LE audio and then converting it to a mel spectrogram, or decoding a codec to raw audio and then converting that to a mel spectrogram, it's already done.
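To make the "convert S16_LE audio to a mel spectrogram" step concrete, here is a rough stdlib-only sketch of turning one 20 ms frame into log-mel energies. The DFT size, the 40 mel bands, the Hann window and the helper names are all my own assumptions, not anything taken from Lyra, which is exactly why a shared specification of these parameters would matter.

```python
import math
import struct

SAMPLE_RATE = 16_000   # Hz, as in the post
FRAME_LEN = 320        # 20 ms of mono audio at 16 kHz
N_FFT = 512            # assumption: zero-padded DFT size
N_MELS = 40            # assumption: number of mel bands


def hz_to_mel(hz):
    return 2595.0 * math.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def decode_s16le(raw):
    """Unpack little-endian signed 16-bit PCM bytes into floats in [-1, 1)."""
    count = len(raw) // 2
    samples = struct.unpack("<%dh" % count, raw[: count * 2])
    return [s / 32768.0 for s in samples]


def power_spectrum(frame):
    """Hann-window the frame and return |DFT|^2 for bins 0..N_FFT/2.

    Plain O(N^2) DFT to stay dependency-free; real code would use an FFT.
    """
    n = len(frame)
    windowed = [x * (0.5 - 0.5 * math.cos(2.0 * math.pi * i / (n - 1)))
                for i, x in enumerate(frame)]
    power = []
    for k in range(N_FFT // 2 + 1):
        step = 2.0 * math.pi * k / N_FFT
        re = sum(x * math.cos(step * i) for i, x in enumerate(windowed))
        im = sum(x * math.sin(step * i) for i, x in enumerate(windowed))
        power.append(re * re + im * im)
    return power


def mel_filterbank():
    """Triangular filters with edges equally spaced on the mel scale."""
    top = hz_to_mel(SAMPLE_RATE / 2)
    edges = [mel_to_hz(top * i / (N_MELS + 1)) for i in range(N_MELS + 2)]
    bin_hz = [k * SAMPLE_RATE / N_FFT for k in range(N_FFT // 2 + 1)]
    bank = []
    for m in range(1, N_MELS + 1):
        left, centre, right = edges[m - 1], edges[m], edges[m + 1]
        bank.append([max(0.0, min((f - left) / (centre - left),
                                  (right - f) / (right - centre)))
                     for f in bin_hz])
    return bank


def log_mel_frame(raw, bank):
    """One frame of S16_LE bytes in, N_MELS log-mel energies out."""
    power = power_spectrum(decode_s16le(raw))
    return [math.log(sum(w * p for w, p in zip(row, power)) + 1e-10)
            for row in bank]


def sine_frame(freq_hz, amplitude=0.5):
    """Test signal: one 20 ms sine tone packed as S16_LE bytes."""
    samples = [int(amplitude * 32767 *
                   math.sin(2.0 * math.pi * freq_hz * i / SAMPLE_RATE))
               for i in range(FRAME_LEN)]
    return struct.pack("<%dh" % FRAME_LEN, *samples)
```

Calling `log_mel_frame(sine_frame(1000.0), mel_filterbank())` gives 40 values for one frame. Change the window, the DFT size or the band count and the resulting "image" changes, so sender and receiver have to agree on all of them.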

Sort of confused.com by it, as "Pairing Lyra with new video compression technologies, like AV1, will allow video chats to take place, even for users connecting to the internet via a 56kbps dial-in modem".

Who actually connects to the internet via a 56kbps dial-in modem? I guess maybe it is aimed at some new sort of ultra low-power / low-bandwidth link.

“This trick enables Lyra to not only run on cloud servers, but also on-device on mid-range phones.” This is what bemuses me: “only mid-range phones and above” is actually a really high load for an audio codec, isn't it?

Yeah, I get your point. The audio quality was a whole lot better, but as you say, is it really better?

I have been thinking about it, and the article is badly worded, as the creation of an MFCC is high load.

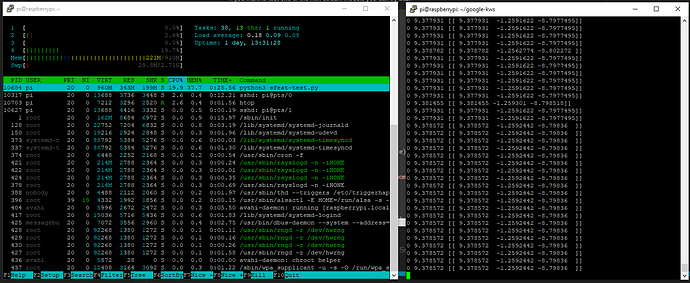

If you have a look at what I posted, with a single MFCC created and then repeatedly streamed at 20 ms to a TensorFlow Lite model, the load is massively low.
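For anyone wondering what "streamed at 20 ms to a model" might look like in code, here is a minimal sketch of one way to do it: keep a rolling window of the latest MFCC frames and score it every time a new frame arrives. The `StreamingWindow` class, the 50-frame window and the `model` callable are hypothetical stand-ins of mine; this is not the TensorFlow Lite API and not necessarily how the linked thread does it.

```python
from collections import deque

N_MFCC = 13          # coefficients per 20 ms frame, as in the thread
WINDOW_FRAMES = 50   # assumption: 50 x 20 ms = 1 s of context


class StreamingWindow:
    """Rolling buffer of MFCC frames that is scored on every new frame."""

    def __init__(self, model, window_frames=WINDOW_FRAMES):
        # `model` is any callable taking a list of frames; it stands in
        # for whatever inference call the real setup uses.
        self.model = model
        self.frames = deque(maxlen=window_frames)

    def push(self, mfcc_frame):
        """Add one frame; return the model output once the window is full."""
        if len(mfcc_frame) != N_MFCC:
            raise ValueError("expected %d coefficients" % N_MFCC)
        self.frames.append(list(mfcc_frame))  # oldest frame drops off
        if len(self.frames) < self.frames.maxlen:
            return None  # still warming up
        return self.model(list(self.frames))


def dummy_model(window):
    """Placeholder scorer: mean of the first coefficient over the window."""
    return sum(frame[0] for frame in window) / len(window)
```

Each 20 ms step only appends one small frame and runs the model, which fits the observation that the per-step load is low once the MFCC already exists.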

I am sort of getting what they are doing and, as usual, I'm bemused: if you follow the above thread, there is a 20 ms MFCC stream there running on a Pi 3B (!+).

But I guess the 13 MFCC values in a tensor that represents a 20 ms audio chunk (320 samples of 16 kHz mono audio) could be fed into a voice synthesizer, like many of the current TTS engines, to create this tremendously low-bandwidth voice connection. That is great if you live on Mars and are calling home, as if it were modelled on the caller it would sound just as normal.
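To put rough numbers on "tremendously low bandwidth", here is the back-of-envelope arithmetic for sending 13 values per 20 ms frame versus the raw audio. It assumes non-overlapping frames and ignores packet overhead; the float32 and 8-bit encodings are just my illustrative choices, not anything Lyra specifies.

```python
SAMPLE_RATE = 16_000   # Hz
FRAME_MS = 20          # one frame every 20 ms
N_MFCC = 13            # coefficients per frame


def samples_per_frame(sample_rate=SAMPLE_RATE, frame_ms=FRAME_MS):
    return sample_rate * frame_ms // 1000


def bitrate_bps(bytes_per_frame, frame_ms=FRAME_MS):
    """Bits per second when one frame of this size is sent every frame_ms."""
    frames_per_second = 1000 // frame_ms
    return bytes_per_frame * 8 * frames_per_second


raw_pcm = bitrate_bps(samples_per_frame() * 2)  # 320 samples x 2 bytes
mfcc_f32 = bitrate_bps(N_MFCC * 4)              # 13 float32 values
mfcc_u8 = bitrate_bps(N_MFCC * 1)               # 13 values quantised to 8 bits

print(raw_pcm, mfcc_f32, mfcc_u8)  # 256000 20800 5200
```

So even unquantised, the 13 coefficients are roughly a twelfth of the raw 16-bit PCM stream, and with a crude 8-bit quantisation they would fit in about 5 kbps.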

So I guess the 90 ms is creation, broadcast and then conversion to voice, which for many is meh unless it is something like the above Mars example.

But for remote voice control it's pretty amazing, as the load of creating the MFCC is already done and you can just feed it directly into a lightweight model with next to no bandwidth requirement.

Thanks for that bit of research