So the key here is that satellites are in another room with a microphone. I’d suggest that this is NOT mentioned in the “getting started” section of the manual, but is a separate use-case with a separate entry. Of course it wants to be easy to do, so the “getting started” set-up should have clearly defined “modules” that can be taken off the voice server and put on a satellite machine. Similarly, multiple microphones for beamforming should be an extension-style section of the manual. Finally, given the popularity of Pis, I’d suggest the getting started part of the manual is all about a Pi 3B. The mic-in-another-room(s) bit uses a Pi Zero 2 W, even if they are hard to get, because the migration of modules should be easy. And I’d suggest the beamforming section uses the ReSpeaker HAT for a Pi, because readers will have an interest in getting it working with flashing lights and so on.

Dunno about Pi stock, Petr, even if they are faves, but it prob deserves a discussion of its own so I created

Then…

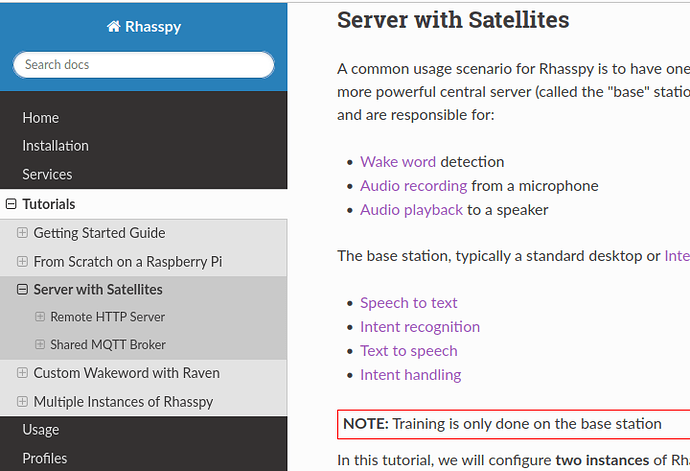

Alas, while Rhasspy was re-factored to support the base+satellite model, I am of the opinion that the documentation was “bolted on”. I did find all the information in the documentation … somewhere … after at least 3 reads through the whole thing. The first mention of base+satellite is tucked away down in the Tutorials section, and even there it isn’t fully explained (e.g. both approaches show Intent Handling as “disabled”).

Unfortunately, I think at the time it would have been a major exercise to restructure the documentation to better reflect the base+satellite approach while still satisfying the existing users. Michael also strikes me as an excellent developer, and I doubt he considers documentation fun or even a core competency.

And now…

Well, I made notes and tried building them into a tutorial for my combination (RasPi ZeroW satellite + Base running HAOS with Rhasspy add-on), and at 30 pages started feeling that there is probably a better approach than one huge document … such as a website (allowing links to audio troubleshooting tips without bogging down the main flow). The other thing is that Rhasspy is more a toolkit, with many ways it can be used … so it really wants multiple tutorials … and it would be great if they could be fitted into one framework.

It looks now as though 90% of users have adopted the base+satellite model, so it is easy to justify re-doing the official documentation, and with lots of new users maybe all that good technical information can be moved a bit to the back?

Except that with v3 in the pipeline, is there much point?

–

BTW, a few days back I tried sending you a private message offering to email my 30-page tutorial. Did you receive it?

Sorry Don, I am travelling at the moment and working off a phone and shared machines. I would love to see your 30-pager, but can I leave it another 2 weeks, when I will have a real machine and more time?

Of course!

There seem to be several new users asking for help setting up, so maybe I should buckle down and finish my tutorial now.

Also, perhaps a crazier idea (maybe Rhasspy v4 or v5) would be using plugins compiled to WASM. Honestly, I don’t know much about how it actually works, but from reading and trying to stay on top of it, it seems like you would not need to rely on the OS to call out to CLI programs and could just execute the plugins directly.

Weird benefits are that the plugins could be easily downloadable, cross-platform and maybe cross-architecture. The biggest downside is that there are only a few languages that can currently compile to WASM (C and Rust, and I think Ruby), and I have no idea what interacting with a WASM object from Python looks like.

The tech might be a little too new atm, so it might be more of a future endeavour. It just seems to be the current hype machine around application packaging.

Websockets would be great! It would be a lot easier to use for satellite communication.

Also, external training would fix the issue that a Raspberry Pi is sometimes too slow and times out.

It could be just a plain socket, but the ease of use and the guarantee of order and delivery of TCP vs UDP is a big bonus.

Websockets has already been written and neatly differentiates text and binary packets, which also makes it super easy to separate binary audio from text protocol messages.
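To illustrate the text/binary split, here is a minimal sketch of a receive-side dispatcher. It assumes a client library that hands text frames over as `str` and binary frames as `bytes` (the Python `websockets` package behaves this way). The function name `route_message`, the JSON event format and the `"type"` field are made up for the example and are not part of any Rhasspy protocol.

```python
import json


def route_message(message, audio_chunks, events):
    """Sort one received WebSocket message into audio or protocol events.

    Assumption: binary frames carry raw audio, text frames carry JSON.
    """
    if isinstance(message, (bytes, bytearray)):
        # Binary frame: treat as a chunk of raw audio and keep it as-is.
        audio_chunks.append(bytes(message))
        return "audio"
    if isinstance(message, str):
        # Text frame: treat as a JSON-encoded protocol message.
        events.append(json.loads(message))
        return "event"
    raise TypeError(f"unexpected message type: {type(message).__name__}")


# Usage with two hand-made messages (no network involved):
audio, events = [], []
route_message(b"\x00\x01\x02\x03", audio, events)
route_message('{"type": "wake"}', audio, events)
```

No framing header or length prefix has to be invented to tell audio from control messages, because the frame type already carries that information.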

Websockets is low latency and it’s 1-to-1, so it doesn’t broadcast across networked nodes, just to the destination.

It’s included in SDKs such as Arduino and ESP32 and scales all the way up, as it is pretty much universally adopted. There are others, such as gRPC, but microcontroller libs often have less support for them.

Websockets really should have been a no-brainer.

I have been testing hardware and updating my simple beamformer code, and really we have very few choices that are worthwhile.

The Adafruit Voice Bonnet is good but, for a ‘soundcard’, prob too expensive, as the ReSpeaker 2-mic is also OK but $10.

The Keyestudio and other 2-mic clones are often noisy and definitely e-waste. Not sure where it is, as I just got another, but be careful where you get your ReSpeaker from, as the 1st one I got was the same as the clones. This 2nd one is working fine.

I have GitHub - StuartIanNaylor/2ch_delay_sum: 2 channel delay sum beamformer

/tmp/ds-out is the current TDOA (time difference of arrival).

/tmp/ds-in, if it exists, sets the beam to the integer in the file; to clear, just delete it.
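As a rough illustration of driving those two files from another process, here is a small Python sketch. It assumes both files hold a single integer as plain text; the exact format the beamformer reads and writes is not stated above, so check the repository before relying on it. The helper names `read_tdoa`, `set_beam` and `clear_beam` are made up for this example.

```python
from pathlib import Path


def read_tdoa(path="/tmp/ds-out"):
    """Return the current TDOA as an int, or None if unavailable.

    Assumption: the file contains one integer as plain text.
    """
    try:
        return int(Path(path).read_text().strip())
    except (FileNotFoundError, ValueError):
        return None


def set_beam(value, path="/tmp/ds-in"):
    """Fix the beam by writing an integer into the control file."""
    Path(path).write_text(str(int(value)))


def clear_beam(path="/tmp/ds-in"):
    """Release the fixed beam by deleting the control file, if present."""
    Path(path).unlink(missing_ok=True)  # missing_ok needs Python 3.8+
```

For example, a wake-word handler could call `set_beam(read_tdoa())` when the keyword fires to hold the beam on the speaker (after checking the value is not `None`), then `clear_beam()` once the command has been captured.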

If anyone has even a touch of C/C++ finesse then feel free to clone and tidy.
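For anyone new to the idea, the core of a 2-channel delay-and-sum beamformer can be sketched in a few lines of Python. This is not the code from the repository above, just an illustration of the general technique: shift one channel by the TDOA (in whole samples) so the two microphones line up, then average them. The function name and the sign convention (positive delay means the right channel lags the left) are assumptions for this example.

```python
def delay_and_sum(left, right, delay):
    """Align two equal-length channels by `delay` samples and average them.

    Convention (an assumption for this sketch): delay > 0 means the sound
    reaches the right mic `delay` samples after the left mic.
    Samples shifted in from outside the buffer are treated as silence.
    """
    if len(left) != len(right):
        raise ValueError("channels must have the same length")
    n = len(left)
    out = []
    for i in range(n):
        if delay >= 0:
            # Right lags: delay the left channel to match it.
            j = i - delay
            l = left[j] if 0 <= j < n else 0.0
            r = right[i]
        else:
            # Left lags: delay the right channel to match it.
            j = i + delay
            l = left[i]
            r = right[j] if 0 <= j < n else 0.0
        out.append(0.5 * (l + r))
    return out


# A click that hits the right mic 2 samples after the left mic.
left = [0, 0, 1, 0, 0, 0]
right = [0, 0, 0, 0, 1, 0]
aligned = delay_and_sum(left, right, 2)    # one full-height peak
unaligned = delay_and_sum(left, right, 0)  # two half-height peaks
```

Sound arriving from the steered direction adds up coherently, while sound from other directions is smeared and attenuated, which is why the beam setting matters.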

There is also the Plugable USB Audio Adapter – Plugable Technologies with 2 channels, or any el cheapo USB with a mic if you are not going to beamform.